Large models have fundamentally reshaped text and image generation, yet speech has remained hard to edit as intuitively as “editing a sentence.” That is starting to change with the recent release of Step-Audio-EditX, a new open-source project from StepFun AI.

The ambitious goal of Step-Audio-EditX is to empower developers to “edit the emotion, tone, style, and even breath sounds in speech as directly as modifying a line of text.”

What is Step-Audio-EditX

Step-Audio-EditX is built on a 3-billion-parameter Audio Large Language Model (Audio LLM).

It treats audio editing as a controllable token-level operation, analogous to editing text, rather than as a traditional waveform-level signal processing task.

Key features of Step-Audio-EditX

1. From “Imitating Voice” to “Precise Control”

Most current zero-shot TTS systems can only replicate emotion, accent, and timbre from short reference audio clips. While these often sound natural, they lack precise controllability. Stylistic cues in the input text are frequently ignored, leading to inconsistent performance, especially in cross-lingual and cross-style tasks.

Step-Audio-EditX takes a radically different approach. Instead of relying on complex disentangled encoder architectures, it achieves controllability by altering the data structure and training objectives. The model is trained on massive datasets of speech pairs and triplets where the text is identical but vocal attributes differ significantly. This enables it to learn how to adjust emotion, style, and paralinguistic signals while keeping the underlying text content unchanged.
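To make the data structure concrete, here is a minimal sketch of how same-text pairs with differing attributes could be assembled. It is an illustration only, not StepFun's actual pipeline: the `Utterance` fields, the single `emotion` label, and the `build_edit_pairs` helper are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass
from itertools import combinations
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Utterance:
    # Hypothetical record layout; the real dataset schema is not described in the article.
    text: str
    emotion: str
    audio_path: str


def build_edit_pairs(utterances: List[Utterance]) -> List[Tuple[Utterance, Utterance]]:
    """Pair recordings that share the same text but differ in emotion label."""
    by_text: Dict[str, List[Utterance]] = defaultdict(list)
    for utt in utterances:
        by_text[utt.text].append(utt)

    pairs: List[Tuple[Utterance, Utterance]] = []
    for group in by_text.values():
        for a, b in combinations(group, 2):
            # Keep only pairs whose attribute differs, so the text stays
            # constant while the vocal attribute changes.
            if a.emotion != b.emotion:
                pairs.append((a, b))
    return pairs


corpus = [
    Utterance("hello", "neutral", "a.wav"),
    Utterance("hello", "happy", "b.wav"),
    Utterance("hello", "neutral", "c.wav"),
    Utterance("bye", "sad", "d.wav"),
]
pairs = build_edit_pairs(corpus)
```

In this toy corpus only the "hello" recordings form pairs, and the two "neutral" recordings are never paired with each other because nothing differs between their labels.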

2. Dual Codebook Tokenization and 3B Audio LLM Architecture

Step-Audio-EditX builds upon the Step-Audio framework’s Dual Codebook Tokenizer:

- Linguistic Stream: A token rate of 16.7 Hz, with a codebook of 1024 entries.

- Semantic Stream: A token rate of 25 Hz, with a codebook of 4096 entries.

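These two streams are interleaved in a 2:3 ratio, with every two linguistic tokens paired with three semantic tokens. The ratio matches the two token rates (16.7 Hz to 25 Hz) and helps preserve the prosodic and emotional characteristics of the speech.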
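The 2:3 interleaving can be sketched in a few lines. The version below is a rough illustration under stated assumptions, not the tokenizer's real implementation: the function name, the choice to drop trailing tokens that do not fill a complete group, and the ID offset used to keep the two codebooks from colliding in one vocabulary are all assumptions.

```python
from typing import List

LINGUISTIC_CODEBOOK_SIZE = 1024  # codebook size reported for the 16.7 Hz stream
SEMANTIC_CODEBOOK_SIZE = 4096    # codebook size reported for the 25 Hz stream


def interleave_dual_codebook(
    linguistic: List[int],
    semantic: List[int],
    semantic_offset: int = LINGUISTIC_CODEBOOK_SIZE,
) -> List[int]:
    """Merge two token streams in a repeating pattern of 2 linguistic + 3 semantic tokens.

    Semantic IDs are shifted by `semantic_offset` so that both streams can share
    one ID space (an assumption made for this sketch).
    """
    merged: List[int] = []
    # Each group covers roughly 120 ms: 2 tokens at 16.7 Hz, 3 tokens at 25 Hz.
    n_groups = min(len(linguistic) // 2, len(semantic) // 3)
    for g in range(n_groups):
        merged.extend(linguistic[2 * g : 2 * g + 2])
        merged.extend(tok + semantic_offset for tok in semantic[3 * g : 3 * g + 3])
    return merged


merged = interleave_dual_codebook([1, 2, 3, 4], [10, 11, 12, 13, 14, 15])
# merged == [1, 2, 1034, 1035, 1036, 3, 4, 1037, 1038, 1039]
```

Because two linguistic tokens and three semantic tokens span about the same duration, each group of five tokens stays aligned in time.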

Leveraging this foundation, the research team constructed a compact 3-billion-parameter Audio LLM. The model was initialized with a pre-trained text LLM and trained on a mixed corpus with a 1:1 ratio of text to audio tokens. It can accept either text or audio tokens as input and consistently generates a sequence of dual codebook tokens.

Audio reconstruction is handled by a separate decoder: a Flow Matching Diffusion Transformer module predicts the mel-spectrogram, which is then converted into a waveform by a BigVGANv2 vocoder. This entire module was trained on 200,000 hours of high-quality speech data, significantly enhancing the naturalness of timbre and prosody.

Step-Audio-Edit-Test: The AI Evaluation Standard

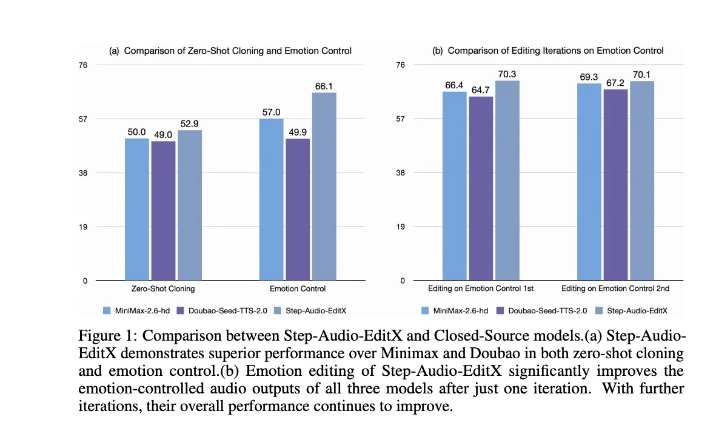

To quantitatively measure control capabilities, the team introduced the Step-Audio-Edit-Test benchmark. It employs Gemini 2.5 Pro as an evaluator, assessing performance across three dimensions: emotion, style, and paralinguistics.

The results demonstrate significant improvements:

- Chinese emotion accuracy increased from 57.0% to 77.7%.

- Style accuracy rose from 41.6% to 69.2%.

- Similar performance gains were observed for English tasks.

The average score for paralinguistic editing also rose from 1.91 to 2.89, approaching the level of mainstream commercial systems. Step-Audio-EditX was also shown to improve audio produced by closed-source systems such as GPT-4o mini TTS and ElevenLabs v2 when used to edit their output.
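For readers who want the size of these gains at a glance, the short calculation below derives absolute and relative improvements from the before and after figures quoted above. The `gains` helper is a hypothetical convenience function, and the derived numbers are simple arithmetic on the reported values, not additional results from the benchmark.

```python
from typing import Dict, Tuple

# Before/after figures as quoted in this article.
reported: Dict[str, Tuple[float, float]] = {
    "Chinese emotion accuracy (%)": (57.0, 77.7),
    "Style accuracy (%)": (41.6, 69.2),
    "Paralinguistic score": (1.91, 2.89),
}


def gains(before: float, after: float) -> Tuple[float, float]:
    """Return (absolute gain, relative gain in percent), rounded for display."""
    absolute = round(after - before, 2)
    relative_pct = round(100 * (after - before) / before, 1)
    return absolute, relative_pct


for name, (before, after) in reported.items():
    absolute, relative_pct = gains(before, after)
    print(f"{name}: +{absolute} ({relative_pct}% relative)")
```

For the two accuracy metrics the absolute gain is in percentage points (20.7 and 27.6), while the paralinguistic gain of 0.98 is in score units.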

Final Words on Step-Audio-EditX

Step-Audio-EditX represents a genuine leap forward in controllable speech synthesis. By abandoning traditional waveform-level signal manipulation in favor of a discrete token-based foundation, combined with large-margin learning and reinforcement optimization, it brings the fluidity of voice editing closer than ever to the experience of editing text.

In a significant move for the research community, StepFun AI has chosen to open-source the entire stack, including model weights and training code. This dramatically lowers the barrier to entry for voice editing research. It heralds a future where developers can precisely control the emotion, tone, and paralinguistic features of speech with the same ease as modifying written text.
