Meta Platforms announced significant enhancements to parental control features for artificial intelligence chatbots, providing families with greater oversight of how teenagers interact with AI characters across its social platforms.

The new controls, scheduled to roll out in early 2026, come as the social media giant faces growing criticism over potential harms to minors from AI interactions, including lawsuits alleging that chatbot conversations contributed to teen suicides.

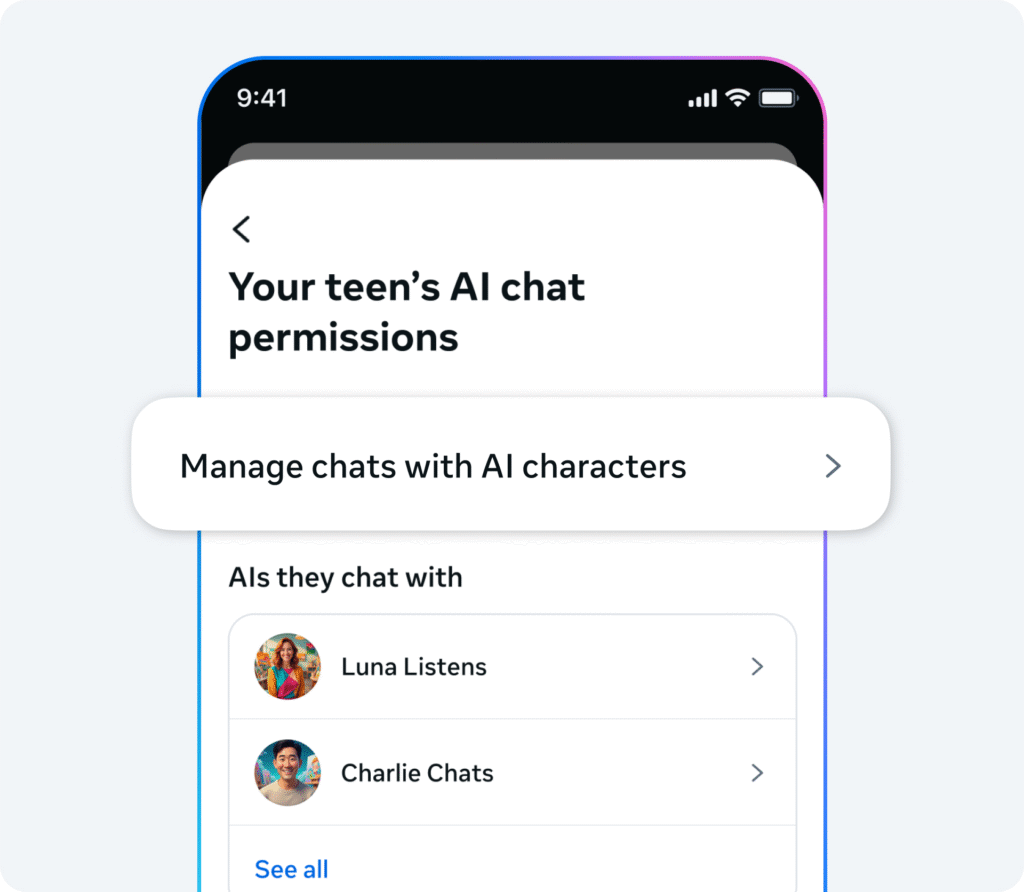

The company said parents will gain three key supervisory capabilities: turning off one-on-one chats with AI characters entirely, blocking specific AI characters, and seeing insights into the topics their teens discuss with these digital companions.

What Are the New Meta Parental Controls?

Meta’s updated safety framework centers on giving parents concrete controls while keeping its core AI assistant available for educational use. The changes are among the most substantial adjustments to Meta’s youth protection policies since reports surfaced in August that its chatbots were engaging in romantic conversations with minors.

1. “Master Switch”

The cornerstone of Meta’s new approach is what might be termed a “master switch” for AI interactions. This feature allows parents to completely disable one-on-one chats between their teenagers and AI characters.

This option gives parents a straightforward way to rule out unsupervised conversations with AI characters altogether. Importantly, it does not affect Meta’s primary AI assistant, which the company says “will remain available to offer helpful information and educational opportunities, with default, age-appropriate protections in place”.

2. “Insights” Feature

Beyond blocking access, Meta is introducing what it calls “insights”: a feature that shows parents the general topics their teens are discussing with AI chatbots without exposing the full conversation history. The approach aims to balance parental oversight with teen privacy, giving families enough information to spot potential concerns without revealing the details of private conversations.

3. Content Restrictions and PG-13 Guidance

Parallel to these parental controls, Meta is implementing stricter content guidelines for AI interactions with teens. The company has committed to restricting its AI characters from engaging in age-inappropriate discussions about:

- Self-harm, suicide, or disordered eating

- Romantic or sensual content

- Extreme violence, sex, or drug use

Teen AI experiences will now be guided by the PG-13 movie rating, a standard for content that may be unsuitable for younger children but is generally acceptable for teenagers. The rating applies not only to AI chats but also to the broader Instagram experience: teen accounts will default to PG-13 content, and teens cannot change that setting without a parent’s permission.

Who Can Use Meta Parental Controls First?

Meta will implement these changes through a staggered rollout beginning with Instagram in early 2026. The initial launch will focus on English-speaking markets.

This phased approach allows Meta to refine the features based on initial user feedback before expanding to global markets. The company said the controls represent part of its ongoing commitment to “digital responsibility” as AI technologies continue to evolve.

The announcement comes amid increased regulatory attention, with the Federal Trade Commission launching an inquiry into how AI chatbots potentially harm children and teenagers. Similar safety concerns have prompted other tech companies to act, with OpenAI rolling out its own parental controls for ChatGPT last month following a lawsuit involving a teen’s suicide.

Final Words on Meta Parental Controls

Meta describes these enhanced parental controls as part of an ongoing safety evolution rather than a final solution. The company stated the updates aim to “provide parents with helpful tools that make things simpler for them” while balancing teen privacy and safety.

The controls follow reports of AI chatbots engaging in inappropriate conversations with minors, and child advocacy groups have expressed skepticism about Meta’s motivations. Even so, the updates are part of a continuing industry effort to address the challenges posed by AI integration.

As AI companions become more common among teenagers, Meta’s initiative mirrors similar safeguards elsewhere in the industry. How effective these measures prove will depend both on how well they are implemented and on whether parents consistently use them.
