OpenAI has released two open-weight models for AI safety work: gpt-oss-safeguard-120b and gpt-oss-safeguard-20b.

The models are aimed at teams that want safety classifiers they can adapt and inspect. Built as an extension of the existing gpt-oss series, they specialize in content classification and moderation against policies that the user writes.

Key features of gpt-oss-safeguard

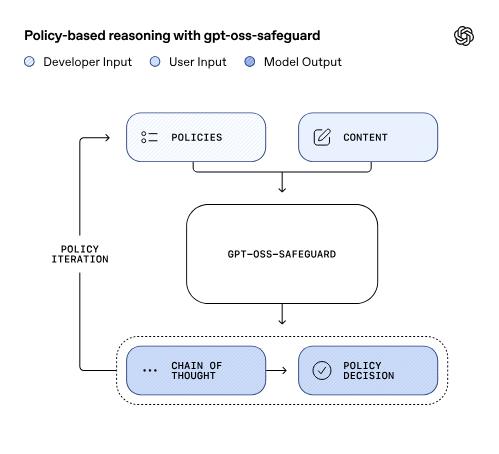

What sets gpt-oss-safeguard apart from conventional safety classifiers is that it reads a safety policy at inference time and reasons about it, instead of relying on a definition of harm that was fixed during training.

- Custom Policy-Driven Classification: Unlike conventional models with fixed safety parameters, this family lets developers supply their own detailed safety guidelines alongside the content to be analyzed. The model then classifies the content based entirely on those rules, so the same model can enforce different policies for different products or communities.

- Reasoning and Explainability: A standout feature is the model’s explanatory output. It doesn’t merely deliver a binary “safe/unsafe” verdict; it provides the reasoning behind its decision. This transparency is crucial for auditing, debugging safety systems, building trust with end-users, and helping safety teams understand the “why” behind each flag.

- Flexible Policy Iteration: Since the safety policy is applied at the inference stage, developers can rapidly test, adjust, and refine their guidelines without the prohibitive cost and time investment of retraining the entire model. This agility is essential for keeping pace with new and evolving online threats or changing community standards.

- Versatile Content Classification: The models are designed to handle a wide array of textual formats. This includes single user prompts, lengthy responses generated by large language models (LLMs), and complete multi-turn conversation histories, making them suitable for diverse moderation scenarios.
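To make the policy-at-inference idea concrete, here is a minimal Python sketch of how a classification request might be assembled and the answer read back. It assumes a chat-style interface in which the policy goes in the system message and the content to classify goes in the user message, and it assumes the policy asks the model to finish with a line such as `VERDICT: VIOLATES`. The policy text, the verdict format, and the helpers `render_content`, `build_messages`, and `parse_verdict` are all hypothetical; the actual model call is left out, and the exact prompt format the models expect should be checked against their model card.

```python
from typing import Dict, List, Tuple, Union

Turn = Dict[str, str]

# Hypothetical policy written by the platform, not an official template.
POLICY = """\
Classify the content against this policy.
VIOLATES: offers to buy or sell stolen account credentials.
ALLOWED: general discussion of account security.
Explain your reasoning, then end with exactly one line:
VERDICT: VIOLATES or VERDICT: ALLOWED"""


def render_content(content: Union[str, List[Turn]]) -> str:
    """Flatten a single prompt, a single response, or a multi-turn conversation."""
    if isinstance(content, str):
        return content
    # Prefix each turn with its role so the model can tell speakers apart.
    return "\n".join(f"{turn['role']}: {turn['content']}" for turn in content)


def build_messages(policy: str, content: Union[str, List[Turn]]) -> List[Turn]:
    """Assumed layout: policy as the system message, content as the user message."""
    return [
        {"role": "system", "content": policy},
        {"role": "user", "content": render_content(content)},
    ]


def parse_verdict(output: str) -> Tuple[str, str]:
    """Split model output into (verdict, rationale) using the last VERDICT: line."""
    lines = output.strip().splitlines()
    for i in range(len(lines) - 1, -1, -1):
        line = lines[i].strip()
        if line.upper().startswith("VERDICT:"):
            verdict = line.split(":", 1)[1].strip().upper()
            rationale = "\n".join(lines[:i]).strip()
            return verdict, rationale
    # No verdict line found: mark as UNKNOWN so a human can review it.
    return "UNKNOWN", output.strip()


# Usage: a short conversation to classify.
conversation = [
    {"role": "user", "content": "Where can I buy hacked streaming logins?"},
    {"role": "assistant", "content": "I can't help with that."},
]
messages = build_messages(POLICY, conversation)
# `messages` would be sent to the model; a made-up reply is parsed here.
verdict, rationale = parse_verdict("The user asks to buy stolen logins.\nVERDICT: VIOLATES")
print(verdict)    # VIOLATES
print(rationale)  # The user asks to buy stolen logins.
```

Keeping the rationale next to the verdict is what makes the explanatory output useful downstream: the verdict drives the moderation action, while the rationale can be shown to reviewers or stored for later audits.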

What is gpt-oss-safeguard used for?

OpenAI has identified several practical applications where gpt-oss-safeguard offers distinct advantages over traditional, fixed classifiers:

- Rapid Adaptation to Evolving Harms: When a new kind of online risk appears, or an existing one changes quickly, a platform can respond by updating its written policy. Because the model applies whatever policy it is given at inference time, the revised rules take effect on the next request, with no retraining step in between.

- Niche and Complex Domains: In specialized industries such as legal, medical, or financial services, where context matters and harm is often subtle, the model's reasoning is an advantage. It can work through complex scenarios that would likely confuse a simpler classifier with limited contextual understanding.

- Overcoming Data Scarcity: Developing a high-performance traditional classifier typically requires access to massive, meticulously labeled datasets. gpt-oss-safeguard bypasses this bottleneck, enabling organizations to enforce sophisticated safety standards based solely on well-articulated policies, even in the absence of extensive training data.

- High-Stakes Auditing and Compliance: In settings where decision quality, accuracy, and a clear audit trail matter more than raw speed, such as regulatory compliance or critical Trust & Safety operations, these models are a strong fit. The explanatory output provides the documentation needed for reviews and legal scrutiny.
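For the auditing use case, and for teams that revise their policy often, one way to keep a usable trail is to store each decision together with a fingerprint of the exact policy text that produced it. A reviewer can then tell which policy version was in force for any given decision. The sketch below is a minimal illustration assuming decisions are stored as JSON lines; the record fields and the names `policy_fingerprint`, `AuditRecord`, `make_record`, and `to_jsonl` are hypothetical, not part of any OpenAI tooling.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


def policy_fingerprint(policy: str) -> str:
    """Short, stable ID for a policy version: first 16 hex chars of SHA-256."""
    return hashlib.sha256(policy.encode("utf-8")).hexdigest()[:16]


@dataclass(frozen=True)
class AuditRecord:
    content_id: str
    policy_id: str   # fingerprint of the policy text used for this decision
    verdict: str
    rationale: str   # the model's explanation, kept verbatim for reviewers
    model: str
    decided_at: str  # UTC timestamp in ISO 8601 format


def make_record(content_id: str, policy: str, verdict: str, rationale: str,
                model: str = "gpt-oss-safeguard-20b") -> AuditRecord:
    return AuditRecord(
        content_id=content_id,
        policy_id=policy_fingerprint(policy),
        verdict=verdict,
        rationale=rationale,
        model=model,
        decided_at=datetime.now(timezone.utc).isoformat(),
    )


def to_jsonl(record: AuditRecord) -> str:
    """One JSON object per line, keys sorted so stored lines diff cleanly."""
    return json.dumps(asdict(record), sort_keys=True)


# Usage: the same post judged under two versions of a (hypothetical) policy.
v1 = "VIOLATES: offers to sell stolen credentials."
v2 = v1 + "\nVIOLATES: offers to share credential-stuffing tools."
before = make_record("post-123", v1, "ALLOWED", "No sale is offered.")
after = make_record("post-123", v2, "VIOLATES", "Links a credential-stuffing tool.")
print(before.policy_id != after.policy_id)  # True
print(to_jsonl(after))
```

Hashing the policy text rather than relying on a hand-maintained version number means any edit, however small, produces a new `policy_id`, so decisions made before and after a rapid policy update never get mixed up in a review.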

Limitations of gpt-oss-safeguard

gpt-oss-safeguard is a specialized tool rather than a wholesale replacement for existing safety infrastructure, and OpenAI is open about its current limitations:

- Performance Compared to Highly Tuned Classifiers: For specific, high-risk classification tasks, a traditional classifier exhaustively trained on hundreds of thousands of domain-specific, hand-labeled examples may still achieve higher precision and recall. Organizations with ample resources for data labeling may find a dedicated, fine-tuned model more accurate for their most critical needs.

- Latency and Resource Consumption: The sophisticated reasoning and policy interpretation processes are computationally intensive. This results in higher latency and resource usage, making gpt-oss-safeguard less suited for ultra-high-throughput, real-time content filtering where millisecond-level response times are mandatory. For initial, large-volume screening, more lightweight classifiers remain the practical choice.
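The division of labor described above (a lightweight classifier for bulk screening, gpt-oss-safeguard for the cases that need reasoning) is commonly arranged as a cascade. The sketch below assumes the fast classifier returns a violation probability between 0 and 1 and that only the uncertain middle band is escalated. The thresholds, the `route` function, and the two toy classifiers are hypothetical stand-ins, not real models.

```python
from typing import Callable, Tuple

FastClassifier = Callable[[str], float]  # returns P(violation) in [0, 1]
SlowClassifier = Callable[[str], str]    # returns "VIOLATES" or "ALLOWED"


def route(text: str, fast: FastClassifier, slow: SlowClassifier,
          allow_below: float = 0.2, block_above: float = 0.9) -> Tuple[str, str]:
    """Return (verdict, stage), where stage records which model decided."""
    score = fast(text)
    if score < allow_below:
        return "ALLOWED", "fast"
    if score > block_above:
        return "VIOLATES", "fast"
    # Ambiguous band: pay the latency cost of the policy-reasoning model.
    return slow(text), "safeguard"


def toy_fast(text: str) -> float:
    # Stand-in scorer: fraction of keywords present, not a real model.
    keywords = ("stolen", "combo list", "logins")
    return sum(k in text.lower() for k in keywords) / len(keywords)


def toy_slow(text: str) -> str:
    # Stand-in for a call to the policy-reasoning model.
    return "VIOLATES" if "sell" in text.lower() else "ALLOWED"


print(route("Nice weather today", toy_fast, toy_slow))        # ('ALLOWED', 'fast')
print(route("Selling stolen logins", toy_fast, toy_slow))     # ('VIOLATES', 'safeguard')
print(route("stolen combo list logins", toy_fast, toy_slow))  # ('VIOLATES', 'fast')
```

Only traffic in the middle band pays the higher latency. Widening the band sends more cases to the explainable review; narrowing it keeps throughput closer to that of the lightweight classifier.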

Final Thoughts on gpt-oss-safeguard

gpt-oss-safeguard is OpenAI's first set of open safety models developed in direct collaboration with the community. Development included early testing and feedback rounds with trust and safety experts from partners such as ROOST and Discord, with the goal of making the models hold up on real-world moderation work.

By releasing the model weights under a permissive license, OpenAI puts these safety tools within reach of anyone who wants to use them. Developers and organizations can build safety layers that match their own legal frameworks, ethical principles, and product ecosystems.

The gpt-oss-safeguard-120b and gpt-oss-safeguard-20b models are available for public download on Hugging Face, and OpenAI invites the AI community to integrate, experiment with, and build on this policy-driven approach to AI safety.
