On August 7, 2025, OpenAI officially released GPT-5, marketed as “the smartest, fastest, and most useful” AI model ever. Within hours, global attention exploded. Two days earlier, on August 5, Anthropic had gotten ahead of the launch by unveiling Claude Opus 4.1. The showdown between GPT-5 and Claude 4.1 has begun. AGIYes compares the two giants from both everyday-user and developer perspectives to help you choose the AI model that best fits your needs.

GPT-5 vs Claude 4.1: Everyday-User Perspective

For non-technical users, ease of use, price, and safety usually come first. On these three fronts, GPT-5 and Claude 4.1 show strikingly different characteristics.

GPT-5 vs Claude 4.1 – Ease of Onboarding

GPT-5 continues OpenAI’s “works-out-of-the-box” philosophy; no setup is required. Inside ChatGPT, the system auto-selects either the “fast model” or the “deep-reasoning model” based on question complexity, sparing users from manual switching. This seamless experience makes GPT-5 the go-to for tech novices.

Claude 4.1’s entry points are more fragmented: Slack, the web, or a standalone app. Although Anthropic offers API and cloud integrations, the multiple gateways steepen the learning curve for ordinary users. Still, power users who like to “tinker” may prefer Claude’s Workbench sandbox.

GPT-5 vs Claude 4.1 – Pricing

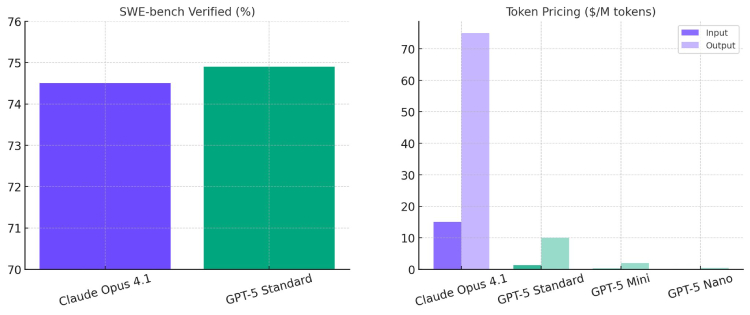

OpenAI adopted an aggressive pricing strategy: stronger performance at lower cost. Free users get basic GPT-5 (with limits). The Plus ($20/mo) and Pro ($200/mo) tiers unlock higher usage caps. API costs drop to $1.25 per million input tokens and $10 per million output tokens, 50 % cheaper than the prior generation.

Claude 4.1 also offers free access, but Anthropic has throttled high-frequency usage due to rising costs. Even the $200/mo plan caps Opus 4.1 usage at 24–40 hours. API pricing is $0.008 per 1 k input tokens and $0.024 per 1 k output tokens (equivalent to $8 and $24 per million), making it pricier than GPT-5. One plus: Claude 4.1’s 200 k token context window remains advantageous for very long documents.
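To make the price gap concrete, here is a minimal sketch that estimates the API bill for a given workload. It uses only the per-token figures quoted in this article, converted to a per-million basis; the model labels and the `estimate_cost` helper are illustrative names, not vendor identifiers, and real prices should be checked against each vendor's pricing page.

```python
# Prices in USD per one million tokens, as quoted in this article.
# These are illustrative inputs, not authoritative price data.
PRICES_PER_MILLION = {
    "gpt-5": {"input": 1.25, "output": 10.00},
    # The article quotes Claude per 1 k tokens (0.008 / 0.024),
    # which is 8.00 / 24.00 per million tokens.
    "claude-opus-4.1": {"input": 8.00, "output": 24.00},
}


def estimate_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the estimated cost in USD for one workload."""
    price = PRICES_PER_MILLION[model]
    total = input_tokens * price["input"] + output_tokens * price["output"]
    return total / 1_000_000


if __name__ == "__main__":
    # Hypothetical monthly workload: 2M input tokens, 500k output tokens.
    for model in PRICES_PER_MILLION:
        cost = estimate_cost(model, input_tokens=2_000_000, output_tokens=500_000)
        print(f"{model}: ${cost:.2f}")
```

With the article's figures, this hypothetical workload comes to $7.50 on GPT-5 and $28.00 on Claude 4.1.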

GPT-5 vs Claude 4.1 – Safety

On content safety, the two models diverge. GPT-5 introduces “Safe Completions,” which no longer flat-out refuse sensitive queries but attempt safe, hedged answers. Tests show a clear safety jump over GPT-4, yet carefully crafted adversarial prompts can still slip through.

Claude 4.1 keeps Anthropic’s strict filtering: violence, illegal activity, and similar topics trigger an immediate refusal, achieving a 98.76 % harmless-response rate under Constitutional AI. This “better safe than sorry” stance can feel rigid and occasionally over-cautious.

GPT-5 vs Claude 4.1: Developer Perspective

For developers, raw performance, cost, and controllability directly affect project success. GPT-5 and Claude 4.1 each shine—and stumble—on these professional metrics.

GPT-5 vs Claude 4.1 – Coding Ability

On SWE-bench, GPT-5 sets a new record with 74.9 % resolution, slightly edging Claude 4.1 at 74.5 %. Real-world tests show GPT-5 can one-shot generate full 3-D games and financial dashboards, scoring 88 % on the Aider Polyglot cross-language editing benchmark.

Claude 4.1 trails slightly overall yet excels in niche areas. Its 3-D billiards game and Tetris clone are not only functional but visually “Apple-designer-level.” On the Terminal-bench suite, Claude 4.1 leads with 43.2 %, making it better for sys-admin tasks.

GPT-5 vs Claude 4.1 – Toolchain & Ecosystem

OpenAI’s ecosystem is mature. The new Assistants API supports multi-turn dialogues, file uploads, code interpreters, and more. A rich plugin marketplace integrates GitHub Copilot, Zapier, Notion AI, and others. Developers can tune answer depth with the reasoning_effort parameter.
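As a rough illustration of the reasoning_effort knob, the sketch below builds a Chat Completions style request body without sending it. The `build_chat_request` helper is hypothetical, and the set of accepted effort levels is an assumption to verify against OpenAI's API reference.

```python
from typing import Any

# Assumed effort levels; confirm the accepted values in OpenAI's API reference.
ALLOWED_EFFORTS = ("minimal", "low", "medium", "high")


def build_chat_request(
    prompt: str, effort: str = "medium", model: str = "gpt-5"
) -> dict[str, Any]:
    """Build keyword arguments for a chat request (hypothetical helper)."""
    if effort not in ALLOWED_EFFORTS:
        raise ValueError(f"effort must be one of {ALLOWED_EFFORTS}, got {effort!r}")
    return {
        "model": model,
        "reasoning_effort": effort,  # lower effort favors speed, higher favors depth
        "messages": [{"role": "user", "content": prompt}],
    }


if __name__ == "__main__":
    quick = build_chat_request("Summarize this changelog.", effort="low")
    deep = build_chat_request("Find the race condition in this code.", effort="high")
    print(quick["reasoning_effort"], deep["reasoning_effort"])
```

With the official `openai` Python package installed and an API key configured, a dictionary like this would typically be passed as `client.chat.completions.create(**request)`.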

Claude 4.1’s toolchain is more focused. The Workbench sandbox gives an ideal debugging playground. Hybrid reasoning modes (Instant vs Extended) let developers trade speed for depth on demand.
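The speed-versus-depth trade-off can be sketched the same way for Claude. This example assumes the “Extended” mode corresponds to the `thinking` parameter of Anthropic's Messages API; the `build_claude_request` helper, the model alias, and the default budgets are illustrative assumptions rather than recommended settings.

```python
from typing import Any


def build_claude_request(
    prompt: str,
    extended: bool = False,
    thinking_budget: int = 4096,
    max_tokens: int = 8192,
    model: str = "claude-opus-4-1",  # assumed alias; check Anthropic's model list
) -> dict[str, Any]:
    """Build keyword arguments for a Messages API call (hypothetical helper)."""
    request: dict[str, Any] = {
        "model": model,
        "max_tokens": max_tokens,
        "messages": [{"role": "user", "content": prompt}],
    }
    if extended:
        # The thinking budget is spent out of max_tokens, so it must be smaller.
        if thinking_budget >= max_tokens:
            raise ValueError("thinking_budget must be smaller than max_tokens")
        request["thinking"] = {"type": "enabled", "budget_tokens": thinking_budget}
    return request


if __name__ == "__main__":
    instant = build_claude_request("Rename this variable.")
    deep = build_claude_request("Plan a database migration.", extended=True)
    print("thinking" in instant, "thinking" in deep)
```

With the official `anthropic` Python package, such a dictionary would typically be passed as `client.messages.create(**request)`; leaving out `thinking` gives the faster, shallower response.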

GPT-5 vs Claude 4.1 – Compliance

Claude 4.1 wins on compliance. Via partners, it offers SOC2/HIPAA endpoints—for example, Hathr.AI’s deployment on AWS GovCloud is fully HIPAA-compliant, FedRAMP High certified, and BAA-signed, attractive to medical or financial sectors.

GPT-5 also offers enterprise-grade compliance, but HIPAA/SOC2 coverage requires a separate agreement. While “Safe Completions” cut hallucination rates by 45 %, adversarial tests can still bypass them, posing challenges for high-security use cases.

Conclusion: No Best, Only Best-Fit

Overall, GPT-5 and Claude 4.1 each excel in different arenas.

GPT-5 is the “all-round champion,” leading most benchmarks and delivering a leap in coding prowess. Its “more for less” pricing and foolproof UX make it the default for everyday users and budget-minded developers. Rapid iteration also underscores OpenAI’s AGI ambitions.

Claude 4.1 is the “specialist ace,” shining in code quality, terminal tasks, and strict safety. Mature compliance makes it the safer bet for enterprise. Teams that prize data security and organizational alignment will likely prefer Claude 4.1.

Ultimately, the choice hinges on your exact needs. As AI experts put it: “In the era of large models, the key is not chasing the latest model but finding the tool that best fits your purpose.” Whichever you pick, we are witnessing AI reshape the world at breathtaking speed.