Vercel has announced the general availability of its AI Gateway, a unified platform that lets developers integrate and manage hundreds of AI models through a single API endpoint.

This release addresses longstanding challenges such as fragmented API management and multi-vendor complexity, offering a streamlined alternative for teams building AI-powered applications.

The introduction of the AI Gateway signals a shift toward consolidated tooling in the rapidly evolving AI infrastructure space. It promises to reduce overhead and improve reliability for developers working across multiple model providers.

Below we take a closer look at what Vercel AI Gateway offers, who it’s for, and how it stands out in a competitive marketplace.

What is Vercel AI Gateway?

The Vercel AI Gateway is an abstraction layer that standardizes access to AI models from leading providers, including OpenAI, Anthropic, Google, and xAI, through one consistent API. Acting as a centralized proxy, it removes the need to manage separate API keys, vendor-specific SDKs, or custom integration logic for each service.

Built with performance and resilience in mind, the gateway is engineered to maintain low latency and high availability even during provider outages. It allows developers to focus on building product features rather than managing infrastructure, making it easier to scale AI applications in production.

Key Features of Vercel AI Gateway

1. Unified Access to Hundreds of AI Models

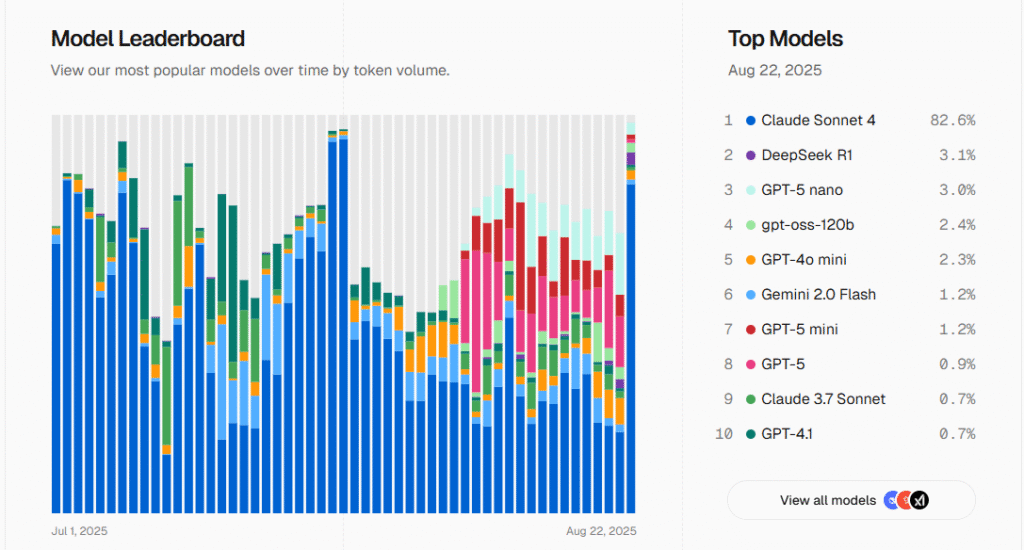

A key highlight of the Vercel AI Gateway is its support for a vast array of AI models, including popular and cutting-edge options like GPT-5 and Claude Sonnet 4. Developers no longer need to configure separate API keys or manage different vendor accounts for each model.

Instead, a single interface provides access to all of them, lowering the barrier to building multi-model applications. Developers can quickly test models against each other and switch between them as new ones are released.

2. High Performance and Reliability

Vercel cites latency as low as 20 milliseconds for the AI Gateway. That figure is best read as the routing overhead the gateway adds, not the total model response time, which depends on the model and provider. Low overhead matters for real-time AI applications, where every extra network hop is felt by the user. The platform also includes an automatic failover mechanism that switches to an alternative provider if one service experiences an outage or rate limiting.

This built-in redundancy improves availability for applications that would otherwise depend on a single provider. The gateway is also designed for high throughput so that it can handle large-scale AI workloads in production.
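To make the failover idea concrete, here is a minimal conceptual sketch of the pattern: try providers in order and move on when one fails. It is not Vercel's implementation, and every name in it (`ProviderCall`, `callWithFailover`, and the stub providers) is hypothetical.

```typescript
// Conceptual sketch of provider failover; NOT Vercel's implementation.
// All names here (ProviderCall, callWithFailover) are hypothetical.

type ProviderCall = {
  name: string;
  call: (prompt: string) => Promise<string>;
};

type FailoverResult = {
  provider: string; // provider that finally answered
  text: string; // the answer itself
  failures: string[]; // providers that failed before it, with reasons
};

async function callWithFailover(
  providers: ProviderCall[],
  prompt: string,
): Promise<FailoverResult> {
  const failures: string[] = [];
  for (const provider of providers) {
    try {
      const text = await provider.call(prompt);
      return { provider: provider.name, text, failures };
    } catch (error) {
      // Record the failure (outage, rate limit, ...) and try the next provider.
      const reason = error instanceof Error ? error.message : String(error);
      failures.push(`${provider.name}: ${reason}`);
    }
  }
  throw new Error(`All providers failed: ${failures.join("; ")}`);
}

// Stub providers standing in for real network calls.
const primary: ProviderCall = {
  name: "primary",
  call: async () => {
    throw new Error("429 rate limited");
  },
};

const fallback: ProviderCall = {
  name: "fallback",
  call: async (prompt) => `echo: ${prompt}`,
};
```

Calling `callWithFailover([primary, fallback], "hi")` resolves with the fallback provider's answer and a one-entry `failures` list. A managed gateway performs this kind of routing on the server side, so application code does not have to carry the loop itself.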

3. Seamless Integration with the AI SDK and OpenAI Compatibility

To maximize developer efficiency, the Vercel AI Gateway is deeply integrated with the Vercel AI SDK v5, which provides a clean and simple TypeScript toolkit. The SDK supports major frameworks like React, Next.js, Vue, Svelte, and Node.js. With this integration, developers can switch models or providers by simply updating a single line of code, without having to rewrite core application logic.
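The single-line switch can be illustrated with a small, SDK-independent sketch. It assumes model identifiers of the form `provider/model`; the exact ID strings, the `TextGenerator` type, and the `summarize` helper are hypothetical and not taken from the AI SDK.

```typescript
// Hypothetical sketch: keep the model choice in one place so application
// logic does not change when the model does. The "provider/model" ID format
// and the ID strings below are assumptions for illustration.

type ModelRef = { provider: string; model: string };

// Function that actually talks to a model. It is injected so the app code
// below stays independent of any specific SDK or vendor.
type TextGenerator = (options: { model: string; prompt: string }) => Promise<string>;

// The single line to edit when switching models or providers.
const MODEL_ID = "openai/gpt-5";

function parseModelId(id: string): ModelRef {
  const slash = id.indexOf("/");
  if (slash <= 0 || slash === id.length - 1) {
    throw new Error(`Expected "provider/model", got "${id}"`);
  }
  return { provider: id.slice(0, slash), model: id.slice(slash + 1) };
}

async function summarize(generate: TextGenerator, article: string): Promise<string> {
  parseModelId(MODEL_ID); // fail fast on a malformed ID
  return generate({
    model: MODEL_ID,
    prompt: `Summarize in one sentence:\n${article}`,
  });
}
```

Because `summarize` only sees a model string and a generator function, changing `MODEL_ID` to another provider's model leaves the rest of the code untouched.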

Additionally, the platform is fully compatible with the OpenAI API format. This allows existing applications built on OpenAI’s infrastructure to easily migrate to the Vercel AI Gateway, significantly reducing technical overhead and transition costs.
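In practice, OpenAI compatibility means a client keeps the same request shape and changes only the base URL and key. The sketch below builds such a request without sending it. `GATEWAY_BASE_URL` is a placeholder rather than the real endpoint, and the helper and type names are hypothetical; take the actual base URL and authentication details from Vercel's documentation.

```typescript
// Sketch of an OpenAI-style chat completion request aimed at a gateway.
// GATEWAY_BASE_URL is a placeholder, not a real endpoint.

type ChatMessage = { role: "system" | "user" | "assistant"; content: string };

type HttpRequest = {
  url: string;
  method: "POST";
  headers: Record<string, string>;
  body: string;
};

const GATEWAY_BASE_URL = "https://gateway.example.com/v1"; // hypothetical

function buildChatRequest(
  baseUrl: string,
  apiKey: string,
  model: string,
  messages: ChatMessage[],
): HttpRequest {
  return {
    // Same path and payload shape as OpenAI's chat completions API;
    // trailing slashes on the base URL are stripped before joining.
    url: `${baseUrl.replace(/\/+$/, "")}/chat/completions`,
    method: "POST",
    headers: {
      "Content-Type": "application/json",
      Authorization: `Bearer ${apiKey}`,
    },
    body: JSON.stringify({ model, messages }),
  };
}

const request = buildChatRequest(GATEWAY_BASE_URL, "YOUR_API_KEY", "openai/gpt-5", [
  { role: "user", content: "Hello" },
]);
```

The resulting object can be passed to `fetch(request.url, { method: request.method, headers: request.headers, body: request.body })`. An existing OpenAI client would be migrated the same way, by overriding its base URL and key.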

4. Zero Markup Pricing and BYOK Support

When it comes to cost, Vercel AI Gateway is committed to a zero-markup pricing model. This means developers pay the exact same market price as the original provider, with no extra fees from Vercel.

The platform also supports a “Bring Your Own Key” (BYOK) feature, giving developers the flexibility to use their own API keys, which enhances transparency and control over their spending. This straightforward pricing strategy not only reduces the financial burden on developers but also strengthens the platform’s competitive edge.

Who Should Use Vercel AI Gateway?

The Vercel AI Gateway is ideal for a wide range of developers and organizations:

- Startups and Indie Developers: It simplifies the process of integrating AI, allowing small teams to experiment with and deploy powerful AI features quickly without the complexity of managing multiple accounts.

- Enterprises: Large organizations can use the gateway to standardize their AI infrastructure, ensuring consistency and reliability across different teams and projects. The built-in failover and performance features are critical for enterprise-level applications.

- AI Researchers and Enthusiasts: The platform provides a convenient sandbox for comparing the performance and output of different models side by side, which can speed up research and development.

- Teams building on Vercel: For developers already using Vercel for their front-end and back-end needs, the AI Gateway offers a native, high-performance solution that seamlessly integrates with their existing stack.

How Does Vercel AI Gateway Compare to Competitors?

The AI Gateway enters a crowded field of tools that aim to simplify LLM integration, though several of them address a different layer of the stack. Commonly cited alternatives include:

- LangChain: A popular open-source framework for composing LLM-based applications. While highly flexible, it often requires more setup and orchestration compared to Vercel’s managed gateway service.

- Hugging Face Inference Endpoints: Provides dedicated hosting for transformer-based models but focuses less on unifying access across multiple commercial API providers.

- Gradio: Primarily used for demoing and prototyping models, rather than production-grade API aggregation.

- Direct Integrations: Some teams still integrate individually with each vendor’s API, but this approach lacks unified logging, automatic retries, and provider failover.

Vercel distinguishes itself through its integration with modern web frameworks, performance optimization, and a developer-friendly experience, especially for teams already in the Vercel ecosystem.

Conclusion on Vercel AI Gateway

The launch of Vercel AI Gateway marks a notable advancement in how developers consume and manage external AI models. By offering a unified, performant, and cost-transparent entry point to hundreds of models, Vercel is reducing barriers to building and scaling AI applications.

As the AI industry continues to expand and diversify, tools that simplify complexity without sacrificing performance will become increasingly vital. Vercel’s offering is well-positioned to become a key infrastructure component for teams focused on shipping AI-native experiences quickly and reliably.