August 27, 2025 — ByteDance’s digital human team released its multimodal digital human solution, OmniHuman-1.5. As an upgraded version of OmniHuman-1, the framework converts a single image and an audio clip into highly realistic dynamic video. The generated characters not only achieve precise lip-sync but also display emotional expression and semantically coherent gestures.

OmniHuman-1.5’s core breakthrough lies in giving digital humans the power to “think”: a multimodal large language model analyzes the semantics and emotional undertones of the audio, and that analysis guides animations that are both physically plausible and contextually consistent. The project page for the much-anticipated upgrade is now live on GitHub Pages at https://omnihuman-lab.github.io/v1_5/.

Core Features of OmniHuman-1.5: Giving Digital Humans a “Soul”

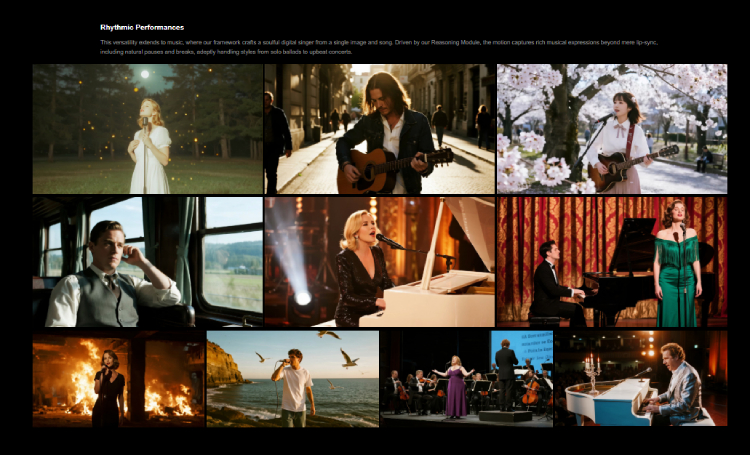

OmniHuman-1.5’s strength lies in several breakthrough features that endow digital humans with unprecedented “vitality” and “expressiveness,” turning them from stiff avatars into “digital actors” that can blend into complex scenarios.

- Dual-audio drive, effortlessly handling multi-person dialogue: Whether you provide mono or complex dual-channel audio, OmniHuman-1.5 can accurately synchronize lip movements and gestures with the soundtrack. This provides a reliable solution for two-person conversations, chorus scenes in music videos, and other complex setups, greatly expanding application boundaries.

- Long-form video generation, identity remains stable throughout: Traditional digital human tech often suffers from identity drift and frame jitter in long videos. OmniHuman-1.5 can produce coherent videos over 60 seconds long. Whether it’s a lengthy speech or a full music video, the digital human’s appearance stays consistent, with no “face collapse.”

- Emotion perception, synchronizing expressions and movements with mood: Advanced emotion-recognition algorithms automatically parse emotional cues in the audio and translate them into matching facial and body movements. Whether it’s an angry shout, a joyful laugh, or a sorrowful whisper, the digital human responds with precise emotional feedback, greatly enhancing video impact.

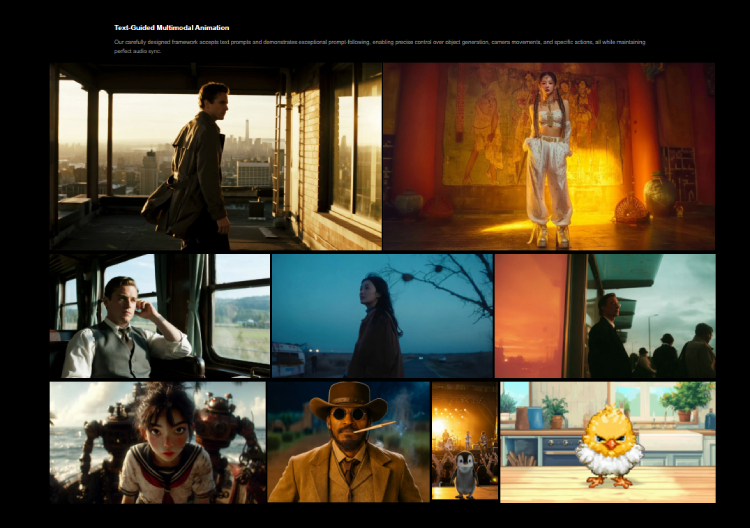

- Text prompts, customizing your “digital actor”: This feature grants creators unprecedented freedom. Simple text prompts such as “handheld camera, fireworks backdrop, lonely mood” let you personalize the scene, camera work, and actions, making video creation as easy as writing a script.

- Multi-style support, everything can be “digitized”: OmniHuman-1.5 handles not only photorealistic styles but also anime, 3D cartoons, and even animals. Its potential in game NPCs, virtual idols, and AR pets is limitless, offering a powerful tool for building metaverse worlds.

- Intelligent camera work, delivering cinematic language: From smooth push-in and pull-out shots and expressive pans to dramatic orbit shots, OmniHuman-1.5 automates it all. Even ordinary users can produce movie-quality footage without professional cinematography knowledge.
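To make the dual-audio feature from the list above more concrete: driving two characters from one dual-channel clip requires knowing who is speaking at each moment. The article does not say how OmniHuman-1.5 does this, so the following is only a minimal sketch under stated assumptions. It assumes one speaker per channel and labels the louder channel in each window by RMS energy. The function names, labels, and threshold are hypothetical and not part of any OmniHuman API.

```python
import math
from typing import List, Tuple


def split_channels(interleaved: List[float]) -> Tuple[List[float], List[float]]:
    """Split interleaved stereo samples [L0, R0, L1, R1, ...] into two mono tracks."""
    return interleaved[0::2], interleaved[1::2]


def rms(samples: List[float]) -> float:
    """Root-mean-square energy of a window (0.0 for an empty window)."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def active_speaker_per_window(
    left: List[float],
    right: List[float],
    window: int,
    silence_threshold: float = 0.01,  # assumed value, tune per recording
) -> List[str]:
    """Label each window with the louder channel, or 'silence' if both are quiet."""
    labels = []
    for start in range(0, min(len(left), len(right)), window):
        left_energy = rms(left[start:start + window])
        right_energy = rms(right[start:start + window])
        if max(left_energy, right_energy) < silence_threshold:
            labels.append("silence")
        elif left_energy >= right_energy:
            labels.append("speaker_a")
        else:
            labels.append("speaker_b")
    return labels
```

A real pipeline would read the samples from an audio file and would likely use a proper voice-activity detector. The point here is only that each character's lip-sync and gestures can be keyed to its own channel.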

Technical Highlights: Unpacking the “Dual-System” Architecture

OmniHuman-1.5’s breakthroughs stem from its “dual-system” architecture, which pairs a diffusion model with a multimodal large language model. The two systems play distinct roles and work together.

- System 1 (fast thinking): Uses a diffusion model to handle rapid, intuitive motion generation. It quickly captures transient audio cues and converts them into fluid, natural movements—akin to human “reflexes.”

- System 2 (slow thinking): Built on a multimodal large language model, it performs deep semantic and emotional analysis plus long-term planning. It interprets the underlying meaning of the audio and maps out an overall motion plan that matches the emotional arc and scene setting, keeping the animation coherent and logical.
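The division of labor between the two systems can be sketched as a two-stage pipeline: a slow planner produces a coarse plan per audio segment, and a fast generator fills in per-frame motion under that plan. This is a toy illustration, not ByteDance’s implementation. Both stages are stand-ins (a keyword rule instead of a multimodal model, an energy-to-mouth mapping instead of a diffusion model), and every name in it is hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class SegmentPlan:
    start_frame: int  # inclusive
    end_frame: int    # exclusive
    emotion: str
    gesture: str


def slow_planner(segments: List[Dict]) -> List[SegmentPlan]:
    """Stand-in for System 2. Hypothetical: a keyword rule replaces the
    multimodal model that would do the semantic and emotional analysis."""
    plans = []
    for seg in segments:
        excited = seg["text"].strip().endswith("!")
        plans.append(SegmentPlan(
            start_frame=seg["start_frame"],
            end_frame=seg["end_frame"],
            emotion="excited" if excited else "neutral",
            gesture="raise_hands" if excited else "idle",
        ))
    return plans


def fast_generator(audio_energy: List[float], plans: List[SegmentPlan]) -> List[Dict]:
    """Stand-in for System 1. Hypothetical: mouth opening just tracks
    per-frame audio energy instead of sampling from a diffusion model."""
    frames = []
    for plan in plans:
        for i in range(plan.start_frame, min(plan.end_frame, len(audio_energy))):
            frames.append({
                "frame": i,
                "mouth_open": max(0.0, min(1.0, audio_energy[i])),  # clamp to [0, 1]
                "emotion": plan.emotion,
                "gesture": plan.gesture,
            })
    return frames


segments = [
    {"text": "Hello there.", "start_frame": 0, "end_frame": 2},
    {"text": "We won!", "start_frame": 2, "end_frame": 4},
]
audio_energy = [0.2, 0.0, 0.9, 1.4]
frames = fast_generator(audio_energy, slow_planner(segments))
for frame in frames:
    print(frame)
```

The design point is that the planner runs once per segment while the generator runs once per frame, so the expensive reasoning step sets the context and the cheap step reacts to the audio moment by moment.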

Additionally, OmniHuman-1.5 introduces a “hybrid motion-conditioning” training strategy, which makes the digital human’s movements more natural and keeps generation stable across styles. Its “inter-frame linking” technology is designed to prevent frame skipping and face collapse in long videos, improving stability and consistency.
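The article does not describe how inter-frame linking works internally. A common general technique for keeping long generated videos consistent is to generate in chunks and condition each chunk on the last few frames of the previous one. The sketch below shows only that scheduling arithmetic. It is an assumption offered for illustration, not a description of OmniHuman-1.5, and `Chunk` and `plan_chunks` are hypothetical names.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Chunk:
    context_start: int  # first already-generated frame used as conditioning
    start: int          # first new frame in this chunk (inclusive)
    end: int            # one past the last new frame (exclusive)


def plan_chunks(total_frames: int, chunk_len: int, overlap: int) -> List[Chunk]:
    """Split a long video into chunks, each conditioned on up to `overlap`
    trailing frames of the previously generated chunk."""
    if chunk_len <= 0 or overlap < 0:
        raise ValueError("chunk_len must be positive and overlap non-negative")
    chunks = []
    start = 0
    while start < total_frames:
        end = min(start + chunk_len, total_frames)
        context_start = max(0, start - overlap)  # the first chunk has no context
        chunks.append(Chunk(context_start, start, end))
        start = end
    return chunks


for chunk in plan_chunks(total_frames=10, chunk_len=4, overlap=1):
    print(chunk)
```

In this scheme each chunk sees frames `context_start` to `start` as fixed context, so appearance and pose carry over at every chunk boundary.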

Application Scenarios: Reshaping Digital Futures Across Industries

OmniHuman-1.5 opens new vistas for multiple sectors:

- Film & animation: Directors can use OmniHuman-1.5 to quickly generate virtual actors for reshoots, shortening production cycles and reportedly cutting costs by over 50%, which leaves more room for creative work.

- Virtual hosts: Whether livestream sales or 24/7 customer service, OmniHuman-1.5-based virtual hosts provide stable, efficient service, boosting user engagement and satisfaction.

- Education & training: Teachers can generate digital lecturers with rich body language, making lessons livelier and raising course-completion rates.

- Advertising & marketing: Brands can craft exclusive IP figures and use OmniHuman-1.5 to rapidly generate marketing content, deepening consumer recall.

- Gaming/VR/AR: OmniHuman-1.5 can drive NPCs and avatars in real time, delivering unprecedented immersive experiences.

Conclusion: Challenges & Outlook

Despite impressive achievements, OmniHuman-1.5 still faces challenges such as occasional artifacts in complex object interactions and high compute requirements. ByteDance’s roadmap includes finer motion control, model compression, and mobile deployment.

As OmniHuman-1.5 continues to evolve, digital humans will move beyond sci-fi into everyday life, becoming a new extension of human creativity. A future AI-driven digital world full of infinite possibility is accelerating toward us.