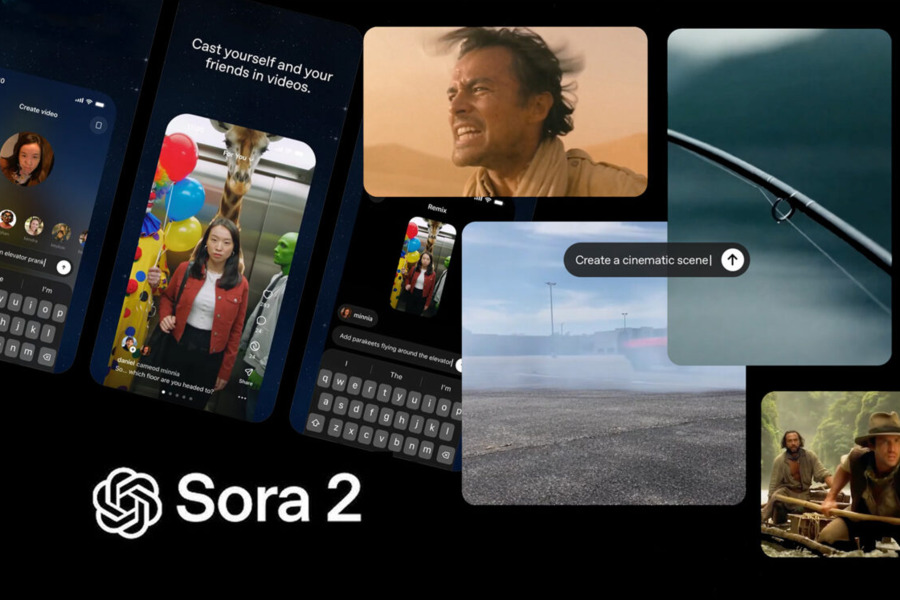

OpenAI has officially launched the Sora 2 API, a significant step toward making advanced AI video generation accessible to developers worldwide. The release, announced at OpenAI’s DevDay event, expands the company’s developer ecosystem and gives programmers direct access to the same model behind Sora 2’s striking video outputs.

The Sora 2 model sets a new standard for AI-generated content, producing richly detailed, dynamic video clips with synchronized audio from natural language prompts or reference images. The launch signals OpenAI’s aim to turn video creation from a specialized skill into a programmable resource available through simple API calls.

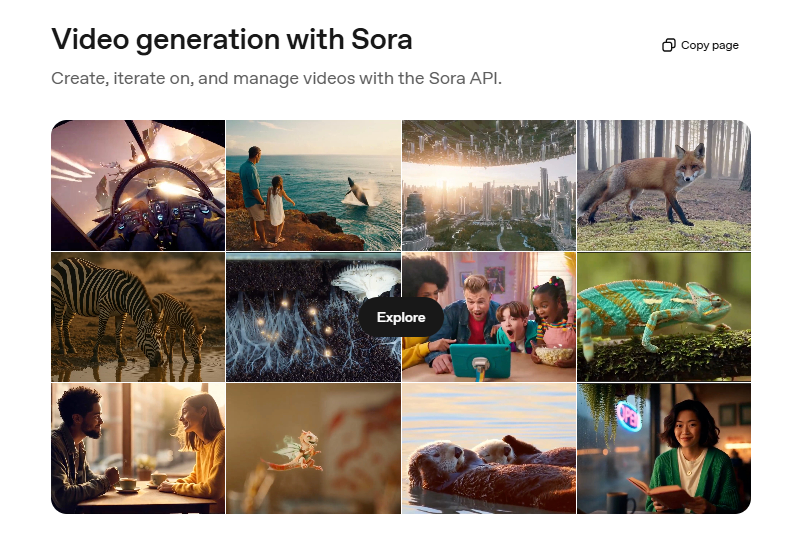

Introducing the Sora 2 API

OpenAI has structured the Sora 2 API around five core endpoints that together cover video generation and management. This compact structure gives developers a flexible way to integrate state-of-the-art video generation directly into their applications and services.

The API handles the complete video generation workflow through these dedicated endpoints:

- Create Video: This primary endpoint initiates new rendering tasks, allowing developers to start with a text prompt and optionally add a reference image or “remix” ID to guide the generation process

- Get Video Status: This endpoint reports the current progress of a rendering task, so applications can monitor jobs while they run. Because high-quality video generation is computationally intensive and takes time, checking this endpoint is how a client learns that a job has finished

- Download Video: Once generation is complete, this endpoint retrieves the finished video as an MP4 file

- List Videos: For efficient organization and management, this endpoint provides a paginated view of a user’s historical video records, invaluable for showcasing past work and simplifying asset management in larger projects

- Delete Video: This maintenance endpoint allows users to remove specific videos from OpenAI’s storage, helping manage storage consumption and ensure data privacy
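To make the create, check status, and download flow concrete, here is a minimal Python sketch. The HTTP methods and `/v1/videos` paths reflect our reading of OpenAI's API reference and should be treated as assumptions to verify; `build_request`, `wait_for_video`, and the status strings `queued`, `in_progress`, `completed`, and `failed` are illustrative names, not part of any official SDK. The status lookup is passed in as a plain function so the polling logic can be exercised without network access.

```python
import time
from typing import Callable, Optional

# Hypothetical mapping of the five operations to REST calls.
# Paths are assumptions based on OpenAI's public /v1/videos reference.
ENDPOINTS = {
    "create": ("POST", "/v1/videos"),
    "status": ("GET", "/v1/videos/{video_id}"),
    "download": ("GET", "/v1/videos/{video_id}/content"),
    "list": ("GET", "/v1/videos"),
    "delete": ("DELETE", "/v1/videos/{video_id}"),
}


def build_request(operation: str, video_id: Optional[str] = None) -> tuple[str, str]:
    """Return (HTTP method, path) for one of the five operations."""
    method, path = ENDPOINTS[operation]
    if "{video_id}" in path:
        if video_id is None:
            raise ValueError(f"{operation!r} requires a video_id")
        path = path.format(video_id=video_id)
    return method, path


# Assumed terminal states; anything else (e.g. "queued", "in_progress")
# is treated as still pending.
TERMINAL = {"completed", "failed"}


def wait_for_video(
    fetch_status: Callable[[], str],
    interval: float = 5.0,
    timeout: float = 600.0,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Poll fetch_status() until it reports a terminal state or time runs out."""
    waited = 0.0
    while True:
        status = fetch_status()
        if status in TERMINAL:
            return status
        if waited >= timeout:
            raise TimeoutError(f"video still {status!r} after {waited:.0f}s")
        sleep(interval)
        waited += interval
```

In a real client, `fetch_status` would wrap an authenticated call to the Get Video Status endpoint; once it reports `completed`, the MP4 can be fetched from the download path. Injecting `sleep` keeps the loop testable and makes it easy to swap in exponential backoff.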

The API leverages a sophisticated multimodal model that excels in 3D spatial understanding, motion modeling, and scene continuity. One of its most significant advancements is native audio-video synchronization, which automatically generates dialogue synchronized with character lip movements, scene-appropriate sound effects, and fitting background music.

Sora 2 API’s Two Key Variants

To accommodate diverse project requirements, OpenAI offers the Sora 2 API in two distinct variants, each designed for specific use cases and quality expectations.

1. Sora 2 (Standard)

The standard variant prioritizes speed and flexibility, making it ideal for rapid prototyping, creative exploration, and high-volume content creation. With its faster generation times and quick feedback loops, it’s particularly suited for:

- Social media content production

- Rapid iteration and concept testing

- Applications requiring high-volume video generation

The standard model supports resolutions up to 1280×720 (landscape) or 720×1280 (portrait), balancing quality with accessibility for a wide range of applications.

2. Sora 2 Pro

The professional variant is engineered for higher quality and fidelity in production environments where visual precision is paramount. It delivers enhanced visual detail, improved physical accuracy, and more consistent output, making it the preferred choice for:

- Cinematic footage and film production

- Premium marketing and advertising content

- Brand promotion and high-end commercial videos

Sora 2 Pro supports higher resolutions up to 1792×1024 (landscape) or 1024×1792 (portrait), catering to professional production standards that require sharper visuals and more refined details.

This dual-variant approach allows organizations to strategically select the model that best aligns with their budget, timeline, and quality requirements, from fast-paced daily content creation to high-end final production assets.
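The resolution limits above can be encoded as a small lookup that tells an application which variant can render a requested frame size. This is a sketch under two assumptions: that the API model identifiers are `sora-2` and `sora-2-pro`, and that the sizes named in this article are the only valid ones (the text says "up to"). `models_for_size` and `choose_model` are hypothetical helpers.

```python
# Output sizes per variant, as listed in this article.
# Model identifiers "sora-2" and "sora-2-pro" are assumed API names.
SUPPORTED_SIZES = {
    "sora-2": {"1280x720", "720x1280"},
    "sora-2-pro": {"1280x720", "720x1280", "1792x1024", "1024x1792"},
}


def models_for_size(width: int, height: int) -> list[str]:
    """Return the variants that can render width x height, standard first."""
    size = f"{width}x{height}"
    return [model for model, sizes in SUPPORTED_SIZES.items() if size in sizes]


def choose_model(width: int, height: int, prefer_pro: bool = False) -> str:
    """Pick the standard model when it suffices, unless Pro quality is requested."""
    candidates = models_for_size(width, height)
    if not candidates:
        raise ValueError(f"no variant supports {width}x{height}")
    if prefer_pro and "sora-2-pro" in candidates:
        return "sora-2-pro"
    return candidates[0]
```

Defaulting to the standard model mirrors the strategy described above: use Sora 2 for fast, high-volume work and move to Pro only when the resolution or quality requirement demands it.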

Sora 2 Pricing Structure

OpenAI bills the Sora 2 API per second of generated video rather than by tokens or through a subscription. Because cost scales directly with clip length, model, and resolution, developers can predict and control their video generation spending based on actual usage.

The pricing tiers for the two models are structured as follows:

- Sora 2 (Standard): All 720p video generation is priced at $0.10 per second, regardless of orientation

- Sora 2 Pro: 720p video generation costs $0.30 per second, while rendering at its higher resolutions (1792×1024 or 1024×1792) is priced at $0.50 per second

This tiered pricing strategy enables creators to balance creative ambitions with financial constraints, scaling to the Pro tier only when maximum quality is essential.

To illustrate practical cost implications:

- A 10-second social media clip using Sora 2 would cost $1.00 (10 × $0.10)

- A 30-second professional advertisement using Sora 2 Pro at 720p would amount to $9.00 (30 × $0.30)

- A 60-second brand story using Sora 2 Pro’s maximum resolution would total $30.00 (60 × $0.50)
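The per-second rates listed above translate directly into a small cost function. This sketch assumes the model identifiers `sora-2` and `sora-2-pro` and keys rates by output size; `RATES` and `clip_cost` are illustrative names, not part of OpenAI's SDK.

```python
from decimal import Decimal

# USD per second of output, from the pricing list in this article.
# Model identifiers are assumed API names.
RATES = {
    "sora-2": {
        "1280x720": Decimal("0.10"),
        "720x1280": Decimal("0.10"),
    },
    "sora-2-pro": {
        "1280x720": Decimal("0.30"),
        "720x1280": Decimal("0.30"),
        "1792x1024": Decimal("0.50"),
        "1024x1792": Decimal("0.50"),
    },
}


def clip_cost(model: str, size: str, seconds: int) -> Decimal:
    """Cost of one clip: per-second rate for (model, size) times its length."""
    if seconds <= 0:
        raise ValueError("seconds must be positive")
    try:
        rate = RATES[model][size]
    except KeyError:
        raise ValueError(f"no listed rate for {model} at {size}") from None
    return rate * seconds
```

Using `Decimal` instead of `float` keeps cents exact; applied to the three examples above, the function returns exactly $1.00, $9.00, and $30.00.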

For development teams planning larger-scale implementation, costs scale linearly with volume. Generating 100 ten-second videos daily with the standard model comes to about $3,000 per month (100 × 10 s × $0.10 = $100 per day over a 30-day month), while the same volume using Sora 2 Pro at its maximum resolution would reach about $15,000 per month ($500 per day).
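The monthly projection above is a straight product of rate, clip length, daily volume, and days, so it is easy to run in both directions: from volume to spend, and from a fixed budget back to how many clips per day it covers. A minimal sketch, assuming a 30-day month; `monthly_cost` and `clips_per_day_within` are hypothetical helper names.

```python
from decimal import Decimal


def monthly_cost(rate_per_second: Decimal, seconds_per_clip: int,
                 clips_per_day: int, days_per_month: int = 30) -> Decimal:
    """Projected monthly spend: rate x clip length x daily volume x days."""
    daily = rate_per_second * seconds_per_clip * clips_per_day
    return daily * days_per_month


def clips_per_day_within(budget: Decimal, rate_per_second: Decimal,
                         seconds_per_clip: int, days_per_month: int = 30) -> int:
    """Largest daily clip count whose monthly cost stays within budget."""
    cost_per_daily_clip = rate_per_second * seconds_per_clip * days_per_month
    return int(budget // cost_per_daily_clip)
```

For example, a $5,000 monthly budget at Sora 2 Pro's $0.50 rate covers 33 ten-second clips per day, since each daily clip costs $150 over a 30-day month.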

Final Thoughts on Sora 2 API

The official launch of the Sora 2 API solidifies OpenAI’s leadership in the generative AI landscape while potentially reshaping the future of video production. The combination of technical sophistication, flexible model options, and transparent pricing creates unprecedented opportunities for developers and creators across industries.

The true impact of the Sora 2 API will ultimately be determined by the innovative applications that developers build upon it. As adoption grows, we can anticipate a flourishing of creative tools and services that make professional-quality video creation more accessible than ever before. In the evolving landscape of generative AI, OpenAI has not just released another model—it has provided a programmable canvas for the future of visual storytelling.