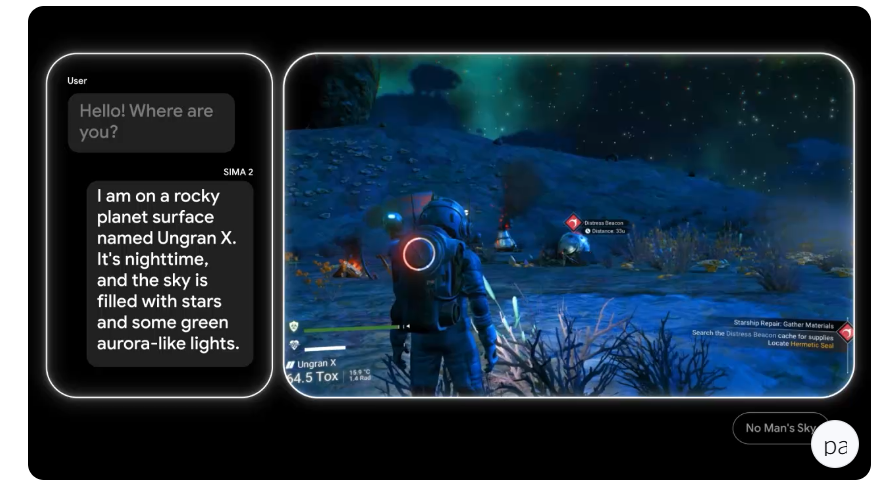

Google DeepMind has unveiled SIMA 2, its latest research project designed to test the capabilities of generalist AI agents within complex, instruction-driven 3D video game environments.

This new iteration of the Scalable, Instructable, Multiworld Agent (SIMA) is a significant upgrade. It integrates Google's Gemini model so the agent can better understand goals, interpret and explain its plans, and improve its own performance across diverse virtual worlds.

What is SIMA 2?

SIMA 2 builds on its predecessor, SIMA 1, which DeepMind introduced in 2024. The original agent learned to carry out more than 600 basic language instructions by processing rendered game images and emulating keyboard and mouse controls. Although it was a notable first step, SIMA 1 completed only about 31% of tasks, well below the roughly 71% success rate of human players in the same games.

SIMA 2 keeps the same interface but changes its architecture fundamentally: it now uses Gemini 2.5 Flash-Lite as its core reasoning engine. Rather than simply following instructions, the agent becomes a collaborative game partner that can reason about its environment and interact with the player in a more nuanced way.

Key features of SIMA 2

The core of SIMA 2’s advancement is its architecture, which builds the Gemini model directly into the agent. The system receives visual observations from the game screen and natural-language instructions from the user. Gemini processes this information to derive high-level goals and then turns them into a sequence of in-game actions.

This design gives SIMA 2 a new degree of transparency. The agent can explain its intentions, answer questions about its current objectives, and describe how it is reasoning about the changing game environment. Such interpretability is an important step toward trustworthy, reliable AI systems.
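To make the perceive, reason, act loop concrete, here is a minimal Python sketch of a single agent step. It is not DeepMind's implementation. `hypothetical_reasoner` is an invented stand-in for Gemini, the rendered frame is reduced to a list of object labels, and `Action`, `Plan`, and `agent_step` are illustrative names. The toy reasoner borrows the "ripe tomato" demonstration described later in this article and keeps a rationale alongside the actions, so the agent can say what it is doing and why.

```python
from dataclasses import dataclass


@dataclass
class Action:
    """One emulated input event, mirroring SIMA's keyboard-and-mouse interface."""
    device: str   # "keyboard" or "mouse"
    command: str  # e.g. "w" (move forward) or "turn_right"


@dataclass
class Plan:
    goal: str              # high-level goal derived from the instruction
    rationale: str         # explanation the agent can report to the player
    actions: list[Action]  # low-level inputs that pursue the goal


# Toy world knowledge standing in for what a language model already knows.
OBJECT_COLORS = {"ripe tomato": "red", "banana": "yellow", "clear sky": "blue"}


def hypothetical_reasoner(visible_objects: list[str], instruction: str) -> Plan:
    """Hypothetical stand-in for the Gemini reasoning core.

    A real agent would pass the rendered frame and the instruction to a
    multimodal model; here the frame is a list of object labels and the
    "reasoning" is a dictionary lookup.
    """
    for thing, color in OBJECT_COLORS.items():
        if thing in instruction:
            target = f"{color} house"
            rationale = f"A {thing} is {color}, so I should look for a {target}."
            if target in visible_objects:
                # Target is on screen: face it and walk forward.
                actions = [Action("mouse", f"look_at:{target}"), Action("keyboard", "w")]
            else:
                # Target not visible: turn to scan the surroundings.
                actions = [Action("mouse", "turn_right")]
            return Plan(f"go to the {target}", rationale, actions)
    return Plan("unknown", "I could not interpret the instruction.", [])


def agent_step(visible_objects: list[str], instruction: str) -> list[Action]:
    """One perceive -> reason -> act cycle; the rationale is surfaced to the player."""
    plan = hypothetical_reasoner(visible_objects, instruction)
    print(f"Goal: {plan.goal} | Why: {plan.rationale}")
    return plan.actions


if __name__ == "__main__":
    frame = ["blue house", "red house", "tree"]
    for action in agent_step(frame, "find the house that is the color of a ripe tomato"):
        print(action)
```

Running it prints the goal and a one-sentence rationale, followed by the two emulated inputs. Storing the rationale in the plan mirrors the article's point that SIMA 2 can explain its intentions; a real agent would generate both the plan and the explanation with the model rather than from a template.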

In DeepMind’s internal evaluations, SIMA 2 completes about 62% of tasks. That is roughly double SIMA 1’s 31% and only about 9 percentage points short of the 71% achieved by human players. The size of this jump suggests the new model-driven approach is effective.

SIMA 2 also accepts instructions through more channels than text alone: it can process and respond to voice commands, on-screen graphical cues, and even emojis. It can also reason about indirect requests. In one demonstration, a user asked SIMA 2 to “find the house that is the color of a ripe tomato”; the agent reasoned that “a ripe tomato is red” and went on to locate a red house in the game world.

A standout feature of SIMA 2 is its capacity for self-improvement. After initial training on human gameplay demonstrations, the agent can enter a new game and learn from its own experience alone. The integrated Gemini model drives this process by generating new tasks for the agent and scoring its attempts. The result is a feedback loop: later versions of the agent, trained on this self-generated experience, succeed at tasks where earlier versions failed, without any additional human-provided data.
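The shape of this self-improvement loop can be illustrated with a deliberately tiny Python simulation. Everything in it is an assumption for illustration, since this article does not describe SIMA 2's training code: `propose_tasks` and `score_attempt` are hypothetical stand-ins for Gemini's task-generation and scoring roles, the task names are invented, and "retraining" is reduced to adding successfully completed tasks to a skill set.

```python
import random
from dataclasses import dataclass, field

# Invented task names for illustration only.
TASK_CATALOG = ["chop tree", "build fire", "find water", "climb ladder", "open door"]


@dataclass
class ToyAgent:
    """Toy agent whose 'policy' is simply the set of tasks it has mastered."""
    skills: set[str] = field(default_factory=set)

    def attempt(self, task: str, rng: random.Random) -> bool:
        # Mastered tasks always succeed; unfamiliar ones succeed 30% of the time.
        return task in self.skills or rng.random() < 0.3


def propose_tasks(n: int, rng: random.Random) -> list[str]:
    """Hypothetical stand-in for Gemini acting as a task generator."""
    return [rng.choice(TASK_CATALOG) for _ in range(n)]


def score_attempt(task: str, succeeded: bool) -> float:
    """Hypothetical stand-in for Gemini acting as a reward model (1.0 = success).

    The task argument is unused in this toy but kept to show what a real scorer sees.
    """
    return 1.0 if succeeded else 0.0


def self_improve(generations: int, tasks_per_generation: int, seed: int = 0) -> list[float]:
    """Run the generate -> attempt -> score -> retrain loop; return per-generation success rates."""
    rng = random.Random(seed)
    agent = ToyAgent()  # stands in for the agent after initial human-demo training
    success_rates = []
    for _ in range(generations):
        tasks = propose_tasks(tasks_per_generation, rng)
        scored = [(task, score_attempt(task, agent.attempt(task, rng))) for task in tasks]
        success_rates.append(sum(s for _, s in scored) / len(scored))
        # Build the next version from self-generated experience only:
        # tasks the scorer judged successful become mastered skills.
        learned = {task for task, s in scored if s == 1.0}
        agent = ToyAgent(skills=agent.skills | learned)
    return success_rates


if __name__ == "__main__":
    for gen, rate in enumerate(self_improve(generations=6, tasks_per_generation=20)):
        print(f"generation {gen}: {rate:.0%} success")
```

Because each new agent version inherits every skill its predecessor earned, later generations complete tasks that earlier ones failed, and no human data enters the loop after the starting agent. The real system learns a neural policy from gameplay rather than memorizing task names, so this sketch captures only the structure of the feedback cycle.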

Final Words on SIMA 2

The research team at DeepMind is also exploring AI-generated environments by combining SIMA 2 with Genie 3, a world model that can generate interactive 3D environments from a single image or text prompt. In these entirely new, AI-generated worlds, SIMA 2 can identify objects and complete assigned tasks, evidence that its skills generalize beyond the games it was trained on.

The development of SIMA 2 is more than an achievement in game-playing AI. It marks an important step toward sophisticated, general-purpose agents. The skills practiced in these complex, physics-based virtual worlds, such as understanding nuanced language, planning multi-step actions, and learning through autonomous exploration, are foundational for future real-world robotics and broader AI applications.

Google DeepMind’s SIMA 2 project suggests that the future of AI agents lies not only in executing commands but in understanding, reasoning, and collaborating within open-ended environments.
