Google’s DeepMind research team has introduced Gemma 3 270M, a groundbreaking open-source AI model optimized for efficiency and on-device deployment.

With just 270 million parameters, this model is significantly smaller than today’s multi-billion-parameter large language models (LLMs), yet it delivers impressive performance tailored for smartphones, edge devices, and low-power hardware. Early tests on Google’s Pixel 9 Pro demonstrate its capability to run smoothly offline, making it a game-changer for developers and enterprises.

Keep reading, here is everything you need to know about Gemma 3 270M.

What is Gemma 3 270M?

Gemma 3 270M is engineered for success in complex, domain-specific tasks. Its compact size allows developers to fine-tune it in just a few minutes, adapting it to the specific needs of enterprises or individual creators.

According to Google DeepMind engineers on social media platform X, Gemma 3 270M is not limited to running in a web browser; it can also be used on low-power hardware like the Raspberry Pi and other lightweight devices.

The model’s architecture is a key to its efficiency. It combines 170 million embedding parameters with 100 million transformation block parameters, supporting a massive 256,000-token vocabulary.

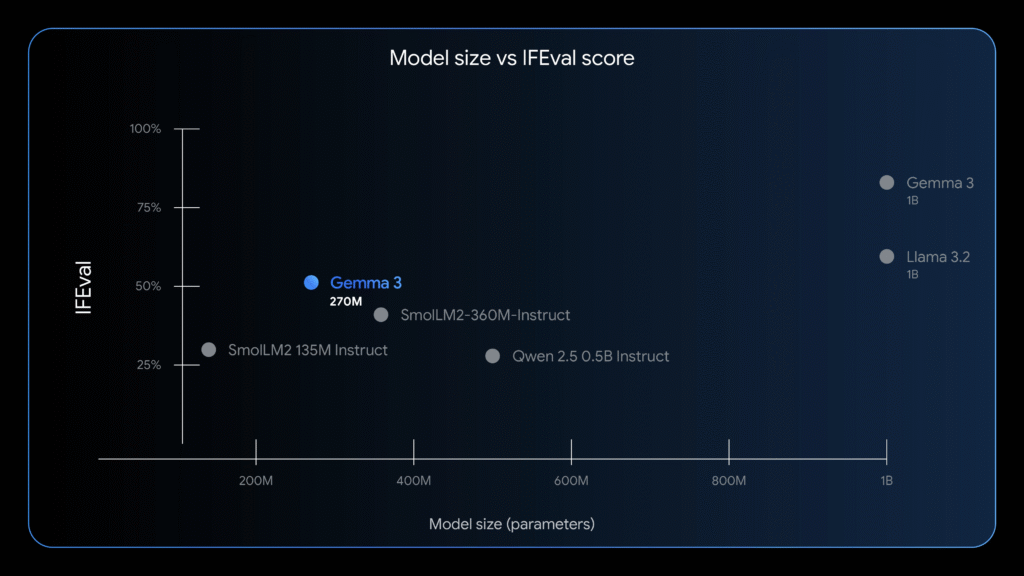

This allows it to handle rare and specialized terminology, making it a powerful tool for niche applications. Google also reports that Gemma 3 270M is highly effective at following instructions, achieving a score of 51.2% on the IFEval benchmark, which outperforms many other compact models in its class.

Key Features of Gemma 3 270M

Gemma 3 270M stands out with several features that make it a game-changer for on-device AI.

1. Massive 256k Vocabulary for Expert Tuning

A significant portion of Gemma 3 270M’s parameters—roughly 170 million—are dedicated to its embedding layer, which supports an enormous 256,000-token vocabulary.

This expansive vocabulary allows the model to process and understand rare and specialized tokens, making it exceptionally well-suited for domain adaptation, handling niche industry jargon, and custom language tasks. This feature is crucial for developers who need to train the model for highly specific use cases, such as legal document analysis or medical transcription.

2. Extreme Energy Efficiency for On-Device AI

Internal benchmarks highlight the model’s remarkable energy efficiency. The INT4-quantized version of Gemma 3 270M consumes less than 1% of the battery on a Pixel 9 Pro for 25 typical conversations.

This makes it the most power-efficient Gemma model released to date. This level of efficiency is a huge win for developers looking to deploy capable AI models on mobile, edge, and embedded devices without compromising battery life or user experience.

3. Production-Ready with INT4 Quantization-Aware Training (QAT)

Gemma 3 270M is pre-configured with Quantization-Aware Training (QAT) checkpoints, enabling it to operate at 4-bit precision with minimal loss in quality. This technical advancement is critical for production environments with limited memory and computational power.

By allowing for local, encrypted inference, the model offers increased privacy and security guarantees, as sensitive user data never needs to be sent to the cloud for processing.

4. Instruction-Following Out of the Box

Available as both a pre-trained and an instruction-tuned model, Gemma 3 270M is designed to understand and follow structured prompts immediately. This means developers can start building useful applications right away.

For further specialization, the model’s behavior can be fine-tuned with just a handful of targeted examples, offering a fast and flexible development workflow.

Fine-Tuning: Workflow & Best Practices

Gemma 3 270M’s small footprint and specialized architecture make it ideal for rapid, expert fine-tuning on focused datasets. The official workflow, as detailed in Google’s Hugging Face Transformers guide, is straightforward and efficient.

- Dataset Preparation: Small, carefully curated datasets are often all that’s needed. For example, teaching a specific conversational style or data format might require as few as 10-20 examples. This low-data requirement drastically reduces the time and resources needed for training.

- Trainer Configuration: By leveraging Hugging Face TRL’s SFTTrainer and configurable optimizers (like AdamW), developers can easily fine-tune and evaluate the model. Monitoring training and validation loss curves helps to prevent issues like overfitting or underfitting.

- Evaluation: Post-training, inference tests show a dramatic ability to adapt to new personas and formats. In this context, overfitting—often a development hurdle—can actually be beneficial. It allows the model to “forget” general knowledge and become hyper-specialized for a highly focused role, such as a roleplaying game NPC, a custom journaling assistant, or a sector-specific compliance checker.

- Deployment: Once fine-tuned, models can be pushed to the Hugging Face Hub and run on local devices, in the cloud, or via Google’s Vertex AI, with near-instant loading times and minimal computational overhead.

When to Choose Gemma 3 270M

Inheriting the advanced architecture and robust pre-training of the broader Gemma 3 collection, Gemma 3 270M provides a strong foundation for custom AI applications. Here’s a quick guide to determine if it’s the right choice for your project.

- High-Volume, Well-Defined Tasks: It is perfect for functions like sentiment analysis, entity extraction, query routing, converting unstructured text to structured data, creative writing, and compliance checks.

- Cost and Speed are Critical: Gemma 3 270M drastically reduces or eliminates inference costs in production and delivers faster responses to users. A fine-tuned model can run on lightweight, inexpensive infrastructure or directly on-device.

- Rapid Iteration and Deployment: The model’s small size allows for swift fine-tuning experiments, enabling you to find the perfect configuration for your use case in hours, not days.

- Privacy is a Priority: Because the model can run entirely on-device, you can build applications that handle sensitive information without ever sending data to the cloud, ensuring maximum user privacy.

- Specialized Task Models: You can create and deploy a fleet of custom models, each expertly trained for a different task, without a massive budget.

When to Choose Gemma 3 270M?

This model is ideal for:

- High-volume, structured tasks (sentiment analysis, entity extraction, compliance checks)

- Cost-sensitive deployments (eliminates cloud inference costs)

- Rapid prototyping (fine-tuning in hours, not days)

- Privacy-focused applications (on-device processing keeps data secure)

- Specialized AI agents (custom chatbots, domain-specific assistants)

Conclusion on Gemma 3 270M

Gemma 3 270M marks a shift toward lean, powerful AI models that don’t sacrifice capability for efficiency. Its open-source nature, low power demands, and rapid fine-tuning make it a versatile tool for developers, enterprises, and hobbyists alike.

As AI moves toward edge computing and on-device processing, models like Gemma 3 270M will play a crucial role in bringing high-performance AI to everyday devices—without relying on massive data centers.

For developers looking to build fast, private, and cost-effective AI solutions, Gemma 3 270M is a compelling choice.