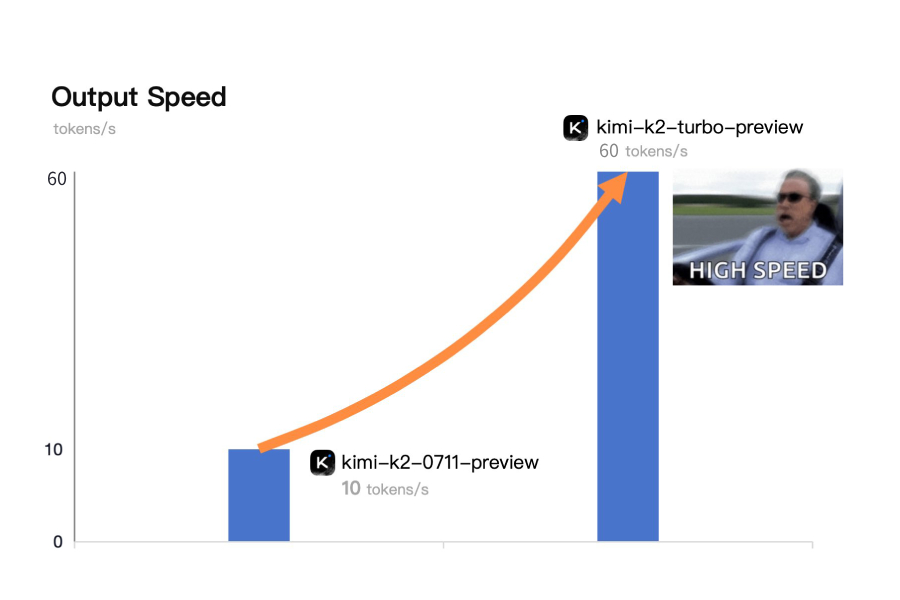

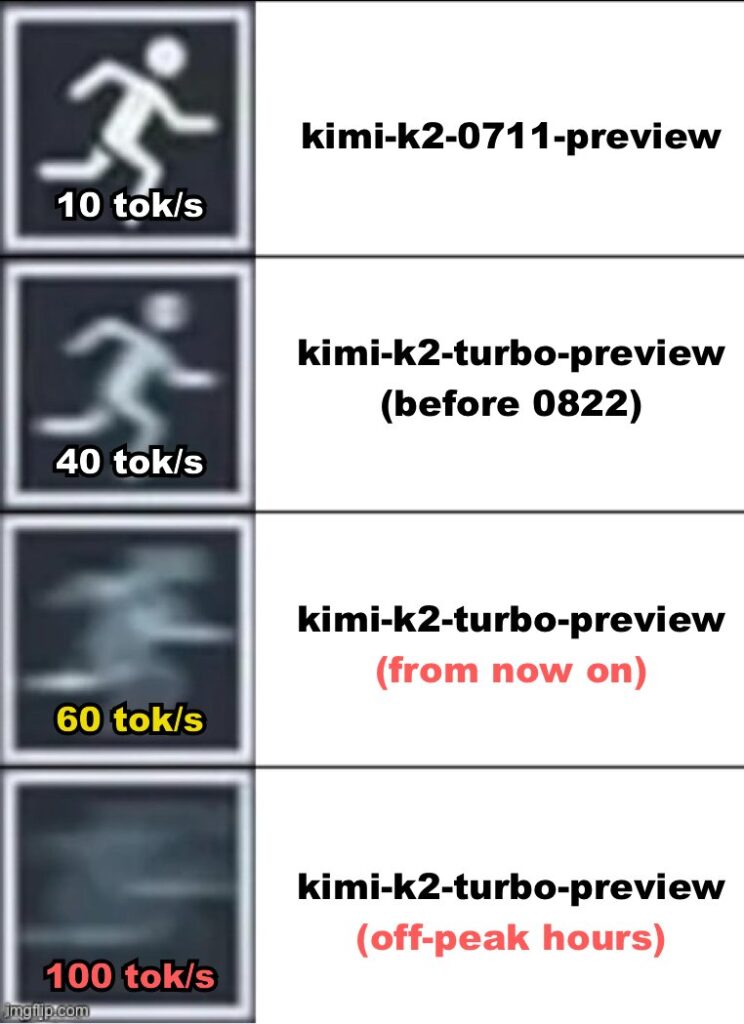

On August 22, Moonshot AI officially announced that its Kimi K2 Turbo Preview model has completed a new round of technical optimization, achieving a significant increase in output speed from the original 40 Tokens per second to a stable 60 Tokens per second, with peak performance reaching up to 100 Tokens per second. This upgrade greatly reduces user waiting time, especially in high-frequency interaction and long-text processing scenarios.

Kimi K2 Turbo Preview Model’s Technical Breakthrough

Before this, many users’ impression of the Kimi K2 Turbo Preview model was that it had “strong long-text processing capabilities, but there was still room for improvement in speed.” This time, Moonshot AI’s technical team clearly put in a lot of effort. They thoroughly solved the pain point of slow long-text generation by deeply optimizing parallel decoding and caching strategies from the ground up. This means that whether you’re processing a long document of tens of thousands of words or performing complex code generation, you can experience a smooth, almost “instant reply” feeling.

In practical tests, this speed improvement is particularly noticeable:

- Stable 60 T/s: In daily tasks like conversations, article continuation, or code completion, you’ll feel almost no waiting, as the content is generated almost synchronously.

- Peak 100 T/s: For short prompts or general tasks, such as quick Q&A or keyword extraction, the Kimi K2 Turbo Preview model can give results in an instant, achieving a true “instantaneous output.”

- 128k Context Window: It’s worth mentioning that even when processing ultra-long documents, this speed advantage is perfectly inherited. As Moonshot AI officially emphasized, the ability to process long documents at high speed is a core competitive advantage of the Kimi series models, and this speed upgrade undoubtedly makes this advantage even more prominent.

Kimi K2 Turbo Preview Model Half-Price for a Limited Time

Along with the speed increase, Moonshot AI also brings a sincere pricing discount. From now until September 1st at midnight, all billing items for the Kimi K2 Turbo Preview model will enjoy a half-price discount.

Here are the specific promotional prices (per million Tokens):

| Billing Item | Original Price | Promotional Price (per million Tokens) | Description |

| Input (cache hit) | 0.60 | 0.30 | Cheaper with a cache hit, super cost-effective for a second call |

| Input (cache miss) | 2.40 | 1.20 | The first call is also affordable |

| Output | 10.00 | 5.00 | High-speed output without a discount |

It should be noted that this promotion is only for the Kimi K2 Turbo Preview model. If you are developing an AI product that requires efficient code generation, long-text summarization, or real-time interaction, now is undoubtedly the best window to integrate and test. If you wait until September 1st when the price returns, you will miss this rare cost “dividend.”

How to Quickly Access Kimi K2 Turbo Preview?

The process to experience the extreme speed of Kimi K2 Turbo Preview is very simple. It only takes three steps:

- Log in to the Moonshot AI Open Platform and create an API Key.

- When calling the API, set the model name to

kimi-k2-turbo-preview. - Add the

max_tokensparameter to your request to unlock the ultimate speed of 100 Tokens/s.

Even a novice developer can refer to the sample code in the official documentation and get the first API call up and running in just 5 minutes. The documentation provides detailed access guidance, making your development process seamless.

Summary

This upgrade of the Kimi K2 Turbo Preview is not just a simple doubling of speed; it’s a revolutionary breakthrough in long-text processing efficiency. It will bring a qualitative leap for application scenarios that have extremely high demands for response speed, such as real-time customer service, intelligent writing assistants, and code completion tools.

The dual advantages of speed and price make the Kimi K2 Turbo Preview model the most notable focus this summer. Remember, this half-price promotion window is short, lasting only until September 1st. If you want to build your AI product faster and at a lower cost, now is the best time.