LiquidAI has officially launched Liquid Nanos, a new family of highly efficient, lightweight artificial intelligence models engineered specifically for edge computing.

These compact models are designed to operate on resource-constrained hardware—including single-board computers like the Raspberry Pi, smartphones, and laptops—without sacrificing advanced AI capabilities.

The Liquid Nanos series delivers robust performance across five core domains: translation, data extraction, Retrieval-Augmented Generation (RAG), tool calling, and mathematical reasoning. This launch marks a significant step toward powerful, localized AI that functions independently of cloud infrastructure.

What is Liquid Nanos

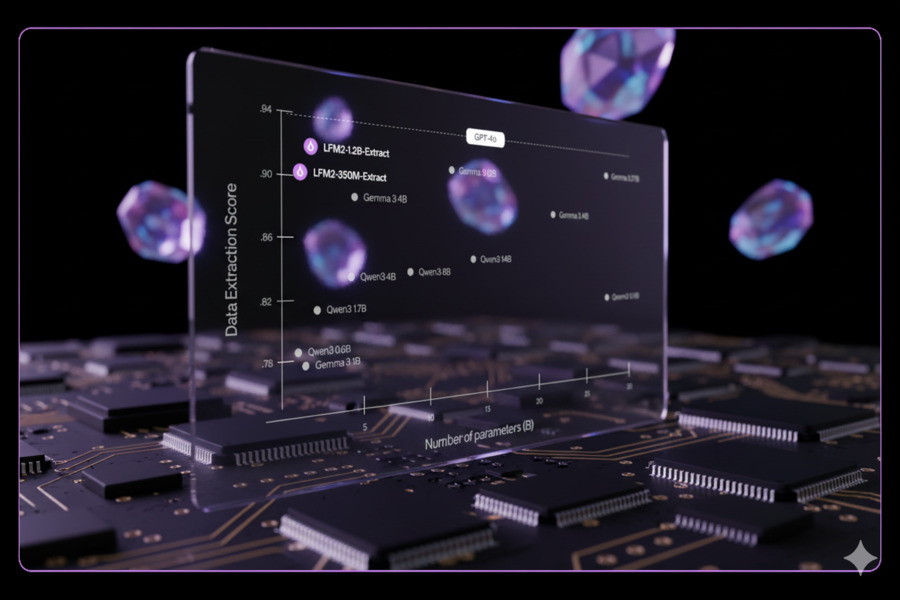

The defining breakthrough of the Liquid Nanos series lies in its revolutionary architecture, which moves beyond traditional transformer-based designs. This novel approach enables the models to achieve performance levels comparable to much larger “frontier” models like OpenAI’s GPT-4o, but within an exceptionally small computational footprint.

Available in parameter sizes from 350 million (350M) to 2.6 billion (2.6B), with initial releases focusing on the 350M and 1.2B versions, these models are a strategic solution for low-power, high-performance applications. This is not merely an efficiency improvement but a fundamental shift toward enabling sophisticated AI on devices that lack constant, high-bandwidth cloud connectivity. The entire series supports the GGUF quantization format, optimizing resource utilization and making advanced AI accessible to a broader range of developers and hardware environments.

By matching the reliability of large-scale models for specialized tasks, Liquid Nanos enable complex functionalities like high-quality translation and data extraction at a fraction of the computational and energy cost, paving the way for a new generation of intelligent edge devices.

Liquid Nanos in Action: A Suite of Task-Specific Models for Developers

To empower developers with ready-to-deploy intelligence, LiquidAI is releasing twelve specialized models within the Liquid Nanos family, now available on the Hugging Face platform. Each model is fine-tuned for a specific, high-demand workflow:

- LFM2-350M-ENJP-MT: A specialized model for high-quality, bi-directional English-Japanese translation, achieving quality competitive with GPT-4o despite being hundreds of times smaller.

- LFM2-350M-Extract & LFM2-1.2B-Extract: Optimized for pulling precise information from unstructured text (e.g., articles, emails) and structuring it into formats like JSON, XML, or YAML. The 1.2B-Extract model rivals GPT-4o on complex extraction tasks.

- LFM2-1.2B-RAG: Designed for Retrieval-Augmented Generation (RAG), this model grounds its answers in provided context documents, making it ideal for question-answering over private knowledge bases.

- LFM2-1.2B-Tool: A powerful model focused on tool calling and function execution, streamlining the development of agentic workflows.

- LFM2-350M-Math: A compact yet capable model engineered for complex mathematical reasoning.

This diverse toolkit allows developers to integrate high-performance AI into applications ranging from real-time translation services to automated data processing systems, all running locally on the device.

Final Words on Liquid Nanos

The introduction of Liquid Nanos represents a pivotal moment in edge AI development. By prioritizing efficiency, specificity, and local processing, LiquidAI is fundamentally changing how AI is deployed—shifting from a model that sends all data to the cloud to one that brings compact, powerful intelligence directly to the device.

This “cloud-free” approach unlocks immense potential, offering enhanced data privacy, minimal latency for real-time applications, and significantly reduced energy consumption compared to continuous cloud computing. As LiquidAI CEO Ramin Hasani stated, “Nanos flip the deployment model. Instead of shipping every token to a data center, we ship intelligence to the device.”

Furthermore, the ability to combine multiple specialized Nano models into composable systems presents a more efficient and scalable alternative to relying on a single, monolithic general-purpose model. As edge computing continues to evolve, the Liquid Nanos series is positioned to become an indispensable tool for developers and enterprises driving innovation across sectors from IoT and mobile computing to secure enterprise solutions, setting the stage for a new era of powerful, private, and ubiquitous on-device AI.

FAQs about Liquid Nanos

Q1: What is the main advantage of Liquid Nanos models?

A: Their key advantage is achieving GPT-4o-level performance on specific tasks in ultra-small models (350M to 2.6B parameters) that run locally on devices like phones and laptops, ensuring privacy, low latency, and no cloud dependency.

Q2: What tasks can Liquid Nanos perform?

A: They excel at specialized tasks including multilingual translation, data extraction (outputting JSON/XML), Retrieval-Augmented Generation (RAG), mathematical reasoning, and tool calling for AI agents.

Q3: How can developers use these models?

A: The models are available for download on Hugging Face and can be integrated into mobile apps via LiquidAI’s Liquid Edge AI Platform (LEAP). Liberal licensing encourages adoption by academics, indie developers, and businesses.