On August 14, 2025, Meta's FAIR team released Meta DINOv3, a new generation of self-supervised vision models. Its groundbreaking design and a license that permits commercial use quickly made it a hot topic in computer vision. The model's core value is that it learns to represent image content without manually labeled data, which makes AI vision applications more efficient and cheaper to build. Let’s dive in with AGIYes to uncover the details!

I. Analysis of Meta DINOv3’s Features

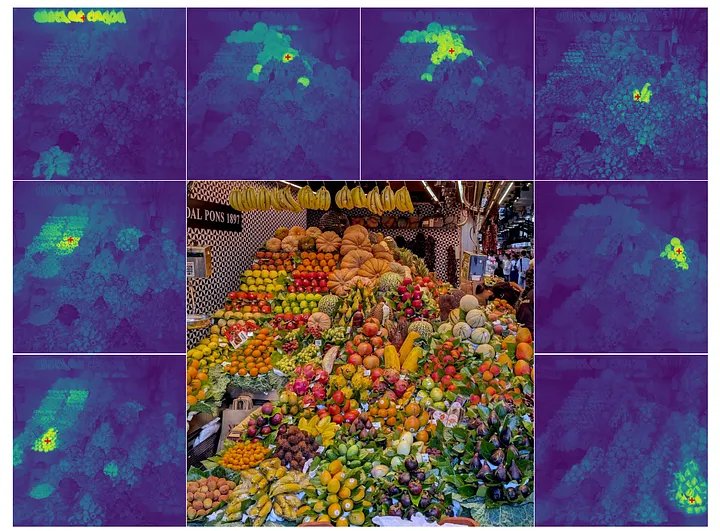

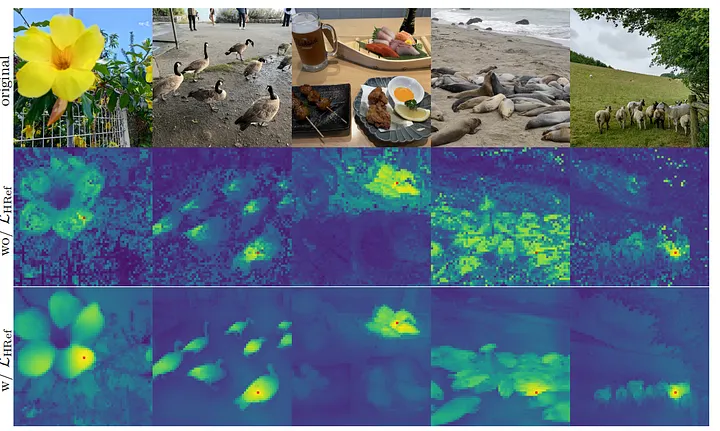

- High-Resolution Dense Feature Output: Unlike earlier models that were limited to low-resolution inputs, DINOv3 supports dense feature extraction at 1024×1024 pixels, so its per-patch features can be used directly for pixel-level tasks such as segmentation and depth estimation. Tests by NASA’s Jet Propulsion Laboratory (JPL) showed that with DINOv3, a Mars rover’s rock recognition latency stayed within 10 milliseconds.

- Multimodal Extension Capability: The companion dino.txt text-alignment module enables zero-shot matching between images and text. Users can simply enter a query such as “a dog wearing sunglasses,” and the system retrieves images with matching features, offering a new approach to cross-modal search.

- Full-Scenario Model Coverage: From a lightweight 21M-parameter version (suitable for mobile devices) to a 7.1B-parameter version for the cloud, DINOv3 offers five pretrained models. Notably, Meta also released CNN-based ConvNeXt variants alongside them for the first time, serving developers who rely on traditional convolutional architectures.
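To make the dense-feature bullet concrete: a ViT with 16×16 patches turns a 1024×1024 image into a 64×64 grid of patch tokens, so "dense features" means one vector per patch (4,096 vectors here, each 1024-dimensional for ViT-L/16). The NumPy sketch below shows how a flat token sequence could be reshaped into a spatial feature map for a pixel-level head. It assumes the sequence starts with one CLS token followed by four register tokens (`num_prefix_tokens=5` is an assumption; check your checkpoint's config), and it uses random arrays in place of real model output.

```python
import numpy as np

def tokens_to_feature_map(tokens: np.ndarray, image_hw: tuple,
                          patch_size: int = 16, num_prefix_tokens: int = 5) -> np.ndarray:
    """Reshape a (num_tokens, dim) sequence into a (H/patch, W/patch, dim) feature map.

    Assumption: the first `num_prefix_tokens` rows are CLS/register tokens and the
    remaining rows are patch tokens in row-major (left-to-right, top-to-bottom) order.
    """
    h, w = image_hw
    if h % patch_size or w % patch_size:
        raise ValueError("image size must be divisible by the patch size")
    grid_h, grid_w = h // patch_size, w // patch_size
    patches = tokens[num_prefix_tokens:]
    if patches.shape[0] != grid_h * grid_w:
        raise ValueError(f"expected {grid_h * grid_w} patch tokens, got {patches.shape[0]}")
    return patches.reshape(grid_h, grid_w, patches.shape[1])

# Stand-in for model output: 1 CLS + 4 registers + 64*64 patches, 1024-dim (ViT-L/16 width).
rng = np.random.default_rng(0)
fake_tokens = rng.standard_normal((5 + 64 * 64, 1024)).astype(np.float32)
feature_map = tokens_to_feature_map(fake_tokens, (1024, 1024))
print(feature_map.shape)  # (64, 64, 1024)
```

Each cell of `feature_map` describes one 16×16 region; a segmentation or depth head (not shown) would typically upsample this grid back to full resolution.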
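The zero-shot search in the multimodal bullet boils down to nearest-neighbor ranking in a shared embedding space: encode the text query and each image, then sort images by cosine similarity. The sketch below shows only that ranking step in NumPy. It does not call dino.txt; the embeddings are toy 4-dimensional vectors standing in for the outputs of text-aligned encoders, and `rank_images` is a hypothetical helper.

```python
import numpy as np

def rank_images(text_emb: np.ndarray, image_embs: np.ndarray) -> np.ndarray:
    """Return image indices sorted from most to least similar to the text embedding."""
    # L2-normalize both sides so the dot product equals cosine similarity.
    t = text_emb / np.linalg.norm(text_emb)
    imgs = image_embs / np.linalg.norm(image_embs, axis=1, keepdims=True)
    scores = imgs @ t
    return np.argsort(-scores)  # descending similarity

# Toy embeddings; in practice these would come from the aligned text and image encoders.
query = np.array([1.0, 0.0, 1.0, 0.0])  # e.g. "a dog wearing sunglasses"
gallery = np.array([
    [0.0, 1.0, 0.0, 1.0],  # unrelated image
    [0.9, 0.1, 1.1, 0.0],  # close match
    [0.5, 0.5, 0.5, 0.5],  # partial match
])
print(rank_images(query, gallery))  # [1 2 0]
```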
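The size range in the model-coverage bullet translates directly into deployment constraints. A rough rule of thumb is that weight storage equals parameter count times bytes per parameter. The sketch below applies it to the two endpoints mentioned above, assuming 16-bit weights (2 bytes each) and ignoring activations, runtime overhead, and further quantization.

```python
def weight_memory_gb(num_params: float, bytes_per_param: int = 2) -> float:
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9

for name, params in [("21M-parameter model", 21e6), ("7.1B-parameter model", 7.1e9)]:
    print(f"{name}: ~{weight_memory_gb(params):.2f} GB of fp16/bf16 weights")
```

Under these assumptions the small model needs about 42 MB for its weights, while the largest needs roughly 14 GB of accelerator memory before any activations are counted.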

Meta DINOv3 performance benchmarks

II. Real-World Application Cases of Meta DINOv3

- Environmental Monitoring: The World Resources Institute (WRI) used DINOv3’s ViT-L/16 model for large-scale forest monitoring in Kenya. Traditional satellite-based tree height measurements often suffered from significant errors due to variations in canopy density and limited ground-truth data. Using DINOv3’s self-supervised features, WRI reduced the average error from 4.1 meters to 1.2 meters, a reduction of roughly 70%. This makes carbon sequestration estimates and deforestation tracking more reliable, both of which are critical for climate policy decisions.

- Industrial Quality Inspection: Tesla’s Berlin Gigafactory tested DINOv3 in a quality-control pilot on a battery production line. Unlike conventional systems that require thousands of labeled defect images, DINOv3 analyzed raw camera feeds with zero labeled data and matched the defect detection performance of supervised models. The factory reported a 30% faster deployment cycle and eliminated manual annotation costs. Engineers highlighted its adaptability: when production shifted to a new battery design, DINOv3 needed only minor adapter adjustments rather than full retraining.

- Medical Imaging: In collaboration with Mayo Clinic, researchers applied DINOv3 to mammogram analysis, where labeled datasets are scarce due to privacy and expert annotation costs. The solution combined DINOv3’s frozen backbone with a lightweight diagnostic adapter, slashing labeled data requirements by 90% while maintaining diagnostic accuracy. Dr. Sarah Thompson, lead radiologist on the project, noted: “This drastically lowers barriers for hospitals in resource-limited settings.” Early trials in Brazil and India show promise for expanding breast cancer screening access.
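The WRI percentage is a relative reduction in average error, not a gain in classification accuracy. Worked out from the two figures quoted:

$$
\frac{4.1\,\mathrm{m} - 1.2\,\mathrm{m}}{4.1\,\mathrm{m}} = \frac{2.9}{4.1} \approx 0.71
$$

In other words, the remaining error is about 29% of the original (1.2 / 4.1 ≈ 0.29).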
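The Tesla pilot's "zero labeled data" setup is not described in detail. One common way to use frozen self-supervised features without defect labels is anomaly detection: store patch features from parts assumed to be defect-free, then flag patches that are far from every stored feature. The NumPy sketch below illustrates that idea on synthetic features. It is a generic nearest-neighbor approach (in the spirit of methods such as PatchCore), not the method used in the pilot, and the threshold rule is a hypothetical choice.

```python
import numpy as np

def nearest_neighbor_distance(bank: np.ndarray, queries: np.ndarray) -> np.ndarray:
    """Euclidean distance from each query row to its closest row in `bank`."""
    # Squared distances via ||q||^2 - 2 q.b + ||b||^2, computed for all pairs at once.
    d2 = (
        (queries ** 2).sum(axis=1, keepdims=True)
        - 2.0 * queries @ bank.T
        + (bank ** 2).sum(axis=1)
    )
    # Clamp tiny negatives caused by floating-point rounding before the square root.
    return np.sqrt(np.maximum(d2.min(axis=1), 0.0))

rng = np.random.default_rng(0)
dim = 64
# Synthetic patch features from parts assumed defect-free; no defect labels are used.
normal_bank = rng.normal(0.0, 1.0, size=(500, dim))
calibration = rng.normal(0.0, 1.0, size=(100, dim))
# Hypothetical threshold: the worst nearest-neighbor distance seen on held-out good patches.
threshold = nearest_neighbor_distance(normal_bank, calibration).max()

# Three normal-looking patches and one shifted far away, standing in for a defect.
queries = np.vstack([rng.normal(0.0, 1.0, size=(3, dim)), rng.normal(6.0, 1.0, size=(1, dim))])
scores = nearest_neighbor_distance(normal_bank, queries)
print(np.round(scores, 1), scores > threshold)
```

With real models, `normal_bank` would hold DINOv3 patch features extracted from images of good parts; adapting to a new product then mostly means rebuilding the bank rather than retraining.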
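The Mayo Clinic setup pairs a frozen backbone with a lightweight adapter. In its simplest form, such an adapter is a linear classifier trained on precomputed features while the backbone weights stay fixed, which is why far fewer labels are needed than for end-to-end training. The sketch below trains a logistic-regression probe with plain NumPy gradient descent. The synthetic features stand in for frozen DINOv3 embeddings, and the learning rate and epoch count are illustrative assumptions, not the project's configuration.

```python
import numpy as np

def train_linear_probe(x: np.ndarray, y: np.ndarray, lr: float = 0.1, epochs: int = 500):
    """Fit logistic-regression weights on frozen features x (n, d) with labels y in {0, 1}."""
    n, d = x.shape
    w, b = np.zeros(d), 0.0
    for _ in range(epochs):
        p = 1.0 / (1.0 + np.exp(-(x @ w + b)))  # predicted probability of class 1
        grad = p - y                            # per-sample d(cross-entropy)/d(logit)
        w -= lr * (x.T @ grad) / n
        b -= lr * grad.mean()
    return w, b

def predict(x: np.ndarray, w: np.ndarray, b: float) -> np.ndarray:
    return (x @ w + b > 0).astype(int)

rng = np.random.default_rng(0)
d = 32
direction = rng.standard_normal(d)
direction /= np.linalg.norm(direction)
labels = rng.integers(0, 2, size=300)
# Synthetic stand-ins for frozen embeddings: the two classes are offset along one direction.
features = rng.standard_normal((300, d)) + 3.0 * np.outer(2 * labels - 1, direction)

w, b = train_linear_probe(features[:200], labels[:200])  # small labeled training set
accuracy = (predict(features[200:], w, b) == labels[200:]).mean()
print(f"held-out accuracy: {accuracy:.2f}")
```

Only `w` and `b` (33 numbers here) are learned; the backbone that would produce the features is never updated.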

III. How Developers Can Quickly Get Started with Meta DINOv3

Meta provides a streamlined Hugging Face integration, so feature extraction takes just three lines of code:

```python
from transformers import pipeline

extract = pipeline("image-feature-extraction", model="facebook/dinov3-vitl16-pretrain-lvd1689m")
features = extract("medical_scan.png")  # Per-token features; each token vector is 1024-dim for ViT-L/16
```
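By default, the `image-feature-extraction` pipeline returns the model's last hidden state as a nested Python list, i.e. one vector per token (shape [1][num_tokens][1024] for ViT-L/16) rather than a single vector per image. For retrieval or classification you usually want one global descriptor. The hypothetical helper below shows two common choices, the CLS token and the mean of the patch tokens. It assumes one CLS token followed by four register tokens before the patches (check the checkpoint's config), and it is demonstrated on a small synthetic nested list rather than real model output.

```python
import numpy as np

def global_descriptors(features, num_prefix_tokens: int = 5):
    """Turn nested-list output of shape [1][num_tokens][dim] into (cls_vector, mean_patch_vector)."""
    tokens = np.asarray(features, dtype=np.float32)[0]    # drop the batch dimension
    cls_vec = tokens[0]                                   # first token: CLS
    patch_mean = tokens[num_prefix_tokens:].mean(axis=0)  # average over patch tokens only
    return cls_vec, patch_mean

# Synthetic stand-in: 1 CLS + 4 registers + 4 patch tokens, 3-dim each.
fake_output = [[[9.0, 9.0, 9.0]] + [[0.0, 0.0, 0.0]] * 4
               + [[1.0, 2.0, 3.0], [3.0, 2.0, 1.0], [1.0, 2.0, 3.0], [3.0, 2.0, 1.0]]]
cls_vec, patch_mean = global_descriptors(fake_output)
print(cls_vec, patch_mean)  # [9. 9. 9.] [2. 2. 2.]
```

Skipping the register tokens matters for the patch mean, since they do not correspond to image regions.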

For enterprise users, Meta recommends prioritizing the ViT-B/16 version: it balances performance and cost, processing a 1024×1024 image in only 25 milliseconds on a single A100 GPU.
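The 25 ms figure can be turned into a rough capacity estimate. Assuming one 1024×1024 image at a time with no batching or pipelining (batching usually raises throughput), a single GPU handles about 1000 / 25 = 40 images per second. The small sketch below does that arithmetic; the 1,000 images-per-second target is an arbitrary example.

```python
def images_per_second(latency_ms: int) -> float:
    """Throughput of one GPU that processes images strictly one at a time."""
    return 1000 / latency_ms

def gpus_needed(target_images_per_second: int, latency_ms: int) -> int:
    """Smallest GPU count that meets the target rate (exact integer ceiling division)."""
    return -(-target_images_per_second * latency_ms // 1000)

print(images_per_second(25))  # 40.0
print(gpus_needed(1000, 25))  # 25
```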

IV. Summary and Outlook

The release of Meta DINOv3 marks a new milestone for computer vision. It combines three key elements at an unprecedented scale: label-free training, high-resolution features, and a general-purpose visual backbone.

For research, DINOv3 opens new doors in domains where data labeling is difficult, such as medical image analysis, remote sensing, and AR/VR. Researchers can focus on the algorithms themselves rather than spending heavy effort on data preparation.

For industry, companies can use its commercially friendly license to deploy Meta DINOv3 directly, saving on expensive data labeling and fine-tuning costs and greatly accelerating product development and time-to-market.

For the wider community, the open release of Meta DINOv3 under a commercial-use license is likely to accelerate a wave of next-generation AI vision applications and inject new vitality into the entire ecosystem.

So, how do you think Meta DINOv3 will change your industry or research direction?