Moondream3.0, a new preview model built on a Mixture-of-Experts (MoE) architecture, reportedly surpasses established industry leaders such as GPT-5, Gemini, and Claude 4 on several key visual benchmarks. The result is notable because the model is small by frontier standards, which challenges the prevailing “bigger is better” paradigm in artificial intelligence.

Moondream3.0 has 9 billion parameters in total but activates only 2 billion during inference. This keeps deployment cost and inference speed comparable to its predecessor, Moondream2, while delivering what its developers describe as a substantial improvement in performance, especially in complex visual reasoning.

Core Architecture of Moondream3.0

Moondream3.0’s efficiency comes from its MoE architecture. Although the model holds 9 billion parameters (9B), a router sends each input through only a subset of experts, so roughly 2 billion (2B) are active at any one time. Compute per input therefore resembles that of a 2B model, while the full 9B remains available as capacity.
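The routing idea behind an MoE layer can be sketched in a few lines of Python. This is a generic top-k gating illustration, not Moondream3.0’s actual implementation: the number of experts, the value of `k`, and the toy scalar “experts” are all assumptions made for the example.

```python
import math

def top_k_route(router_logits, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    ranked = sorted(range(len(router_logits)),
                    key=lambda i: router_logits[i], reverse=True)
    top = ranked[:k]
    # Softmax over the selected experts only, so their weights sum to 1.
    peak = max(router_logits[i] for i in top)
    exps = [math.exp(router_logits[i] - peak) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]

def moe_forward(x, experts, router_logits, k):
    """Weighted sum of the outputs of the k selected experts."""
    return sum(w * experts[i](x) for i, w in top_k_route(router_logits, k))

# Hypothetical toy setup: 4 scalar "experts", 2 of them active per input.
experts = [lambda x, s=s: s * x for s in (1.0, 2.0, 3.0, 4.0)]
logits = [0.1, 2.0, -1.0, 2.0]
routes = top_k_route(logits, k=2)
output = moe_forward(1.0, experts, logits, k=2)

# Share of parameters active at once, using the article's 2B-of-9B figures.
active_fraction = 2e9 / 9e9
print(routes, output, f"{active_fraction:.0%}")
```

Only the selected experts run for a given input, which is why compute tracks the active parameter count rather than the total.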

The model uses a SigLIP visual encoder with multi-crop channel concatenation, which allows token-efficient processing of high-resolution images. It has a hidden dimension of 2048 and a custom SuperBPE tokenizer. Its multi-head attention is combined with position- and data-dependent temperature scaling, which is intended to improve long-context modeling.
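The article does not give the formula for the temperature scaling, so the following is only a rough sketch of the general idea: attention scores are divided by a temperature before the softmax, and a lower temperature sharpens the distribution. The `position_temperature` schedule and its `base_len` parameter are hypothetical, and the data-dependent part is omitted entirely.

```python
import math

def attention_weights(query, keys, temperature=1.0):
    """Softmax over scaled dot-product scores divided by a temperature."""
    d = len(query)
    scores = [
        sum(q * k for q, k in zip(query, key)) / (math.sqrt(d) * temperature)
        for key in keys
    ]
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def position_temperature(position, base_len=1024):
    """Hypothetical schedule: lower the temperature once the context
    grows past base_len, so attention stays focused on long inputs."""
    return 1.0 / max(1.0, math.log(position + 1) / math.log(base_len))

query = [1.0, 0.0]
keys = [[1.0, 0.0], [0.0, 1.0]]
short_ctx = attention_weights(query, keys, position_temperature(10))
long_ctx = attention_weights(query, keys, position_temperature(32768))
print(short_ctx, long_ctx)
```

With this schedule the weight on the best-matching key is larger at a long position than at a short one, which counteracts the tendency of softmax attention to flatten as more keys compete.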

Notably, this architecture is built upon an “upcycled” initialization from Moondream2, meaning the new model starts from its predecessor’s weights rather than from scratch. Training used approximately 450 billion tokens, far fewer than the trillion-token datasets typically used to train top-tier models. Despite the smaller training budget, initial benchmarks reportedly show no loss in performance.

For developers, accessibility is a key feature. The model is available for download via Hugging Face and supports both cloud API and local execution. Local use currently requires an NVIDIA GPU with at least 24GB of memory; quantized versions and support for Apple Silicon are expected in the near future.
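A back-of-the-envelope calculation helps explain the 24GB requirement and why quantization matters. The sketch below counts weights only and assumes particular storage precisions; the article does not state which precision the released checkpoint uses, and real usage adds activations and cache on top.

```python
def weight_memory_gb(num_params, bits_per_param):
    """Approximate memory for model weights alone, in decimal gigabytes."""
    return num_params * bits_per_param / 8 / 1e9

TOTAL_PARAMS = 9e9  # total parameter count reported in the article

# Assumed storage precisions, for illustration only.
estimates = {bits: weight_memory_gb(TOTAL_PARAMS, bits) for bits in (16, 8, 4)}
for bits, gigabytes in estimates.items():
    print(f"{bits}-bit weights: {gigabytes:.1f} GB")
# 16-bit weights: 18.0 GB
# 8-bit weights: 9.0 GB
# 4-bit weights: 4.5 GB
```

In a typical MoE deployment all experts stay resident in memory even though only some are active per input, so the estimate uses the full 9B figure rather than the 2B active count.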

Key Features of Moondream3.0

Moondream3.0 is designed for practical applications. It natively supports a 32K-token context length, which suits real-time interaction and multi-step agent workflows. The SigLIP visual encoder underpins its high-resolution image analysis.

The model’s “all-around” visual capabilities are a major differentiator. These include:

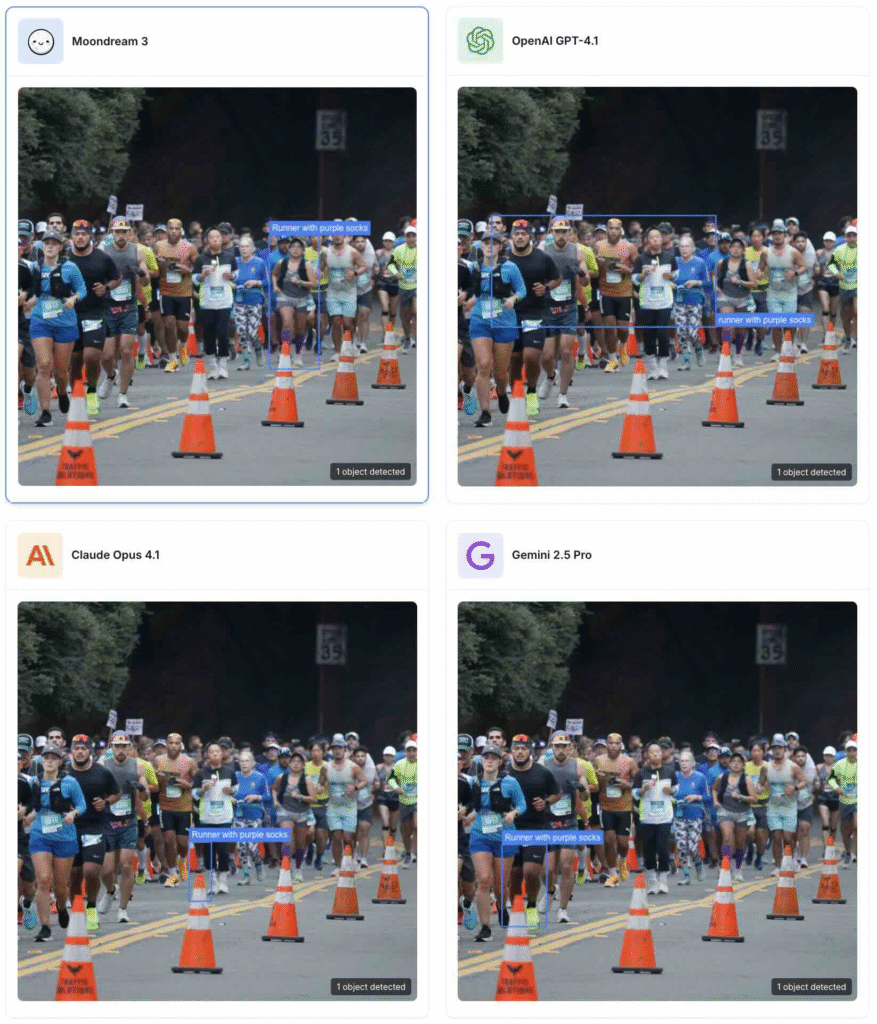

- Open-vocabulary object detection: Identifying objects beyond a pre-defined list.

- Point-and-click functionality and counting: Interacting with and quantifying elements within an image.

- Advanced caption generation and OCR: Creating detailed descriptions and accurately transcribing text from images.

- Structured output: Moondream3.0 can directly generate structured data such as JSON arrays, a critical feature for developers. For instance, it can analyze an image of a pet and output specific details such as the dog’s ID, coat color, and harness color in a ready-to-use format.
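To show how structured output might be consumed downstream, here is a minimal sketch that parses and validates a JSON array like the pet example above. The reply text and the field names (`dog_id`, `coat_color`, `harness_color`) are hypothetical; the article does not specify the model’s actual output schema.

```python
import json

# Hypothetical raw model reply; the field names are illustrative only.
raw_reply = """
[
  {"dog_id": 1, "coat_color": "golden", "harness_color": "red"},
  {"dog_id": 2, "coat_color": "black", "harness_color": "blue"}
]
"""

REQUIRED_KEYS = {"dog_id", "coat_color", "harness_color"}

def parse_dogs(reply):
    """Parse a JSON array of dog records and reject malformed entries."""
    records = json.loads(reply)
    if not isinstance(records, list):
        raise ValueError("expected a JSON array")
    for record in records:
        if not isinstance(record, dict):
            raise ValueError("expected each entry to be a JSON object")
        missing = REQUIRED_KEYS - set(record)
        if missing:
            raise ValueError(f"record missing keys: {sorted(missing)}")
    return records

dogs = parse_dogs(raw_reply)
for dog in dogs:
    print(dog["dog_id"], dog["coat_color"], dog["harness_color"])
```

Validating the reply before use is a sensible precaution, since any generative model can occasionally emit incomplete or malformed JSON.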

Community evaluations also report strong performance in specialized tasks such as user interface (UI) understanding, document transcription, and precise object localization. Together, these features raise expectations for what efficient visual language models (VLMs) can achieve.

Moondream3.0 Application

As an open-source model, Moondream3.0 embodies a philosophy of accessibility: “no training, no ground truth data, no heavy infrastructure.” Developers can use its visual understanding capabilities primarily through prompt engineering, which lowers the barrier to entry for state-of-the-art AI.

Early adopters have already reported successful deployments across a diverse range of domains. These include programming semantic behavior for robotics and running the model on resource-constrained devices like mobile phones and Raspberry Pi units, underscoring its potential for edge computing applications.

Preliminary comparisons suggest that Moondream3.0 holds an advantage in visual reasoning and structured output generation over other prominent open-weight VLMs. The development team has committed to continued iteration, with plans to optimize the inference code and further improve benchmark scores in future updates.

Conclusion on Moondream3.0

Moondream3.0 is a notable release for the open-source AI community. Its reported ability to challenge and, in some cases, outperform much larger proprietary models on key visual benchmarks signals a shift toward more efficient and accessible AI development.

By combining a highly efficient MoE architecture with a powerful set of visual reasoning tools and a strong commitment to open-source principles, Moondream3.0 is positioned as a critical enabler for the future of computer vision and multi-modal AI. It offers developers a viable path to integrate cutting-edge visual intelligence without the prohibitive computational costs typically associated with this level of performance.

For developers and researchers, the release of Moondream3.0 prompts a closer examination of its capabilities and a reevaluation of what is possible with leaner, more focused AI models.