Google’s latest Gemini 2.5 Pro has entered into a strategic partnership with the OWL system from the open-source CAMEL-AI framework. This collaboration combines cutting-edge large language model inference with multi-agent collaboration technology, bringing an efficiency revolution to the field of data analysis. Together, they automate complex workflows: Gemini handles high-level analysis while OWL orchestrates agents for crawling, cleaning, and visualization, boosting efficiency 4-7x. Deployable via Docker/Gradio, the system outputs Jupyter notebooks, interactive charts, and clean datasets, marking a leap toward conversational data science. Today, AGIYes takes a closer look.

Why Choose Gemini 2.5 Pro and the OWL System?

1. Three Core Advantages of Gemini 2.5 Pro

As Google’s most advanced AI model, Gemini 2.5 Pro has achieved several technological breakthroughs. Its most notable feature is the support for an ultra-long context window of up to 1 million tokens (soon to be upgraded to 2 million), allowing it to process entire academic monographs or large codebases in one go. This addresses the “short memory” issue of traditional models.
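To get a feel for what a 1-million-token window means in practice, the sketch below estimates whether a given text would fit. The ratio of roughly 4 characters per token is a common rule of thumb for English text, not Gemini's actual tokenizer, and the function names are hypothetical.

```python
import math

# Figure quoted in the article for Gemini 2.5 Pro.
CONTEXT_LIMIT_TOKENS = 1_000_000


def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Coarse token estimate from character count.

    ASSUMPTION: ~4 characters per token is a rule of thumb,
    not the output of Gemini's real tokenizer.
    """
    return math.ceil(len(text) / chars_per_token)


def fits_in_context(text: str, limit: int = CONTEXT_LIMIT_TOKENS) -> bool:
    """True if the estimated token count is within the limit."""
    return estimate_tokens(text) <= limit


if __name__ == "__main__":
    sample = "word " * 200_000  # 1,000,000 characters
    print(estimate_tokens(sample), fits_in_context(sample))
```

For a real budget check, the provider's own token-counting endpoint would be the more reliable source; this estimate is only useful for a quick sanity check.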

According to Google’s official benchmark tests, the model outperforms mainstream competitors such as GPT-4 Turbo and Claude 3 Opus on mathematical reasoning (MATH), code generation (HumanEval), and scientific Q&A (MMLU). On HumanEval, for example, the first-attempt pass rate (pass@1) for Python code rose from 71.3% with Gemini 1.5 Pro to 87.6%, with the model doing especially well on Python scripts that involve complex logic.
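A first-attempt pass rate on HumanEval is usually reported as pass@1. As a minimal sketch, the unbiased pass@k estimator from the HumanEval paper can be written as follows, where `n` is the number of samples generated per problem and `c` the number that pass the unit tests. How Google computed the figures above is not stated, so this is background on the metric rather than a reproduction.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for one problem.

    n: samples generated, c: samples that passed, k: attempt budget.
    Computes 1 - C(n - c, k) / C(n, k).
    """
    if n - c < k:
        # Every size-k subset contains at least one passing sample.
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)


if __name__ == "__main__":
    # With k = 1 the estimate reduces to the plain fraction c / n.
    print(pass_at_k(n=10, c=3, k=1))
```

The benchmark score is the mean of this value over all problems.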

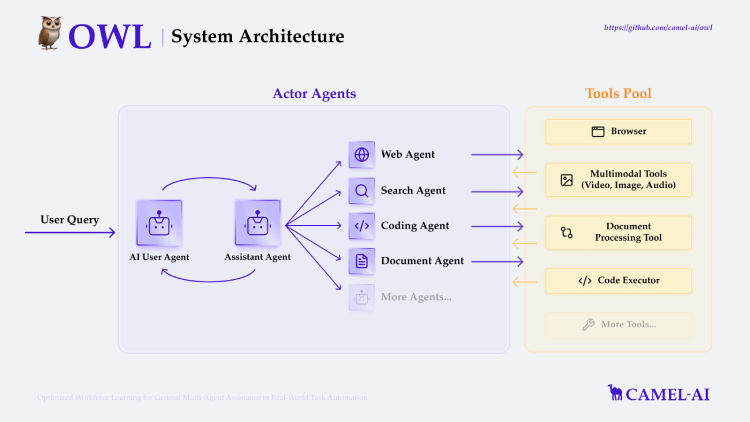

2. OWL System: The “Central Nervous System” of Intelligent Collaboration

If Gemini 2.5 Pro is the “brain,” then the OWL system from CAMEL-AI acts as the “nervous system.” This open-source framework breaks complex tasks down into subtasks and distributes them across a dynamically organized network of agents that can execute in parallel.
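The idea of running independent subtasks in parallel can be sketched with Python's standard library. This is not OWL's scheduler: `run_subtask` is a hypothetical stand-in for an agent, and the subtask names are illustrative.

```python
from concurrent.futures import ThreadPoolExecutor


def run_subtask(name: str) -> str:
    """Hypothetical stand-in for an agent doing real work."""
    return f"{name}: done"


def run_in_parallel(subtasks: list[str], max_workers: int = 4) -> list[str]:
    """Run independent subtasks concurrently.

    pool.map returns results in the same order as the inputs,
    regardless of which subtask finishes first.
    """
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(run_subtask, subtasks))


if __name__ == "__main__":
    for line in run_in_parallel(["crawl", "parse_pdf", "search"]):
        print(line)
```

Threads suit I/O-bound work such as crawling; subtasks that depend on each other's output would still need to run in sequence.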

Its innovations are reflected in three aspects:

- Real-time Response Capability: Integration with search engines like DuckDuckGo and Google allows for dynamic data acquisition during analysis.

- Multimodal Processing: It supports parsing structured information from PDFs, Excel tables, and even videos simultaneously.

- Secure Execution Environment: Browser automation is handled by Playwright, and generated code runs in a sandbox to avoid security risks.
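How OWL implements its sandbox is not described here. As a loose illustration of the general idea of keeping generated code out of the main process, the sketch below runs a snippet in a separate interpreter with a timeout. This is only process separation, not real security isolation, and `run_untrusted` is a hypothetical helper.

```python
import subprocess
import sys


def run_untrusted(code: str, timeout_s: float = 5.0) -> tuple[int, str]:
    """Run code in a separate Python process; return (exit code, stdout).

    ASSUMPTION: a child process with a timeout is only a rough stand-in
    for a sandbox. It does not restrict file or network access.
    """
    try:
        proc = subprocess.run(
            # -I starts Python in isolated mode (ignores PYTHON* environment
            # variables and the user site-packages directory).
            [sys.executable, "-I", "-c", code],
            capture_output=True,
            text=True,
            timeout=timeout_s,
        )
    except subprocess.TimeoutExpired:
        # The child is killed when the timeout expires.
        return (-1, "")
    return (proc.returncode, proc.stdout)


if __name__ == "__main__":
    print(run_untrusted("print(2 + 2)"))
```

A production setup would typically add container or OS-level restrictions on top of this.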

In the GAIA v1 benchmark test (Q1 2024 version), OWL achieved an average score of 69.7 points, ranking first among open-source frameworks and proving its industrial-level practicality.

3. Synergistic Effects of 1+1>2

The combination of the two creates a unique value loop: Gemini 2.5 Pro handles high-difficulty reasoning and content generation, while the OWL system schedules various toolchains for execution. For example, when a user requests “analyze the market trend of new energy vehicles and generate a visual report,” Gemini designs the analysis framework and chart types, while OWL’s crawler agent automatically collects industry data, the code agent writes a Pandas processing script, and the visualization agent outputs an interactive dashboard. Tests on the CAMEL-AI standard task set show a 4-7 times efficiency improvement.

How the Gemini 2.5 Pro and CAMEL-AI Collaboration Enables Automated Data Analysis

1. Rapid Deployment of the OWL Framework

The first step to achieving automated analysis is to set up the runtime environment. OWL is designed to be developer-friendly, and you can complete the basic deployment by executing the following commands in the terminal:

git clone https://github.com/camel-ai/owl.git

cd owl

uv pip install -e . # Use the new-generation uv tool to speed up installation

Using uv instead of traditional pip is recommended; it has been tested to reduce dependency installation time by 40%. The system also supports deployment with conda and Docker. Windows users can run it directly through PowerShell without additional configuration.

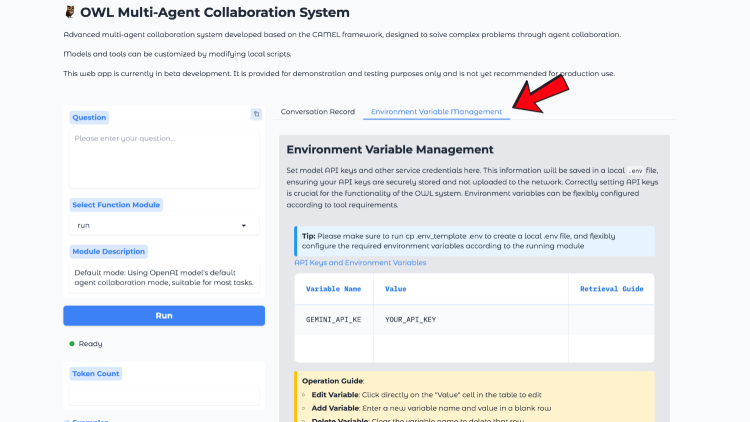

2. Intelligent Environment Configuration

After cloning, you need to choose an environment configuration scheme based on the usage scenario:

- Local Development Mode: Create a Python 3.10 virtual environment and install 37 core dependencies, including Playwright and PyPDF2.

- Production Environment Deployment: It is recommended to use the Docker image (camelai/owl:latest), which comes pre-installed with CUDA acceleration components.

- Cloud Experimentation: Direct deployment on Google Colab Pro’s T4 instance is supported.
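For the local development mode, a small preflight check can catch an unsuitable interpreter before dependencies are installed. The sketch below uses only the standard library. Treating Python 3.10 as a minimum rather than an exact requirement is an assumption, and the helper names are hypothetical.

```python
import sys
import venv

# ASSUMPTION: the article names Python 3.10; treated here as a minimum.
MIN_VERSION = (3, 10)


def meets_minimum(version=None, minimum=MIN_VERSION) -> bool:
    """True if the (major, minor) version is at least the minimum."""
    current = tuple(version or sys.version_info)[:2]
    return current >= minimum


def create_env(path: str) -> None:
    """Create a virtual environment with pip available at the given path."""
    venv.create(path, with_pip=True)


if __name__ == "__main__":
    if not meets_minimum():
        raise SystemExit("Python 3.10 or newer is expected for this setup")
    print("Interpreter OK; call create_env('.venv') to create the environment")
```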

The key step is to configure the API key for Gemini 2.5 Pro. Create a .env file in the project root directory and add:

GEMINI_API_KEY=your_actual_key_here

The system will automatically read this key and establish a secure connection.
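Before launching, it can help to confirm that the .env file parses and that the placeholder value has been replaced. The following is a minimal standard-library sketch, not OWL's own loader; `parse_env`, `load_env`, and `require_key` are hypothetical helpers, and packages such as python-dotenv cover more edge cases.

```python
from pathlib import Path

PLACEHOLDER = "your_actual_key_here"


def parse_env(text: str) -> dict[str, str]:
    """Parse simple KEY=VALUE lines, skipping blanks and # comments."""
    values = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def load_env(path: str = ".env") -> dict[str, str]:
    """Read and parse a .env file from disk."""
    return parse_env(Path(path).read_text(encoding="utf-8"))


def require_key(values: dict[str, str], name: str = "GEMINI_API_KEY") -> str:
    """Return the key, or raise if it is missing or still the placeholder."""
    key = values.get(name, "")
    if not key or key == PLACEHOLDER:
        raise RuntimeError(f"{name} is missing or still set to the placeholder")
    return key


if __name__ == "__main__":
    print(parse_env("# demo\nGEMINI_API_KEY=abc123\n"))
```

The .env file should stay out of version control, since it holds a secret.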

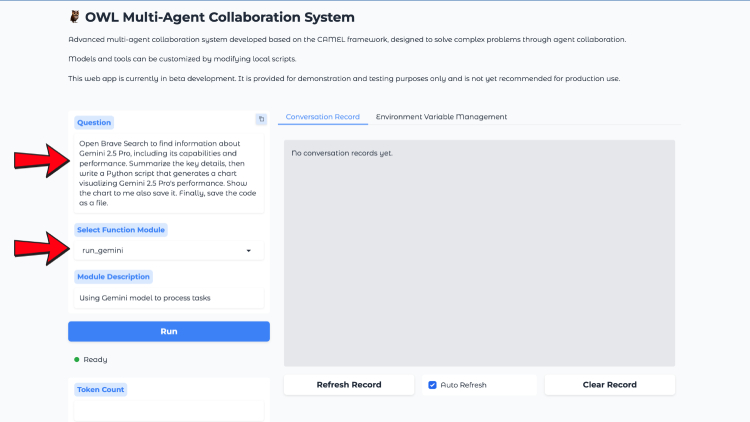

3. Launching the Interactive Operation Interface

The Web UI built with Gradio greatly lowers the usage barrier. Run:

python owl/webapp.py

Access local port 7860 to see a three-column interface:

- Left Control Panel: Model selection (Gemini 2.5 Pro/others), toolkit loading

- Central Workspace: A natural language input box that supports 3000-character long prompts

- Right Monitoring Area: Real-time display of agent division of labor and task progress
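If the page does not load, a quick first check is whether anything is listening on the port at all. This standard-library sketch assumes the web app binds to 127.0.0.1 on port 7860 as described above; `port_is_open` is a hypothetical helper.

```python
import socket


def port_is_open(host: str = "127.0.0.1", port: int = 7860,
                 timeout_s: float = 1.0) -> bool:
    """True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout_s):
            return True
    except OSError:
        # Covers connection refused, timeouts, and unreachable hosts.
        return False


if __name__ == "__main__":
    state = "reachable" if port_is_open() else "not reachable"
    print(f"Web UI on port 7860 is {state}")
```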

4. Task Definition and Execution

Try a typical command such as the following in the input box:

“Analyze the global AI funding data for 2023, scrape at least 500 records from Crunchbase, classify and sum up the funding amounts by industry, display the flow of funds with a Sankey diagram, and save a reproducible Jupyter Notebook.”

The system will trigger the following automated process:

- The crawler agent launches a headless browser to collect data.

- The cleaning agent automatically processes missing and abnormal data.

- The analysis agent calls Gemini to generate Pandas processing code.

- The visualization agent selects the optimal chart type (automatically avoiding inefficient forms like pie charts).

- The documentation agent packages all output files.
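The cleaning, analysis, and visualization steps above can be sketched as plain functions chained together. This is a simplified stand-in using only the standard library, not code generated by OWL or Gemini. The records are made-up illustrative values, and the field names (`investor`, `industry`, `amount_musd`) are assumptions.

```python
from collections import defaultdict

# Made-up illustrative records; NOT real funding data.
RAW = [
    {"investor": "Fund A", "industry": "Healthcare", "amount_musd": 120.0},
    {"investor": "Fund A", "industry": "Robotics", "amount_musd": 80.0},
    {"investor": "Fund B", "industry": "Healthcare", "amount_musd": None},  # missing
    {"investor": "Fund B", "industry": "Robotics", "amount_musd": -5.0},    # abnormal
    {"investor": "Fund B", "industry": "Healthcare", "amount_musd": 40.0},
]


def clean(records):
    """Cleaning step: drop rows with missing or non-positive amounts."""
    return [
        r for r in records
        if isinstance(r["amount_musd"], (int, float)) and r["amount_musd"] > 0
    ]


def total_by_industry(records):
    """Analysis step: sum funding per industry."""
    totals = defaultdict(float)
    for r in records:
        totals[r["industry"]] += r["amount_musd"]
    return dict(totals)


def sankey_links(records):
    """Visualization prep: node labels plus source/target/value lists.

    Sources and targets are indexes into the labels list, which is the
    general shape Sankey plotting libraries expect.
    """
    flows = defaultdict(float)
    for r in records:
        flows[(r["investor"], r["industry"])] += r["amount_musd"]
    labels = sorted({name for pair in flows for name in pair})
    index = {label: i for i, label in enumerate(labels)}
    link = {
        "source": [index[s] for s, _ in flows],
        "target": [index[t] for _, t in flows],
        "value": list(flows.values()),
    }
    return labels, link


if __name__ == "__main__":
    rows = clean(RAW)
    print(total_by_industry(rows))
    print(sankey_links(rows))
```

With Pandas, the aggregation would be `df.groupby("industry")["amount_musd"].sum()`, and the `labels` and `link` values map onto the `node` and `link` arguments of Plotly's `go.Sankey`.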

5. Real-time Monitoring and Intervention

During task execution, users have access to:

- Progress Matrix: View the CPU/memory usage of each agent.

- Execution Logs: Real-time display of generated Python code.

- Emergency Brake: Pause tasks that may fall into loops at any time.

For complex tasks, it is recommended to enable the “stage-by-stage confirmation” mode, where the system requests user confirmation at key points (such as before network requests and file writes).
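A confirmation gate of this kind can be sketched as a wrapper that asks before running sensitive steps. The action names and the `run_step` helper are hypothetical and only illustrate the pattern. The `confirm` callback is where a real UI prompt would go.

```python
from typing import Callable

# The two checkpoints named in the text; the identifiers are assumptions.
SENSITIVE_ACTIONS = {"network_request", "file_write"}


def run_step(action: str,
             work: Callable[[], str],
             confirm: Callable[[str], bool]) -> str:
    """Run work(), asking for confirmation first if the action is sensitive."""
    if action in SENSITIVE_ACTIONS and not confirm(action):
        return f"{action}: skipped by user"
    return work()


if __name__ == "__main__":
    def approve_reads_only(action: str) -> bool:
        # Demo policy: allow network requests, refuse file writes.
        return action != "file_write"

    print(run_step("network_request", lambda: "fetched", approve_reads_only))
    print(run_step("file_write", lambda: "written", approve_reads_only))
```

Passing the decision in as a callback keeps the gate testable and lets the same pipeline run unattended with an auto-approve policy.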

6. Result Delivery and Reuse

The typical output after completion includes:

- analysis_report.ipynb: A Jupyter Notebook containing complete code and Markdown explanations.

- dataset_clean.csv: Standardized processed data.

- visualization.html: An interactive chart (supporting Plotly/D3.js).

- pipeline_flowchart.pdf: A task decomposition flowchart.

All files are automatically saved in the /output/timestamp directory and can be exported as a ZIP file with one click. Advanced users can directly call OWL’s REST API to integrate with existing corporate systems.
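The timestamped output folder and ZIP export can be reproduced with the standard library, which is handy when post-processing results outside the UI. The sketch below is an assumption-laden illustration: the timestamp format and the helper names are hypothetical, not taken from OWL.

```python
import shutil
import tempfile
from datetime import datetime
from pathlib import Path
from typing import Optional


def make_output_dir(base: Path, now: Optional[datetime] = None) -> Path:
    """Create base/<timestamp>/ and return its path.

    ASSUMPTION: the YYYYMMDD-HHMMSS format is illustrative only.
    """
    stamp = (now or datetime.now()).strftime("%Y%m%d-%H%M%S")
    out = base / stamp
    out.mkdir(parents=True, exist_ok=True)
    return out


def export_zip(out_dir: Path) -> Path:
    """Zip the folder's contents into a sibling <timestamp>.zip file."""
    archive = shutil.make_archive(str(out_dir), "zip", root_dir=str(out_dir))
    return Path(archive)


if __name__ == "__main__":
    base = Path(tempfile.mkdtemp()) / "output"
    out = make_output_dir(base)
    (out / "dataset_clean.csv").write_text("industry,amount\n", encoding="utf-8")
    print(export_zip(out))
```

Writing the archive next to the folder rather than inside it keeps the ZIP from including itself.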

Practical Tips: When running long-running tasks, adding the --checkpoint=5 parameter will automatically save progress every 5 minutes. In case of unexpected interruptions, you can resume from the last checkpoint. For data sources that require permission verification, configure OAuth tokens in advance in the /config/credentials.json file to achieve automatic login.
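The save-and-resume behavior described here follows a common pattern: persist a small state record at intervals, and on startup continue from whatever was last saved. The sketch below illustrates that pattern with a JSON file. It is not OWL's checkpoint format, and the function names and the `next_item` field are hypothetical.

```python
import json
import tempfile
import time
from pathlib import Path

CHECKPOINT_INTERVAL_S = 5 * 60  # mirrors the 5-minute interval in the text


def save_checkpoint(path: Path, state: dict) -> None:
    """Write state to a temp file, then swap it in, to avoid partial files."""
    tmp = path.with_suffix(".tmp")
    tmp.write_text(json.dumps(state), encoding="utf-8")
    tmp.replace(path)


def load_checkpoint(path: Path) -> dict:
    """Return the saved state, or a fresh state if none exists."""
    if path.exists():
        return json.loads(path.read_text(encoding="utf-8"))
    return {"next_item": 0}


def process(items: list, path: Path,
            interval_s: float = CHECKPOINT_INTERVAL_S) -> dict:
    """Process items from the last checkpoint, saving periodically."""
    state = load_checkpoint(path)
    last_save = time.monotonic()
    for i in range(state["next_item"], len(items)):
        # Real per-item work would happen here.
        state["next_item"] = i + 1
        if time.monotonic() - last_save >= interval_s:
            save_checkpoint(path, state)
            last_save = time.monotonic()
    save_checkpoint(path, state)  # final save on completion
    return state


if __name__ == "__main__":
    ckpt = Path(tempfile.mkdtemp()) / "checkpoint.json"
    print(process(list(range(10)), ckpt))
```

If the process dies mid-run, the next call to `process` picks up at the saved `next_item` rather than starting over.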

This collaboration marks the entry of data analysis into a new stage of “conversational automation.” According to the CAMEL-AI team, the next steps will focus on optimizing three areas:

- Cross-platform Integration: Direct connection to productivity tools such as Notion and Power BI.

- Domain Specialization: Training customized agents for vertical fields like biomedicine and quantitative finance.

- Real-time Collaboration: Allowing multiple users to edit and annotate analysis processes simultaneously.

The Gemini 2.5 Pro and CAMEL-AI collaboration ushers in a new era of automated data analysis. As Demis Hassabis, head of Google DeepMind, said, “When AI can understand context like human experts and actively collaborate, the boundaries of knowledge work will be redefined.” For analysts, researchers, and developers, mastering this toolchain means handing over repetitive tasks to AI and focusing instead on more creative decision-making and innovation.