Meta AI has just made a major move in the race for efficient on-device AI, officially releasing MobileLLM-R1, a new suite of lightweight large language models (LLMs).

Now publicly available on Hugging Face, these models are designed to deliver strong reasoning performance while running efficiently on resource-constrained edge devices such as smartphones.

Keep reading for everything you need to know about Meta’s MobileLLM-R1.

What is MobileLLM-R1: Architecture and Efficiency

The MobileLLM-R1 series owes its performance to several architectural choices aimed at maximizing speed and computational efficiency at the edge.

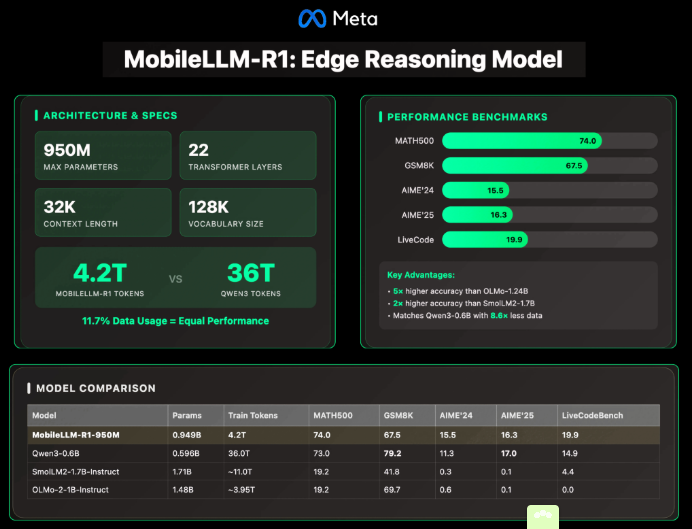

The flagship model, MobileLLM-R1-950M, is a 22-layer Transformer. It uses 24 attention (query) heads and 6 key-value (KV) heads, an embedding dimension of 1536, and a feed-forward hidden dimension of 6144.
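The shape figures above imply a few derived quantities. The short Python sketch below computes them, assuming the usual convention that the embedding dimension is split evenly across the query heads (an assumption; the release figures do not state the head dimension directly). `ModelConfig` is a hypothetical container used only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelConfig:
    """Hypothetical container for the MobileLLM-R1-950M shape figures quoted above."""
    n_layers: int = 22
    n_heads: int = 24      # query heads
    n_kv_heads: int = 6    # shared key/value heads
    dim: int = 1536        # embedding dimension
    ffn_dim: int = 6144    # feed-forward hidden dimension

    @property
    def head_dim(self) -> int:
        # Assumption: the embedding is split evenly across query heads.
        return self.dim // self.n_heads

    @property
    def queries_per_kv_head(self) -> int:
        # Number of query heads that share each key/value head under GQA.
        return self.n_heads // self.n_kv_heads

    @property
    def kv_proj_dim(self) -> int:
        # Output width of the K (or V) projection: one head_dim per KV head.
        return self.n_kv_heads * self.head_dim

    @property
    def ffn_ratio(self) -> float:
        # How much wider the feed-forward layer is than the embedding.
        return self.ffn_dim / self.dim


cfg = ModelConfig()
print(cfg.head_dim, cfg.queries_per_kv_head, cfg.kv_proj_dim, cfg.ffn_ratio)  # prints: 64 4 384 4.0
```

In other words, each key/value head is shared by four query heads, so the K and V projections are only a quarter as wide as the Q projection.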

Several key optimizations were integrated to squeeze maximum performance out of minimum resources:

- Grouped-Query Attention (GQA): Several query heads share a single set of key and value projections, which shrinks the key-value cache and the memory bandwidth needed during inference. GQA typically speeds up decoding with little loss in accuracy, which matters for fast on-device execution.

- Block-Level Weight Sharing: The same weights are reused across more than one transformer block, which lowers the total parameter count without a substantial increase in latency. The result is a model that is both smaller and responsive.

- SwiGLU Activation Function: SwiGLU, a gated feed-forward activation built on the Swish (SiLU) function, was chosen for its ability to improve the representational capacity of smaller models. It helps MobileLLM-R1 capture more complex patterns from its training data, allowing it to punch above its weight class.
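To make these three ideas concrete, here is a deliberately tiny, pure-Python sketch with no real model weights; every function name is hypothetical. It shows how query heads map onto shared key/value heads under GQA, how a SwiGLU gate combines two projections, and how block-level weight sharing reuses one block's weights for more than one layer. The "apply each block twice in a row" pattern is an illustrative assumption, not a confirmed detail of MobileLLM-R1.

```python
import math
from typing import Callable

Vector = list[float]


def silu(x: float) -> float:
    """SiLU (Swish): x * sigmoid(x), with sigmoid written via tanh for numerical stability."""
    return x * 0.5 * (1.0 + math.tanh(0.5 * x))


def swiglu(gate: Vector, up: Vector) -> Vector:
    """SwiGLU: SiLU(gate) * up, element-wise; gate and up are two projections of the same input."""
    return [silu(g) * u for g, u in zip(gate, up)]


def kv_head_for(query_head: int, n_heads: int, n_kv_heads: int) -> int:
    """GQA mapping: consecutive groups of n_heads // n_kv_heads query heads share one KV head.

    Assumes n_heads is divisible by n_kv_heads (24 / 6 = 4 for the 950M model).
    """
    return query_head // (n_heads // n_kv_heads)


def attend(q: Vector, keys: list[Vector], values: list[Vector]) -> Vector:
    """Scaled dot-product attention of one query vector over a sequence of keys and values."""
    scale = 1.0 / math.sqrt(len(q))
    scores = [scale * sum(a * b for a, b in zip(q, k)) for k in keys]
    peak = max(scores)  # subtract the max score so exp() cannot overflow
    weights = [math.exp(s - peak) for s in scores]
    total = sum(weights)
    return [sum(w * v[d] for w, v in zip(weights, values)) / total for d in range(len(values[0]))]


def grouped_query_attention(
    queries: list[Vector], keys: list[list[Vector]], values: list[list[Vector]]
) -> list[Vector]:
    """queries[h] is head h's query; keys[g] / values[g] hold the sequence cached for KV head g."""
    n_heads, n_kv_heads = len(queries), len(keys)
    outputs = []
    for h, q in enumerate(queries):
        g = kv_head_for(h, n_heads, n_kv_heads)  # several query heads read the same cache entry
        outputs.append(attend(q, keys[g], values[g]))
    return outputs


def run_with_block_sharing(
    x: Vector, unique_blocks: list[Callable[[Vector], Vector]], repeats: int = 2
) -> Vector:
    """Apply each block `repeats` times in a row: depth grows, stored weights do not."""
    for block in unique_blocks:
        for _ in range(repeats):
            x = block(x)
    return x


# Toy usage: 4 query heads sharing 2 KV heads, a 3-token sequence, head_dim = 2.
queries = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, 0.5]]
keys = [[[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]], [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]]]
values = [[[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]], [[0.0, 1.0], [1.0, 0.0], [1.0, 1.0]]]
out = grouped_query_attention(queries, keys, values)
print(len(out), [kv_head_for(h, 4, 2) for h in range(4)])  # prints: 4 [0, 0, 1, 1]
```

Only two key/value sequences are stored for four query heads, which is where GQA's memory savings come from; a real model does the same with tensors and learned projection matrices.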

The context handling is also notable. The base model supports a 4K-token context, and a post-trained version extends this to 32K tokens. The longer context gives the model more “working memory” for complex, multi-step coding and science tasks, such as analyzing lengthy code files, debugging entire functions, or processing several research abstracts at once.
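Most of the memory cost of a longer context comes from the key-value (KV) cache, which grows linearly with sequence length. The sketch below estimates it from the shape figures quoted earlier. It assumes a head dimension of 64 (1536 / 24), 16-bit cache entries, no cache quantization, batch size 1, and treats 4K and 32K as 4,096 and 32,768 tokens; real runtimes may differ.

```python
GIB = 1024 ** 3  # bytes per GiB


def kv_cache_bytes(seq_len: int, n_layers: int = 22, n_kv_heads: int = 6,
                   head_dim: int = 64, bytes_per_value: int = 2) -> int:
    """Bytes needed to cache keys and values for every layer, KV head, and token (batch size 1).

    The leading 2 counts keys plus values; bytes_per_value=2 assumes fp16/bf16 storage.
    """
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * bytes_per_value


for ctx in (4 * 1024, 32 * 1024):
    gqa = kv_cache_bytes(ctx)
    mha = kv_cache_bytes(ctx, n_kv_heads=24)  # hypothetical: one KV head per query head
    print(f"{ctx:>6} tokens: GQA {gqa / GIB:.3f} GiB vs. full multi-head {mha / GIB:.3f} GiB")
# prints:
#   4096 tokens: GQA 0.129 GiB vs. full multi-head 0.516 GiB
#  32768 tokens: GQA 1.031 GiB vs. full multi-head 4.125 GiB
```

The 4× gap between the two columns is exactly the 24 / 6 ratio of query heads to KV heads, which is why GQA matters most at long context lengths on memory-limited devices.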

MobileLLM-R1’s Training Efficiency

Perhaps the most striking aspect of MobileLLM-R1 is its training efficiency. The model was trained on approximately 4.2 trillion tokens in total. For comparison, the competing Qwen3-0.6B model was trained on 36 trillion tokens.

Despite this gap, MobileLLM-R1 matches or even exceeds Qwen3-0.6B’s accuracy on several reasoning benchmarks while using only about 11.7% as much training data.
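The 11.7% figure follows directly from the two token counts:

```latex
\frac{4.2 \times 10^{12}\ \text{tokens}}{36 \times 10^{12}\ \text{tokens}} \approx 0.117 = 11.7\%,
\qquad
\frac{36}{4.2} \approx 8.6\times\ \text{fewer training tokens}
```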

This reduction in data consumption matters. Training on far fewer tokens lowers the overall training cost, the compute required, and the associated energy use and carbon footprint, making MobileLLM-R1 a more sustainable and accessible option for organizations looking to deploy advanced AI models.

After pre-training, the model underwent Supervised Fine-Tuning (SFT) on datasets focused on mathematics, coding, and general reasoning to further strengthen its performance in those areas.

MobileLLM-R1 Performance in Benchmarks

A model’s architecture is ultimately judged by its performance on industry-standard benchmarks. The results for MobileLLM-R1-950M support Meta AI’s focused design philosophy, showing that specialized training and a carefully designed architecture can outperform models with more parameters.

In quantitative and scientific reasoning, MobileLLM-R1 stands out among lightweight models:

- MATH500 Dataset: On this challenging mathematical reasoning dataset, MobileLLM-R1-950M achieved roughly five times the accuracy of OLMo-1.24B and about twice that of SmolLM2-1.7B, making it a strong mathematical reasoner for its size.

- GSM8K, AIME, and LiveCodeBench: Across these benchmarks, which measure multi-step reasoning, mathematical problem-solving, and code generation, MobileLLM-R1-950M matched or surpassed Qwen3-0.6B. This is notable given the large difference in their training token counts.

These results show the model delivering strong performance from a lightweight package. MobileLLM-R1 raises expectations for what models under one billion parameters can do, particularly on tasks that require multi-step logical inference.

Who is MobileLLM-R1 for?

MobileLLM-R1 is primarily designed for developers, researchers, and enterprises focused on specialized, on-device applications. Target use cases include:

- Smartphones and Consumer Devices: Deploying high-accuracy code completion or mathematical solvers directly within mobile applications, minimizing reliance on cloud APIs.

- Industrial and Scientific Edge: Embedded systems in factories, laboratories, or field instruments requiring real-time, accurate calculations, data analysis, or code generation without internet connectivity.

- Educational Tools: Personalized learning apps that can generate, evaluate, or explain complex mathematical and coding problems locally and instantly.

Conclusion on MobileLLM-R1

Meta AI’s release of the MobileLLM-R1 series is a significant step in the development of efficient large language models. It signals that the industry is moving toward smaller, specialized models that can deliver competitive, complex reasoning without the enormous training budgets and computational resources demanded by their much larger predecessors.

MobileLLM-R1 sets a new bar for edge LLM deployment. By combining a resource-efficient training methodology with an efficiency-focused architecture, it shows that advanced AI capabilities, particularly in mathematics, coding, and scientific reasoning, can be made more widely accessible and sustainable.
