DeepSeek has officially launched DeepSeek-V3.1-Terminus, an updated version of DeepSeek-V3.1 that is now available on its official API platform. Alongside the API release, the company announced that the model will also be open-sourced, reinforcing DeepSeek’s commitment to the broader AI research community.

This release positions DeepSeek-V3.1-Terminus as a more stable, refined successor to DeepSeek-V3.1, with a focus on reliability and stronger agentic performance. The model offers two operational modes, thinking and non-thinking, both supporting a 128K-token context length to suit different application scenarios.

What is DeepSeek-V3.1-Terminus?

The DeepSeek-V3.1-Terminus model represents the latest iteration in DeepSeek’s series of large language models, designed to be a more stable and powerful tool for developers and researchers. According to official documentation, the new model builds on the strong foundation of DeepSeek-V3.1 while addressing critical issues that emerged after its initial launch.

Key improvements address occasional character anomalies and linguistic inconsistencies, in particular reducing Chinese-English language mixing and the random characters that appeared in outputs from the previous version. The name “Terminus,” from the Latin word for “end” or “boundary,” suggests this version may be the culmination of the V3 architecture series, signaling technological maturity.

The model retains the 671B-parameter Mixture-of-Experts design, activating 37B parameters per token, and uses FP8 micro-scaling for efficient inference. This foundation lets the model handle long enterprise documents and complex workflows.
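
The parameter figures above lend themselves to a quick back-of-the-envelope check. The sketch below is illustrative arithmetic only, not a DeepSeek tool: it computes the share of weights active per token and the approximate weight storage if every parameter were held in FP8 at one byte each. Real checkpoints also carry scaling factors and some higher-precision tensors, and serving needs extra memory for activations and the KV cache, so treat the storage figure as a lower bound.

```python
# Back-of-the-envelope figures using the parameter counts stated above.
TOTAL_PARAMS = 671e9   # total parameters
ACTIVE_PARAMS = 37e9   # parameters activated per token
BYTES_PER_FP8 = 1      # one byte per weight stored in FP8


def active_fraction(total: float, active: float) -> float:
    """Share of all parameters that participate in each token's forward pass."""
    return active / total


def fp8_weight_gb(params: float) -> float:
    """Approximate weight storage in GB (1e9 bytes), assuming every weight is FP8.

    Ignores scaling factors, higher-precision layers, activations, and KV cache.
    """
    return params * BYTES_PER_FP8 / 1e9


if __name__ == "__main__":
    print(f"active per token: {active_fraction(TOTAL_PARAMS, ACTIVE_PARAMS):.1%}")  # prints 5.5%
    print(f"FP8 weights: ~{fp8_weight_gb(TOTAL_PARAMS):.0f} GB")  # prints ~671 GB
```

In other words, each token touches only about one-eighteenth of the model's weights, which is what keeps per-token compute far below that of a dense 671B model.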

DeepSeek-V3.1-Terminus Performance

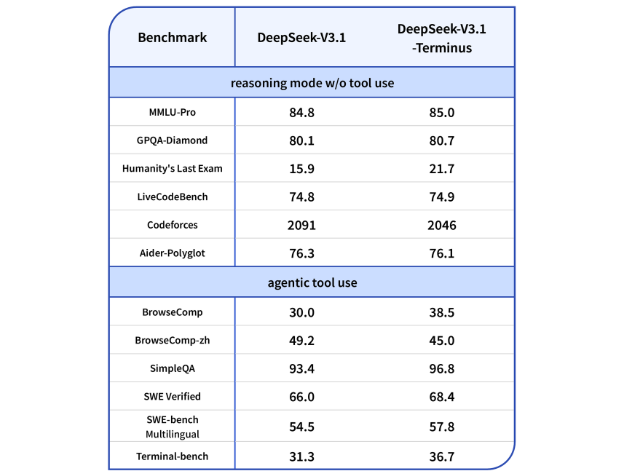

DeepSeek has published benchmark data showing the new model’s gains across a range of tests. On non-agentic evaluations, DeepSeek-V3.1-Terminus posted relative improvements of 0.2% to 36.5% over DeepSeek-V3.1.

The largest gain came on HLE (Humanity’s Last Exam), a benchmark of expert-level, high-difficulty questions spanning specialized knowledge, multimodal problems, and complex deep reasoning. This improvement points to stronger capabilities for tackling intricate, real-world problems.

On agentic tasks, the model shows clear gains in key areas. Official tests report higher scores on BrowseComp (from 30.0 to 38.5) and Terminal-bench (from 31.3 to 36.7), indicating stronger web browsing and terminal operation. These optimizations make DeepSeek-V3.1-Terminus a more effective choice for developers building AI agents that must interact with external tools and environments.
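
Benchmark gains can be stated in absolute points or relative to the old score, and the two readings differ noticeably. This small sketch computes both for the BrowseComp and Terminal-bench scores quoted above; the helper name `gains` is our own.

```python
# Agentic benchmark scores quoted above: (previous version, Terminus).
SCORES = {
    "BrowseComp": (30.0, 38.5),
    "Terminal-bench": (31.3, 36.7),
}


def gains(old: float, new: float) -> tuple:
    """Return (absolute gain in points, relative gain as a fraction of the old score)."""
    delta = new - old
    return delta, delta / old


if __name__ == "__main__":
    for name, (old, new) in SCORES.items():
        abs_gain, rel_gain = gains(old, new)
        print(f"{name}: +{abs_gain:.1f} points ({rel_gain:.1%} relative)")
```

BrowseComp gains 8.5 points, about 28% relative, while Terminal-bench gains 5.4 points, about 17% relative.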

Two Modes: Chat and Reasoner

DeepSeek-V3.1-Terminus is offered in two distinct operational modes to cater to different use cases:

1. deepseek-chat (Non-thinking Mode)

This mode is designed for interactive and tool-integrated applications. It supports function calling, which lets the model interact with external tools and APIs, as well as FIM (fill-in-the-middle) completion and JSON output. This makes it well suited to conversational agents, automated scripts, and applications that exchange structured data. Output defaults to 4K tokens and can be raised to a maximum of 8K tokens, suiting fast-response, conventional interactions.
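
DeepSeek’s API follows the OpenAI-compatible chat-completions format, so a `deepseek-chat` request that uses function calling or JSON output is a plain JSON body. The sketch below only builds such bodies and makes no network call. The `get_weather` tool is hypothetical, and the field names follow the OpenAI-style schema, so check DeepSeek’s current API reference before relying on them. In practice the body would be POSTed to the endpoint with an `Authorization: Bearer <API key>` header.

```python
import json

# OpenAI-compatible chat-completions endpoint; the bodies below would be POSTed here.
API_URL = "https://api.deepseek.com/chat/completions"


def tool_call_request(user_msg: str) -> dict:
    """Build a deepseek-chat request that offers the model one callable tool."""
    return {
        "model": "deepseek-chat",
        "messages": [{"role": "user", "content": user_msg}],
        "tools": [{
            "type": "function",
            "function": {
                "name": "get_weather",  # hypothetical tool implemented by your app
                "description": "Get the current weather for a city.",
                "parameters": {  # JSON Schema describing the tool's arguments
                    "type": "object",
                    "properties": {"city": {"type": "string"}},
                    "required": ["city"],
                },
            },
        }],
    }


def json_mode_request(user_msg: str) -> dict:
    """Build a deepseek-chat request that asks for a JSON object as output."""
    return {
        "model": "deepseek-chat",
        "messages": [
            # Assumption: JSON mode expects the prompt itself to ask for JSON.
            {"role": "system", "content": "Reply only with a JSON object."},
            {"role": "user", "content": user_msg},
        ],
        "response_format": {"type": "json_object"},
    }


if __name__ == "__main__":
    print(json.dumps(tool_call_request("What's the weather in Hangzhou?"), indent=2))
```

In the OpenAI-compatible format, if the model decides to call the tool, the response carries a `tool_calls` entry with the function name and JSON-encoded arguments; your code runs the function and sends the result back in a message with role `tool`.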

2. deepseek-reasoner (Thinking Mode)

This mode is built for deep, multi-step reasoning. It generates extended, coherent chains of logic and is optimized for complex reasoning, code generation, and long-form content creation. Output defaults to 32K tokens, with a maximum of 64K tokens, for scenarios that require deep logical analysis.
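
Because the two modes have different output budgets, client code often picks a `max_tokens` value per model. This hypothetical helper returns the mode’s default when nothing is requested and otherwise caps the request at the mode’s ceiling. It assumes “K” means 1,024 tokens, which the limits above do not specify.

```python
from typing import Optional

K = 1024  # assumption: "4K", "8K", etc. are multiples of 1,024 tokens

# model name -> (default output budget, maximum output budget), per the limits above
OUTPUT_LIMITS = {
    "deepseek-chat": (4 * K, 8 * K),
    "deepseek-reasoner": (32 * K, 64 * K),
}


def resolve_max_tokens(model: str, requested: Optional[int] = None) -> int:
    """Pick the max_tokens value to send for a given mode.

    Returns the mode's default if nothing is requested; otherwise clamps the
    request to the mode's ceiling. Unknown model names raise KeyError.
    """
    default, ceiling = OUTPUT_LIMITS[model]
    if requested is None:
        return default
    if requested <= 0:
        raise ValueError("max_tokens must be positive")
    return min(requested, ceiling)


if __name__ == "__main__":
    print(resolve_max_tokens("deepseek-chat"))              # prints 4096
    print(resolve_max_tokens("deepseek-chat", 20_000))      # prints 8192
    print(resolve_max_tokens("deepseek-reasoner", 50_000))  # prints 50000
```

Clamping on the client is a design choice: it avoids an API error at the cost of silently shortening an oversized request. Raising an exception instead would make the truncation visible to the caller.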

Both versions support a context length of 128,000 tokens, allowing for the processing of extensive documents, lengthy research papers, or substantial codebases in single interactions.
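
A long document plus a large output budget can overflow the context window. The sketch below assumes the window is shared by input and output tokens, as is typical for chat models, and checks whether a request fits and how much room remains for the prompt. Token counts must come from the model’s tokenizer, which is not included here, and the exact limit enforced by the API may differ slightly from the round figure used.

```python
CONTEXT_WINDOW = 128_000  # tokens, the figure stated above


def fits_in_context(prompt_tokens: int, max_output_tokens: int,
                    window: int = CONTEXT_WINDOW) -> bool:
    """True if the prompt plus the reserved output budget fit in one request.

    Assumes the window is shared by input and output tokens.
    """
    return prompt_tokens + max_output_tokens <= window


def max_prompt_tokens(max_output_tokens: int, window: int = CONTEXT_WINDOW) -> int:
    """Largest prompt that still leaves room for the requested output budget."""
    return max(window - max_output_tokens, 0)


if __name__ == "__main__":
    # A reasoner call reserving 32,000 output tokens leaves 96,000 for the prompt.
    print(max_prompt_tokens(32_000))         # prints 96000
    print(fits_in_context(100_000, 32_000))  # prints False
```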

Pricing and Accessibility

DeepSeek has adopted a transparent pricing scheme aimed at cost-effective AI services for developers and enterprise users. Input pricing depends on whether a request hits DeepSeek’s context cache: 0.5 RMB per million tokens on a cache hit and 4 RMB per million tokens on a cache miss. Output is priced at a flat 12 RMB per million tokens.
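
Since input price depends on cache hits, the cost of a call is easiest to estimate from the token counts the API reports. This sketch applies the prices above. The `usage` field names `prompt_cache_hit_tokens`, `prompt_cache_miss_tokens`, and `completion_tokens` are assumptions based on DeepSeek’s context-caching documentation, and for `deepseek-reasoner` we also assume reasoning tokens are billed as output.

```python
# Prices in RMB per million tokens, as listed above.
PRICE_INPUT_CACHE_HIT = 0.5
PRICE_INPUT_CACHE_MISS = 4.0
PRICE_OUTPUT = 12.0


def request_cost_rmb(hit_tokens: int, miss_tokens: int, output_tokens: int) -> float:
    """Cost of one request in RMB, given token counts per pricing bucket."""
    total = (hit_tokens * PRICE_INPUT_CACHE_HIT
             + miss_tokens * PRICE_INPUT_CACHE_MISS
             + output_tokens * PRICE_OUTPUT)
    return total / 1_000_000


def cost_from_usage(usage: dict) -> float:
    """Same calculation from an API usage object (field names are assumptions)."""
    return request_cost_rmb(
        usage.get("prompt_cache_hit_tokens", 0),
        usage.get("prompt_cache_miss_tokens", 0),
        usage.get("completion_tokens", 0),
    )


if __name__ == "__main__":
    # 100K-token prompt, 80% served from cache, 4K tokens of output.
    print(f"{request_cost_rmb(80_000, 20_000, 4_000):.3f} RMB")  # prints 0.168 RMB
```

In this example the 4,000 output tokens (0.048 RMB) cost more than the 80,000 cached input tokens (0.04 RMB), which is why caching pays off most for workloads that resend long, repeated prompts.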

This pricing gives DeepSeek a significant cost advantage over alternatives such as OpenAI’s GPT-4, which charges approximately $10 (78 HKD) per million tokens; DeepSeek’s 12 RMB output rate works out to about $1.68 (13.1 HKD) per million tokens.
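
The dollar and Hong Kong dollar figures above follow from simple currency conversions. The sketch below reproduces them; the exchange rates are assumptions chosen to match the quoted numbers, not live rates, so substitute current rates for any real comparison.

```python
# Assumed exchange rates (not live data), chosen to match the figures above.
RMB_PER_USD = 7.14
HKD_PER_USD = 7.8

DEEPSEEK_OUTPUT_RMB = 12.0  # RMB per million output tokens
GPT4_USD = 10.0             # USD per million tokens, as quoted above


def rmb_to_usd(rmb: float, rate: float = RMB_PER_USD) -> float:
    """Convert RMB to USD at the given (assumed) rate."""
    return rmb / rate


def usd_to_hkd(usd: float, rate: float = HKD_PER_USD) -> float:
    """Convert USD to HKD at the given (assumed) rate."""
    return usd * rate


if __name__ == "__main__":
    deepseek_usd = rmb_to_usd(DEEPSEEK_OUTPUT_RMB)
    print(f"DeepSeek output: ${deepseek_usd:.2f} "
          f"({usd_to_hkd(deepseek_usd):.1f} HKD) per million tokens")
    print(f"GPT-4 figure: ${GPT4_USD:.2f} ({usd_to_hkd(GPT4_USD):.0f} HKD) per million tokens")
    print(f"Price ratio: about {GPT4_USD / deepseek_usd:.1f}x")
```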

Accessing the newly released model is straightforward. DeepSeek-V3.1-Terminus has been fully integrated and is now available across multiple official platforms, including the DeepSeek official app, web interface, mini-program, and API. The open-source version is also available on Hugging Face and ModelScope, allowing researchers and developers to download and use the model freely.

Conclusion on DeepSeek-V3.1-Terminus

The release of DeepSeek-V3.1-Terminus reflects DeepSeek’s strategy of iterating on and refining its products based on community feedback. While many of the improvements are incremental, together they represent a significant step forward in model stability and performance.

The enhanced agentic capabilities and dual-mode design should benefit developers and researchers seeking a reliable, stable, tool-integrated large language model. Fixes for random character output and Chinese-English language mixing make this version particularly suited to production environments where reliability is essential.