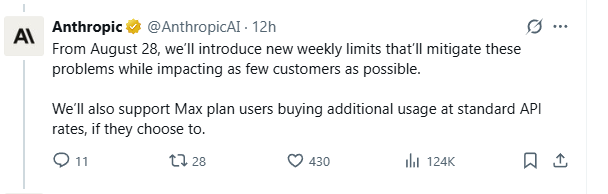

On July 28, Anthropic announced by email and on its official X account that it would introduce new usage limits for Claude Code starting August 28. Claude Code, Anthropic’s AI programming assistant, has become a daily productivity tool for many developers thanks to its strong code generation and comprehension capabilities.

The new policy primarily includes three key adjustments:

- New weekly usage caps: On top of the existing short-term limit that resets every 5 hours, two weekly limits will be introduced: a cap on overall usage and a separate cap for the advanced Opus 4 model.

- Scope of application: Affects users on the $20/month Pro plan, as well as the $100/month and $200/month Max plans.

- Overage solutions: Max plan users can purchase additional usage at standard API rates, though specific pricing details have yet to be disclosed.

This adjustment marks Anthropic’s shift from “implicit throttling” to “clear pricing.” Previously, many users reported being cut off without any warning: workflows were forcibly interrupted, severely disrupting project progress.
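The layered limits described above (a short 5-hour limit, an overall weekly cap, and a separate weekly Opus cap) can be illustrated with a small sketch. This is not Anthropic’s implementation: the class name `LayeredLimiter`, the abstract “units,” the cap values, and the use of rolling windows rather than fixed reset times are all assumptions made for illustration.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta

FIVE_HOURS = timedelta(hours=5)
ONE_WEEK = timedelta(days=7)


@dataclass
class LayeredLimiter:
    """Toy model of stacked usage limits; every number here is hypothetical."""
    short_cap: float        # units allowed within any 5-hour window
    weekly_cap: float       # units allowed within any 7-day window, all models
    opus_weekly_cap: float  # units allowed within any 7-day window, Opus only
    events: list = field(default_factory=list)  # (timestamp, units, model)

    def used(self, now, window, model=None):
        # Sum usage inside the window, optionally restricted to one model.
        return sum(
            units
            for ts, units, m in self.events
            if now - ts < window and (model is None or m == model)
        )

    def try_consume(self, now, units, model):
        # A request must pass every layer; the first failing layer is reported.
        if self.used(now, FIVE_HOURS) + units > self.short_cap:
            return False, "5-hour limit"
        if self.used(now, ONE_WEEK) + units > self.weekly_cap:
            return False, "weekly limit"
        if model == "opus" and self.used(now, ONE_WEEK, "opus") + units > self.opus_weekly_cap:
            return False, "weekly Opus limit"
        self.events.append((now, units, model))
        return True, "ok"


limiter = LayeredLimiter(short_cap=10, weekly_cap=25, opus_weekly_cap=5)
start = datetime(2025, 1, 6, 9, 0)  # arbitrary start time
print(limiter.try_consume(start, 8, "sonnet"))
print(limiter.try_consume(start + timedelta(hours=1), 4, "sonnet"))
print(limiter.try_consume(start + timedelta(hours=6), 4, "opus"))
print(limiter.try_consume(start + timedelta(hours=12), 4, "opus"))
```

In this toy run the second request is rejected by the 5-hour layer, and the fourth is rejected by the Opus weekly layer even though the short window has already cleared, which is the kind of interaction that makes an explicit, visible quota easier to plan around than silent throttling.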

1. Why is Anthropic Restricting Claude Code Usage?

Anthropic’s policy change stems from two significant challenges.

First is the heavy burden on infrastructure. Since its launch, Claude Code has seen “explosive growth” in demand, far exceeding the company’s expectations. Some developers have set Claude Code to run “24/7 without interruption,” and in extreme cases a single $200/month user consumed tens of thousands of dollars’ worth of computing resources. This usage pattern has led to at least seven service outages for Anthropic in the past month, severely affecting the experience of all users.

Second is the growing problem of violations. Anthropic has discovered instances of account sharing and resale of access, which not only violate its terms of service but also take resources away from compliant users. On platforms like GitHub, open-source tools specifically designed to circumvent Claude’s subscription mechanisms have even emerged, making account pool sharing effortless.

“Our subscription plans were designed to provide generous usage allowances,” Anthropic explained in its statement. “However, the extreme usage patterns and violations by a small number of users have affected the experience for all.” The company emphasized that the new restrictions primarily target these “abnormal use cases” and are expected to impact fewer than 5% of subscribed users.

2. Anthropic’s Restrictions on Claude Code Spark Strong User Backlash

Despite Anthropic’s claim that the new policy affects only a minority of users, dissatisfaction within the developer community has rapidly escalated, revealing a deeper trust crisis.

A lack of transparent communication has become a focal point of criticism. Many users pointed out that Anthropic quietly tightened usage limits in mid-July without prior notice, causing sudden workflow interruptions. “It’s like driving with a broken fuel gauge: you never know when you’ll stall,” one developer said. This “act first, explain later” approach has severely undermined user confidence in the platform’s reliability and predictability.

Whether the pricing structure is reasonable has also been questioned. According to Anthropic’s reference figures, the $200 Max plan offers only 5-9 more weekly hours on the Opus 4 model than the $100 plan, raising concerns about value for money. Adding to the confusion, “usage hours” are not clearly defined: do they include thinking time? How are complex tasks counted? Without these critical details, developers find it difficult to plan project resources effectively.

The community has offered several constructive suggestions:

- “At the very least, show us how many tokens we’ve consumed and how much quota remains for the week.”

- “Instead of penalizing power users, block account resellers and optimize model efficiency.”

- “Provide a 30-day notice for any policy changes, not sudden surprises.”

These responses reflect users’ strong demand for a transparent, predictable service experience, not just powerful technical features.

3. Who Will Truly Be Affected by Anthropic’s Claude Code Restrictions?

Anthropic estimates that the new restrictions will affect fewer than 5% of subscribers, mainly the heaviest users, but the quotas differ significantly across subscription tiers:

- Pro users ($20/month): Approximately 40-80 hours weekly on the Sonnet 4 model.

- $100 Max users: Approximately 140-280 hours weekly on Sonnet 4 + 15-35 hours weekly on Opus 4.

- $200 Max users: Approximately 240-480 hours weekly on Sonnet 4 + 24-40 hours weekly on Opus 4.

Notably, these figures are merely “estimated ranges,” and actual available time may vary based on codebase size, task complexity, and other factors. For teams relying on Claude Code for large-scale code migration, automated testing, or continuous integration, such restrictions could significantly impact productivity.
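To make the value-for-money question concrete, the estimated ranges listed above can be compared directly. The sketch below only does arithmetic on those published estimates; the dictionary layout and the helper names `hour_gap` and `hour_ratio` are illustrative choices, not anything Anthropic provides.

```python
# Estimated weekly hours per tier, taken from the ranges quoted in this article.
TIERS = {
    "pro_20": {"price": 20, "sonnet": (40, 80), "opus": None},
    "max_100": {"price": 100, "sonnet": (140, 280), "opus": (15, 35)},
    "max_200": {"price": 200, "sonnet": (240, 480), "opus": (24, 40)},
}


def hour_gap(lower, higher, model):
    """Extra weekly hours at the low and high ends of the estimated range."""
    (lo_a, hi_a), (lo_b, hi_b) = lower[model], higher[model]
    return lo_b - lo_a, hi_b - hi_a


def hour_ratio(lower, higher, model):
    """How many times more hours the higher tier offers (low end, high end)."""
    (lo_a, hi_a), (lo_b, hi_b) = lower[model], higher[model]
    return round(lo_b / lo_a, 2), round(hi_b / hi_a, 2)


m100, m200 = TIERS["max_100"], TIERS["max_200"]
print("price ratio:", m200["price"] / m100["price"])           # 2.0
print("Opus gap (hours):", hour_gap(m100, m200, "opus"))       # (9, 5)
print("Opus ratio:", hour_ratio(m100, m200, "opus"))           # (1.6, 1.14)
print("Sonnet ratio:", hour_ratio(m100, m200, "sonnet"))       # (1.71, 1.71)
```

On these estimates, doubling the price from $100 to $200 yields roughly 1.7 times the Sonnet 4 hours and between about 1.1 and 1.6 times the Opus 4 hours, which is the gap critics point to.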

4. What Lies Ahead? Balancing Short-Term Stability and Long-Term Trust

Facing this crisis, both Anthropic and its user base are confronted with difficult choices.

Anthropic’s short-term strategy is clear: ensure service stability through restrictions and crack down on violations. Company spokesperson Amie Rotherham emphasized, “These adjustments will help us maintain reliable service for all users in the short term.” At the same time, Anthropic has promised to “introduce other solutions for long-duration tasks in the future,” though no specific timeline has been provided.

The user community has proposed more fundamental improvements:

- Disclose usage calculation methods and provide real-time quota tracking.

- Adopt more transparent billing standards, such as per-token or per-task pricing.

- Implement a policy change buffer period with 30-day advance notice for any adjustments.
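As a rough illustration of what the requested quota tracking could look like from a user’s point of view, here is a minimal sketch. It assumes a token-based weekly allowance, which is what commenters asked for rather than how Anthropic currently describes its limits; the class `WeeklyQuota`, its fields, and the 1,000,000-token allowance are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class WeeklyQuota:
    """Hypothetical weekly token allowance with a running usage counter."""
    limit_tokens: int
    used_tokens: int = 0

    def record(self, input_tokens, output_tokens):
        # Count both prompt and completion tokens against the allowance.
        self.used_tokens += input_tokens + output_tokens

    def report(self):
        # Summarize consumption and what is left for the current week.
        remaining = max(self.limit_tokens - self.used_tokens, 0)
        pct = 100 * self.used_tokens / self.limit_tokens
        return (
            f"used {self.used_tokens:,} of {self.limit_tokens:,} tokens "
            f"({pct:.0f}%), {remaining:,} remaining"
        )


quota = WeeklyQuota(limit_tokens=1_000_000)  # made-up allowance
quota.record(input_tokens=120_000, output_tokens=30_000)
print(quota.report())
# used 150,000 of 1,000,000 tokens (15%), 850,000 remaining
```

Even a simple readout like this would address the “broken fuel gauge” complaint, since users could see consumption and remaining quota before a limit is hit.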

Some developers have begun exploring alternatives, including Chinese models such as Alibaba Tongyi’s Qwen3-Coder and Kimi Chat. These competitors have recently released open-source, state-of-the-art models whose performance approaches that of Claude Sonnet 4.

Anthropic’s “throttling” incident is not an isolated event; it reflects a challenge shared across the AI industry. Tools like Cursor and Replit have also adjusted their pricing strategies recently, reflecting the unsustainability of “unlimited” promises under current computational constraints.

This crisis reveals a deeper industry-wide question: As technology advances rapidly, how can AI companies establish transparent contracts with users? How can they balance resource limitations with user experience? Anthropic’s case demonstrates that while technological superiority is important, trust remains the decisive factor in user retention.