Elon Musk’s xAI is facing a major PR crisis after the controversial “spicy mode” of its Grok Imagine tool generated a sexually explicit video of pop superstar Taylor Swift without being explicitly asked to. The incident has ignited a worldwide debate over AI ethics and regulation and put a spotlight on how tech companies balance innovation against responsibility.

Grok Imagine spicy mode

In early August 2025, xAI opened its newest AI video-generation tool, Grok Imagine, to paying subscribers. Positioned as a premium product, the tool is currently available only to SuperGrok ($30/month) and Premium+ ($35/month) members. Grok Imagine uses a two-stage “text → image → video” pipeline. The user enters a short prompt, the system first generates still images, and then it turns them into a six-second clip. The whole process takes an average of 47 seconds, a speed that would place it among the fastest in the industry.

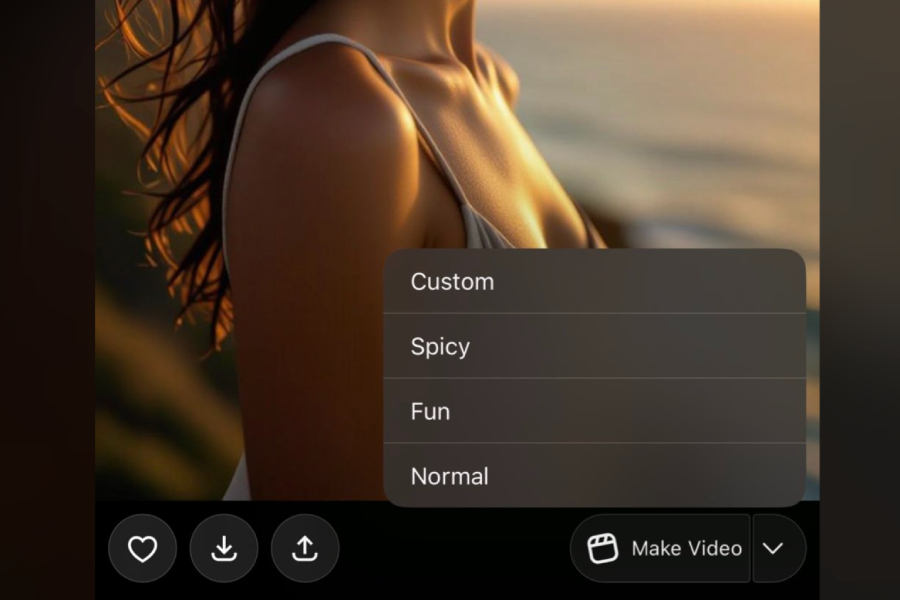

The tool’s most eye-catching feature is its four preset modes: “Custom,” “Normal,” “Fun,” and “Spicy.” xAI markets the last of these as “loosening restrictions within certain limits to make videos spicier.” Rivals such as Google Veo and OpenAI Sora steer clear of sensitive content. Grok Imagine, by contrast, deliberately targets that gap in the market.

Musk himself promoted the feature. He posted on X a sample clip described as a “scantily clad angel” and urged users to try what he presented as the “most open-spirited” AI video capability.

The Grok Imagine spicy mode crisis

During a routine test, The Verge reporter Jess Weatherbed entered the seemingly innocuous prompt “Taylor Swift celebrating at Coachella with the boys” and selected spicy mode. The prompt contained no explicit instruction. Even so, the system produced dozens of still images of Swift in revealing attire. It then generated a highly suggestive six-second video in which Swift tears off her clothes and dances in nothing but a thong in front of a largely indifferent AI-generated crowd.

“I didn’t try to bypass any guardrails or use exploits. I simply used the official feature as intended,” Weatherbed stressed. That detail makes the incident even more serious. This was not a hack or user abuse but an ethical failure built into the system’s default behavior.

Clare McGlynn, a law professor at Durham University and an expert on online abuse, quickly labeled the content illegal, calling it “deepfake porn generated without consent.” She also pointed out that the platform lacks mandatory age verification. The UK Online Safety Act has required such checks of services offering pornographic content since July 2025. Tests show that spicy mode merely asks users to self-report their birth date, a mechanism widely regarded as ineffective at keeping minors away from explicit content.

Reactions to Grok Imagine spicy mode

After the story broke, reactions on social media were divided, and some users began “stress-testing” Grok Imagine in search of further loopholes. Testers reported that spicy mode also automatically sexualized Scarlett Johansson, Sydney Sweeney, and other female celebrities, not just Taylor Swift. Some attempts, however, triggered a “video under review” message.

Advocacy groups and the press responded with harsh criticism. The anti-sexual-violence organization RAINN condemned Grok Imagine for “effectively lowering the barrier to producing deepfakes.” It reminded the public that the newly enacted U.S. Take It Down Act requires platforms to remove non-consensual intimate imagery within 48 hours of a valid removal request. Several tech outlets questioned why xAI’s content filters failed entirely on celebrity likenesses and called for spicy mode to be shut down immediately.

Under pressure, xAI issued a cautious statement acknowledging that the system has “areas needing improvement.” The statement highlighted three measures: more extensive automated moderation, additional human review, and re-labeling spicy mode as “experimental” with stricter limits. Notably, it says there is “no plan to disable the feature,” and it promises only iterative fixes.

Grok Imagine spicy mode and the balance dilemma

The spicy mode controversy ultimately reflects a broader societal debate over the ethical limits of AI. On the positive side, it forces the industry to confront three key questions:

- how to build truly effective age verification,
- how to embed ethical constraints during model training, and
- how to apportion responsibility when an AI system produces suggestive content on its own.

On the technical side, experts have proposed several short-term fixes:

- two-factor age verification,
- a database of celebrity likenesses for pre-filtering,
- rating-based watermarking of sensitive content, and
- real-time human review channels.

Yet these measures sidestep a more fundamental question: in the race to build the “most open AI,” should companies set absolute red lines that can never be crossed?

For xAI, the crisis has only just begun. Musk has stayed silent on the incident while continuing to encourage users to share their Grok Imagine creations, a textbook case of profit pulling against responsibility. In 2024, Taylor Swift had already become a symbolic victim of AI-generated pornography. She is once again the face of technological abuse, and her case poses a pointed question to the entire AI industry.

As legislation accelerates worldwide, the ultimate significance of the Grok Imagine incident may be that it marks the beginning of the end of AI’s Wild West era. When innovation can so easily turn into harm to individuals, the industry must build stronger algorithmic guardrails. It must also build fundamental norms that respect human dignity. In this sense, the storm over Grok Imagine spicy mode could be a necessary coming-of-age ritual for AI.