Google has launched EmbeddingGemma, an open text embedding model designed specifically for on-device applications.

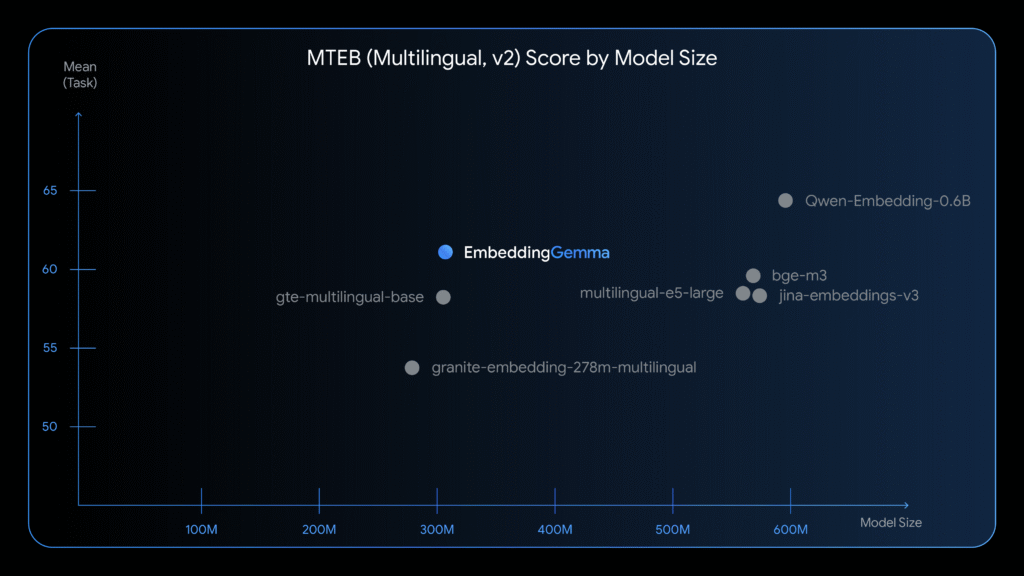

Designed with efficiency in mind, the 308-million-parameter model has already claimed the top spot on the Massive Text Embedding Benchmark (MTEB) for multilingual models under 500 million parameters, establishing a new standard for compact yet high-performing AI tools.

Read on for a look at its core features, practical applications, and how it compares with Google’s other embedding options.

Key Features of EmbeddingGemma

EmbeddingGemma represents a significant leap in making advanced AI accessible on consumer devices without sacrificing performance. Below are some of its standout characteristics:

1. Compact and High-Performing

Despite its relatively small size, EmbeddingGemma delivers performance comparable to embedding models nearly twice its parameter count. This lets it run efficiently on devices with limited computational resources while still leading its size class in accuracy.

2. Flexible Output Dimensions

The model’s default output is a 768-dimensional embedding. Thanks to Matryoshka Representation Learning (MRL), that embedding can be truncated to 512, 256, or 128 dimensions. Developers can therefore trade a small amount of precision for lower storage and faster similarity search. This makes the model suitable for everything from lightweight mobile apps to more demanding desktop integrations.
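The truncation step itself is simple. Below is a minimal Python sketch. It assumes you already have a full 768-dimensional embedding as a list of floats; obtaining it from the model is not shown. The sketch keeps the leading components, which is how MRL-style embeddings are meant to be shortened. It then re-normalizes the result to unit length so that cosine or dot-product similarity stays comparable. The function name `truncate_embedding` is hypothetical and not part of any library.

```python
import math

# Output sizes listed for EmbeddingGemma's Matryoshka-style embeddings.
SUPPORTED_DIMS = (768, 512, 256, 128)


def truncate_embedding(vec: list[float], dim: int) -> list[float]:
    """Keep the first `dim` components and rescale to unit length (hypothetical helper)."""
    if dim not in SUPPORTED_DIMS:
        raise ValueError(f"dim must be one of {SUPPORTED_DIMS}, got {dim}")
    if dim > len(vec):
        raise ValueError("target dimension exceeds the embedding length")
    # MRL trains the leading dimensions to work as a standalone, shorter embedding.
    head = vec[:dim]
    norm = math.sqrt(sum(x * x for x in head))
    # Guard against an all-zero prefix to avoid division by zero.
    return head if norm == 0.0 else [x / norm for x in head]


# Usage with a stand-in vector (a real embedding would come from the model).
full = [0.5] * 768
small = truncate_embedding(full, 128)
print(len(small))
```

At 128 dimensions, a float32 vector takes 512 bytes instead of 3,072, a sixfold saving in index storage.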

3. Optimized for Real-Time Use

EmbeddingGemma is built for real-time applications. It has a 2K-token context window, and Google reports embedding inference under 15 milliseconds (measured on 256 input tokens on EdgeTPU hardware). It runs on smartphones, laptops, and desktops, which makes it well suited to applications that need instant feedback.
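Inputs longer than the context window have to be split before embedding. Below is a minimal sketch of overlapping chunking. It assumes the text has already been converted to token IDs by the model’s tokenizer, which is not shown. It uses 2,048 tokens as the concrete value for the roughly 2K-token window; check the model card for the exact figure. The `chunk_tokens` name and the 128-token overlap are illustrative choices, not part of any official API.

```python
def chunk_tokens(token_ids: list[int], max_len: int = 2048, overlap: int = 128) -> list[list[int]]:
    """Split a token sequence into windows of at most `max_len` tokens.

    Consecutive windows share `overlap` tokens (hypothetical helper).
    """
    if max_len <= 0:
        raise ValueError("max_len must be positive")
    if not 0 <= overlap < max_len:
        raise ValueError("overlap must be in [0, max_len)")
    step = max_len - overlap  # how far each new window advances
    chunks = []
    for start in range(0, len(token_ids), step):
        chunks.append(token_ids[start:start + max_len])
        # Stop once a window reaches the end of the sequence.
        if start + max_len >= len(token_ids):
            break
    return chunks


# Usage: 5,000 stand-in token IDs split into context-sized windows.
tokens = list(range(5000))
chunks = chunk_tokens(tokens)
print([len(c) for c in chunks])
```

The overlap keeps sentences that straddle a boundary visible in both neighboring chunks, at the cost of embedding a few tokens twice.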

4. Enhanced Retrieval-Augmented Generation (RAG)

One of EmbeddingGemma’s strongest suits is improving RAG pipelines. By converting text into high-dimensional numerical vectors, it enables precise semantic-similarity comparisons. This yields more accurate retrieval of contextually relevant passages. That in turn leads to higher-quality generated responses in applications such as AI assistants and search tools.
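The retrieval step reduces to ranking stored passage vectors by their cosine similarity to the query vector. The sketch below uses hypothetical passage IDs and made-up 3-dimensional vectors purely for illustration. In a real pipeline, each vector would be an EmbeddingGemma embedding (768 dimensions or a truncated size). The top-ranked passages would then be passed to the generator as context.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def top_k(query: list[float], passages: dict[str, list[float]], k: int = 2) -> list[tuple[str, float]]:
    """Return the k passage IDs most similar to the query, best first."""
    scored = [(pid, cosine(query, vec)) for pid, vec in passages.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:k]


# Hypothetical toy vectors standing in for real embeddings.
passages = {
    "battery-tips": [0.9, 0.1, 0.0],
    "pasta-recipe": [0.0, 0.2, 0.95],
    "charging-faq": [0.6, 0.5, 0.2],
}
query = [0.85, 0.2, 0.05]

for pid, score in top_k(query, passages):
    print(f"{pid}: {score:.3f}")
```

If embeddings are normalized to unit length, cosine similarity equals the plain dot product, which many vector stores exploit for speed.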

5. Privacy and Offline Functionality

Because the model runs entirely on-device, user data never has to leave the local environment. This privacy-first approach suits applications that handle sensitive information. Offline operation also means the application keeps working without an internet connection.

How to Access EmbeddingGemma

Google has made EmbeddingGemma readily available to developers through multiple platforms. The model weights can be downloaded from Hugging Face, Kaggle, and Google’s own Vertex AI.

It is also compatible with a wide range of popular tools and frameworks, including sentence-transformers, LlamaIndex, LangChain, Ollama, and transformers.js, among others.

Comprehensive documentation and integration guides are available on Google’s official AI development portal at ai.google.dev.

EmbeddingGemma vs. Gemini Embedding

Google provides two powerful but distinct tools for text embedding: the on-device EmbeddingGemma and the cloud-based Gemini Embedding API. Your choice between them should be based on the specific demands of your project:

- Choose EmbeddingGemma if you are developing for mobile or edge devices, require offline functionality, prioritize data privacy, or need low-latency real-time inference.

- Opt for the Gemini Embedding API if your application operates at scale on the server side, demands the highest possible embedding quality, and can rely on cloud-based processing.

In short, EmbeddingGemma is tailored for privacy-sensitive and latency-critical scenarios, while the Gemini API serves large-scale, high-performance cloud applications.

Conclusion on EmbeddingGemma

EmbeddingGemma marks a notable advancement in the democratization of on-device AI. By combining high performance with minimal resource use, it allows developers to build sophisticated applications—such as personalized chatbots, domain-specific semantic search systems, and efficient document retrieval tools—without depending on cloud infrastructure.

Its small footprint, multilingual support, and fine-tuning capabilities make it an attractive option for developers worldwide looking to incorporate state-of-the-art embedding technology into consumer-grade devices.


FAQs About EmbeddingGemma

What is the model size of EmbeddingGemma?

The model contains 308 million parameters. With quantization, its memory footprint can be reduced to under 200MB, making it suitable for most modern mobile devices.
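A back-of-the-envelope calculation shows why quantization matters here. The sketch below estimates the size of the raw weights alone at a few common precisions. It ignores activations, the tokenizer, and runtime overhead. Real quantized builds also often mix precisions, so treat the numbers as rough estimates rather than measured figures.

```python
PARAMS = 308_000_000  # parameter count stated for EmbeddingGemma

# Bits per weight for a few common storage formats (illustrative selection).
FORMATS = {"float32": 32, "float16/bfloat16": 16, "int8": 8, "int4": 4}


def weight_footprint_mb(params: int, bits: int) -> float:
    """Approximate size of the raw weights in megabytes (10**6 bytes)."""
    return params * bits / 8 / 1_000_000


for name, bits in FORMATS.items():
    print(f"{name:>17}: ~{weight_footprint_mb(PARAMS, bits):,.0f} MB")
```

At 32 bits per weight, the 308 million parameters need about 1,232 MB, while 4-bit weights come to roughly 154 MB. This suggests the sub-200MB figure relies on aggressive quantization of around 4 bits per weight.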

Can I fine-tune EmbeddingGemma?

Yes. Developers can fine-tune the model using custom datasets to improve performance for specialized domains or particular languages.

What languages does EmbeddingGemma support?

The model was trained on data from over 100 languages, offering robust multilingual capabilities for a wide range of geographic and linguistic contexts.

What kinds of applications benefit most from EmbeddingGemma?

It is particularly well-suited for mobile AI assistants, offline search tools, privacy-focused chatbots, and any application requiring efficient and private on-device language processing.