Google DeepMind has launched VaultGemma, a new open-source large language model (LLM) designed with a core emphasis on user data protection.

As the largest openly available language model trained end-to-end using Differential Privacy (DP), this one-billion-parameter release represents a major advancement in building AI systems that prioritize privacy from the ground up.

According to Google's internal evaluations, VaultGemma shows no detectable memorization of its training data, which substantially reduces the risk of privacy violations and strengthens trust among developers and end users.

What Is the Architecture of VaultGemma?

Built on the Gemma 2 architecture, VaultGemma incorporates several adaptations tailored to the requirements of differentially private training.

Key specifications include:

- Parameter Size: 1 billion

- Layers: 26

- Transformer Type: Decoder-only

- Activation: GeGLU, with a feedforward dimension of 13,824

- Attention Mechanism: Multi-Query Attention (MQA) with a context length of 1,024 tokens

- Normalization: RMSNorm in a pre-norm setup

- Tokenizer: SentencePiece with a vocabulary of 256K tokens
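To make two of the listed components concrete, here is a minimal pure-Python sketch of RMSNorm in a pre-norm arrangement and of the GeGLU gating step. It illustrates the general techniques only and is not VaultGemma's actual implementation: the function names, the `eps` value, the plain multiplicative gain, and the exact (erf-based) GELU are all assumptions made for this example.

```python
import math

def rms_norm(x, gain, eps=1e-6):
    """Divide x by its root-mean-square, then apply a per-feature gain."""
    mean_sq = sum(v * v for v in x) / len(x)
    inv_rms = 1.0 / math.sqrt(mean_sq + eps)
    return [v * inv_rms * g for v, g in zip(x, gain)]

def gelu(v):
    """Exact GELU; real models often use a tanh approximation instead."""
    return 0.5 * v * (1.0 + math.erf(v / math.sqrt(2.0)))

def geglu(gate, up):
    """GeGLU gating: GELU of the gate projection times the up projection."""
    return [gelu(g) * u for g, u in zip(gate, up)]

def pre_norm_residual(x, gain, sublayer):
    """Pre-norm block: normalize first, run the sublayer, add the residual."""
    return [xi + si for xi, si in zip(x, sublayer(rms_norm(x, gain)))]

# Toy usage with a hypothetical 2-dimensional hidden state.
hidden = [3.0, 4.0]
normed = rms_norm(hidden, gain=[1.0, 1.0])
gated = geglu(gate=normed, up=[1.0, 1.0])
print(normed, gated)
```

In a full model, `gate` and `up` would come from two separate linear projections of the normalized hidden state into the 13,824-wide feedforward dimension, followed by a projection back down.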

A notable design decision was shortening the sequence length to 1,024 tokens. DP training is computationally expensive, and shorter sequences lower the cost of each training example, which makes much larger batch sizes affordable. The model carries a formal sequence-level privacy guarantee of ε ≤ 2.0 (with δ ≤ 1.1e-10), meaning the unit of protection is a single 1,024-token training sequence.
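For readers unfamiliar with how DP training works mechanically, the sketch below shows one gradient computation in the style of DP-SGD, the standard recipe of per-example clipping followed by Gaussian noise. It is a generic illustration and not Google's training code: the function names and the toy gradients are hypothetical. In a sequence-level setting, each "example" here would correspond to one training sequence.

```python
import math
import random

def clip_to_norm(grad, max_norm):
    """Scale a gradient down so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [g * scale for g in grad]

def dp_sgd_gradient(per_example_grads, max_norm, noise_multiplier, rng):
    """Clip each example's gradient, sum, add Gaussian noise, then average."""
    batch_size = len(per_example_grads)
    dim = len(per_example_grads[0])
    summed = [0.0] * dim
    for grad in per_example_grads:
        for i, g in enumerate(clip_to_norm(grad, max_norm)):
            summed[i] += g
    # Noise is calibrated to the clipping bound, i.e. to the most that any
    # single example can contribute to the sum.
    sigma = noise_multiplier * max_norm
    return [(s + rng.gauss(0.0, sigma)) / batch_size for s in summed]

# Toy usage: two hypothetical per-example gradients in two dimensions.
rng = random.Random(0)
grads = [[3.0, 4.0], [0.3, 0.4]]
print(dp_sgd_gradient(grads, max_norm=1.0, noise_multiplier=1.0, rng=rng))
```

Clipping bounds how much any one example can influence the update, and the noise masks whatever influence remains. Computing and clipping a separate gradient for every example is a large part of what makes DP training more expensive than ordinary training.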

Key Features of VaultGemma

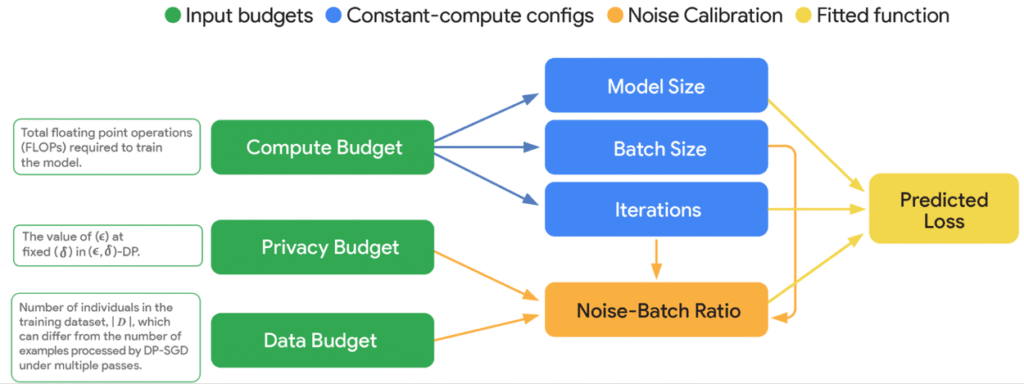

One of the most significant challenges in differentially private training is the trade-off between privacy and model utility. Google’s team applied new DP scaling laws to navigate this trade-off, giving them a structured way to balance compute budget, injected noise, and final model performance.

These guidelines enabled the efficient training of VaultGemma on Tensor Processing Unit (TPU) clusters, using batch sizes that occasionally reached into the millions of examples. The result is a model that not only meets stringent privacy criteria but also remains functionally competitive for its size and class.
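A rough way to see why very large batches help: in a DP-SGD-style update, the noise added to the summed gradient has a fixed scale, so after averaging its standard deviation shrinks in proportion to the batch size. The snippet below illustrates that arithmetic only. The noise multiplier, clipping norm, and batch sizes are hypothetical values chosen for the example, not VaultGemma's training settings.

```python
def noise_std_on_mean_gradient(noise_multiplier, max_norm, batch_size):
    """Std of the Gaussian noise per coordinate after averaging over the batch."""
    return noise_multiplier * max_norm / batch_size

# Hypothetical settings: same noise multiplier and clipping norm, growing batch.
for batch_size in (1_024, 65_536, 1_048_576):
    std = noise_std_on_mean_gradient(1.0, 1.0, batch_size)
    print(f"batch={batch_size:>9,d}  noise std on mean gradient={std:.2e}")
```

The privacy accounting also depends on the number of training steps and the sampling rate, so a larger batch is not free; scaling laws of the kind described above are what guide how to trade these quantities off against compute.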

Who Is VaultGemma For?

VaultGemma is particularly suited to industries that operate under strict data governance regulations, such as healthcare, finance, and legal services. Because its privacy guarantee comes from the training procedure itself and is stated mathematically, it offers a formal safeguard against exposure of training data, making it appropriate for applications involving sensitive or non-public information.

In line with Google’s effort to promote accessible and secure AI, the company has released VaultGemma under an open-source license. The model weights, along with relevant code libraries, are accessible on platforms like Hugging Face and Kaggle.

This transparency allows researchers and developers to further explore, adapt, and implement privacy-preserving language technologies in their own projects.

Conclusion on VaultGemma

Google DeepMind’s release of VaultGemma marks a pivotal advancement in the development of privacy-aware AI systems. While its performance is akin to that of non-private models from several years ago, its mathematical privacy guarantees set a new precedent in the field. This model not only provides a practical solution for privacy-sensitive applications but also establishes a foundation for future innovation in ethical and secure artificial intelligence.

By openly sharing its design and training methodology, Google encourages collective progress toward AI technologies that are both powerful and respectful of user privacy, a crucial balance as language models become increasingly integrated into daily life.
